Notice:

This post is older than 5 years – the content might be outdated.

With Kubernetes gaining more and more popularity, it was only a matter of time until Microsoft brought it to their platform. In March 2019, Kubernetes announced production-level support for Windows nodes with version 1.14. But does Kubernetes on Windows actually work as advertised? How is it different? And is it even useful? In this blog post, I want to shed some light on these questions.

Timeline

To give you a feeling for what to expect and to put the work Microsoft has done into perspective, I want to give a quick overview of the timeline. The project started with an alpha release for Kubernetes version 1.5 in December 2016. At this point it was nothing more than a proof of concept, an experiment to see if it was possible to run kubelet and kube-proxy on a Windows node. A year later, the project graduated to beta with Kubernetes version 1.9. This release added a lot of functionality, especially regarding container networking, but also more general enhancements. These included improvements to cloud providers, volume support, and support for adding Windows nodes.

Finally, the stable release arrived in March 2019 with Kubernetes version 1.14, which enabled production-level Windows nodes.

Windows Containers

To start this off, I want to quickly introduce Windows Containers. If you know conventional Linux containers already, well, Windows Containers are not much different.

If you’ve never heard of containers you can think of them as little boxes that contain your application, a runtime, dependencies, and everything else your application needs.

Containers are a way to isolate the software running inside of them from the host operating system, so this box will be able to reliably run in many different environments.

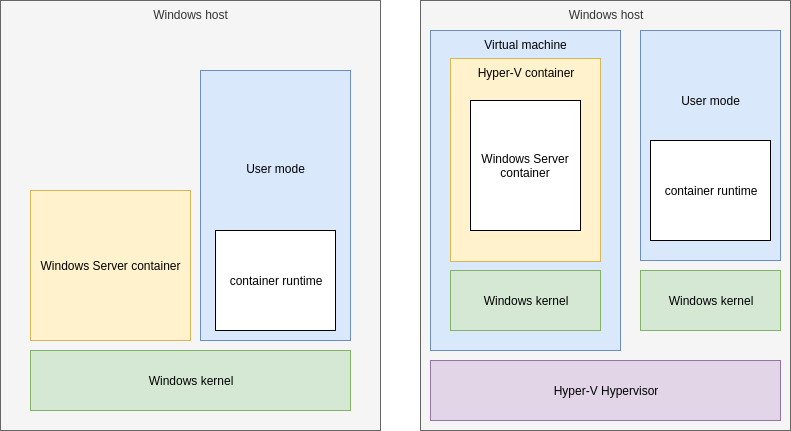

On Windows, there are two types: Windows Server containers and Hyper-V containers. Windows Server containers provide isolation through process and namespace isolation. However, they still share the kernel with the container host and all other containers. This is similar to how Linux does it.

With Hyper-V isolation you can visualize your containers as little lightweight virtual machines. Containers don’t have to share the kernel with the container host or other containers.

Kubernetes currently only supports Windows Server containers. Consequently, there are strict compatibility rules where the host OS version must match the container base image OS version. This limits the available host OS versions to Windows Server 1809/Windows Server 2019 and newer. This will change when Kubernetes supports Hyper-V containers.
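
The version-matching rule can be sketched as a small check. This is only an illustration and not part of Kubernetes or Docker: the function names are hypothetical, the version strings are example values, and it assumes that "matching" means the major, minor, and build components of the Windows version are equal (build 17763 corresponds to Windows Server 1809 / Windows Server 2019).

```python
from typing import Tuple


def build_triple(version: str) -> Tuple[int, int, int]:
    """Return (major, minor, build) from a version string such as '10.0.17763.1'."""
    parts = version.split(".")
    if len(parts) < 3:
        raise ValueError(f"expected at least major.minor.build, got {version!r}")
    major, minor, build = (int(part) for part in parts[:3])
    return major, minor, build


def can_run_process_isolated(host_version: str, image_version: str) -> bool:
    """Assumed rule: host and base image must agree on major, minor, and build.

    The trailing revision number (patch level) is ignored in this sketch.
    """
    return build_triple(host_version) == build_triple(image_version)


if __name__ == "__main__":
    host = "10.0.17763.1"  # example value: a Windows Server 2019 host
    for image in ("10.0.17763.2", "10.0.17134.1"):
        print(image, "->", can_run_process_isolated(host, image))
```

In this sketch, an image built on 17763 is accepted on a 17763 host, while an image built on the older 17134 build is rejected.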

What Is Different, What Stays the Same?

There are still a few limitations on Windows stemming from the fundamental differences between the two operating systems.

There is currently no way to run privileged containers on Windows. This has ramifications for a couple of different use cases, such as Container Network Interface (CNI) plugins that rely on agents running in privileged daemon sets, or CSI drivers that need to make syscalls to the OS and mount volumes accessible to other host processes. Neither is possible without privileged containers.

Another area that Windows handles differently is how Docker calls the underlying containerization primitives (jobs, namespaces, etc.). Linux exposes each functionality independently, whereas Microsoft introduces two APIs (Host Compute Service (HCS) & Host Networking Service (HNS)) to act as a layer of abstraction. The HCS is responsible for creating containers while the HNS is responsible for creating a network and setting up the network endpoints.

On Linux, you configure your network through file mappings. This is not possible on Windows, where the configuration data is stored in the Windows registry database. This database is unique for each container, so simple file mappings won’t work, and CNI implementations need to call the HNS instead. This is the reason that there are currently so few CNI plugins available. You can use Microsoft’s own Azure-CNI, which is only available on Azure; ovn-kubernetes, which builds on Open Virtual Network (OVN); or flannel, the recommended CNI for everything else.

And if you were hoping that you could completely forgo the use of Linux, I’m sorry to tell you that you can’t: the entire control plane, meaning kube-apiserver, kube-controller-manager, and kube-scheduler, continues to run on Linux only. There are no plans to have a Windows-only cluster.

But fortunately, controlling Windows containers with kubectl and using the API is essentially the same as on Linux. If you already know your way around Kubernetes this will not be a big jump for you. In fact, you can have the best of both worlds by deploying a mixed cluster with both Linux nodes and Windows nodes.
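
In a mixed cluster, a Windows workload has to be steered onto a Windows node. A minimal sketch of how that might look is shown here: it builds a Pod manifest with a node selector on the well-known `kubernetes.io/os` label and prints it as JSON, which kubectl accepts just like YAML. The helper name, the pod name, and the image are illustrative assumptions, and the image tag would have to match your host OS version.

```python
import json


def windows_pod_manifest(name: str, image: str) -> dict:
    """Build a Pod manifest that should only be scheduled onto Windows nodes."""
    return {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {"name": name},
        "spec": {
            "containers": [{"name": name, "image": image}],
            # Well-known node label; Linux nodes carry the value "linux".
            "nodeSelector": {"kubernetes.io/os": "windows"},
        },
    }


if __name__ == "__main__":
    # Pod name and image are placeholders for illustration only.
    manifest = windows_pod_manifest(
        "windows-demo", "mcr.microsoft.com/windows/servercore:ltsc2019"
    )
    print(json.dumps(manifest, indent=2))
```

Saved under a name of your choice, for example `pod.py`, the output can be piped straight into the cluster with `python pod.py | kubectl apply -f -`. Linux workloads in the same cluster would use the same selector with the value `linux`.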

If you want a more detailed account of what works and what doesn’t, have a look at the Kubernetes docs.

How to Create a Windows Cluster

At the time of writing, you have three options to choose from. The first is the you-don’t-have-to-do-anything-at-all approach: Microsoft’s fully managed Azure Kubernetes Service. With this, you’ll have your cluster up and running in less than 10 minutes. The downside is that there’s not much to configure, and Windows nodes are still only a preview feature, so there are additional limitations.

Another option is to use the AKS Engine. AKS Engine is an open-source tool that allows you to quickly bootstrap a Kubernetes cluster on Azure (AKS Engine is also the library used by Azure Kubernetes Service, hence the name). It uses the Azure Resource Manager to deploy all the resources you specified beforehand. It also gives you more freedom to configure the cluster how you want it.

The last approach would be to set it up yourself. With a normal cluster you can just use kubeadm to both bootstrap the master node and join all your worker nodes. This is not yet possible on Windows. While you can still use kubeadm for master nodes, creating worker nodes is a little more complicated than that.

SIG-Windows provides PowerShell scripts that help install the necessary components. The scripts install dockerd, the process Docker uses to manage containers, and set up a pause image, which is used to set up the initial network namespace for a pod. After that, they install and start the kubelet first, then the CNI plugin (flannel), and finally the kube-proxy. They create a Windows Service for every binary and start it. During the installation of the CNI plugin, they additionally call the HNS API to create a network.
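
The order of these steps matters, so it may help to see it written down as data. The following is only a sketch of the sequence described above, not the SIG-Windows scripts themselves: the names `WORKER_NODE_STEPS`, `position`, and `starts_before` are made up for this illustration, and no real installation commands are executed.

```python
from typing import List, Tuple

# (component, role) pairs in the order described in the text above.
WORKER_NODE_STEPS: List[Tuple[str, str]] = [
    ("dockerd", "process Docker uses to manage containers"),
    ("pause image", "sets up the initial network namespace for a pod"),
    ("kubelet", "installed and started as a Windows Service"),
    ("flannel", "CNI plugin; its installation also creates a network via the HNS API"),
    ("kube-proxy", "installed and started last, as a Windows Service"),
]


def position(component: str) -> int:
    """Return the zero-based position of a component in the setup order."""
    for index, (name, _role) in enumerate(WORKER_NODE_STEPS):
        if name == component:
            return index
    raise KeyError(component)


def starts_before(first: str, second: str) -> bool:
    """True if `first` is set up before `second` in this sketch."""
    return position(first) < position(second)


if __name__ == "__main__":
    for number, (name, role) in enumerate(WORKER_NODE_STEPS, start=1):
        print(f"{number}. {name}: {role}")
```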

This is bound to get easier in the future. In fact, SIG-Windows plans to release a working kubeadm implementation in Kubernetes version 1.16.

Use Cases for Kubernetes on Windows

Windows Server and .NET still host a large percentage of enterprise workloads in the business world. Being able to shift your applications to containers without changing the operating system, and without all the work that comes along with that, is a huge advantage. You don’t have to worry about Windows dependencies anymore. Previously, you had to be careful, since .NET Core does not include all the functionality of the full .NET Framework.

Additionally, companies with a Windows server infrastructure will want to continue operating Windows servers. They do not want to interrupt their workflow with a new server operating system on top of Kubernetes. Just be aware that the earliest supported versions are Windows Server 1809 / Windows Server 2019.

But Does It Work?

A lot of work has obviously gone into bringing Kubernetes to Windows. I started with this project in March 2019, just weeks before the release of Kubernetes version 1.14 and thus stable support for Windows nodes. Before 1.14, it was still very clunky. Pods couldn’t communicate half of the time, service discovery was rather buggy, and it just didn’t feel good to work with.

But with the stable release, Microsoft smoothed out a lot of the issues. Once everything is set up, the system makes a rather stable impression. The core concepts work or have a usable workaround. The hard part is actually setting everything up and discovering all the little differences, limitations, and problems that still occur. This is largely because of incomplete documentation. Sometimes parts of the documentation were only available on a private GitHub repository of a SIG-Windows member. Other times a hyperlink from the official documentation was dead. These were often bigger problems than the software itself. You encounter this most often when using the DIY approach, because you have to do the most work yourself.

At this point, I want to highlight the SIG-Windows channel on the official Kubernetes Slack workspace. If you need help with anything, they are happy to help you over there. If you need help setting up your container orchestration, have a look at our cloud service offerings.

In conclusion, whether or not Kubernetes on Windows is useful to you really depends on your specific use case. But it’s nice to have that option now.