Notice:

This post is older than 5 years – the content might be outdated.

Since its commercial launch about two years ago, Pepper the Robot has conquered such diverse spaces around the globe that a day without Pepper in the press is becoming unusual. These humanoid robots, developed by Softbank Robotics and programmed by an ecosystem of partners from all around Europe, welcome guests, answer questions, help people find their way through facilities and locate products, inform, assist, make reservations, take orders in restaurants and entertain the young and the elderly, to mention some real use-cases currently out there. Each Pepper is programmed with its own personality (robotality?) and a different set of abilities for a specific use-case.

Pepper is designed to make interaction with human beings as natural and intuitive as possible. Humans can communicate with Pepper through (almost) natural language, and Pepper responds in kind, supported by human-like gestures, by looking the person in the eye and by following them with its gaze. It can also analyse facial expressions and tone of voice, which can be used to better understand the context and to adapt its behavior to the situation.

While Pepper, both as a platform and as an individual robot, still has a lot to learn and its performance does not always please everybody, it is improving day by day.

What’s new with Pepper? It looks just the same. True. But you know, it’s the inside that counts!

What has changed?

The core has entirely changed. The way Pepper is programmed has changed in every respect: programming languages, IDE and APIs. Some abilities have changed too: a few are new, while others, like the interactive experience and the speech recognition, have been improved. All in all, a lot has changed.

Let’s talk about the Operating System

As announced at Google I/O 2016, and finally released at the end of June this year, Android is now powering Pepper.

The humanoid robot incorporates a tablet on its chest. In previous versions, this tablet was used as a display to support and enrich the interaction in both directions, and was fully controlled by the „head“, or main processor. Now the tablet takes on a major role: its applications control the whole body. The head continues to run the proprietary NaoQi OS (based on Linux) as it always has, but it now receives its instructions from the focused app running on the tablet, which is GMS (Google Mobile Services) certified and currently runs Android 6.0 on its quad-core ARM processor. Pepper is therefore programmed like an Android mobile app, in Java or Kotlin, and a background service connecting the tablet and the head lets the app control the whole robot. At the same time, this also means that applications for Pepper based on the old SDKs (Python, C++) will no longer be supported after upgrading the OS of the robot to 2.9.

Having said that, because of the position of Android in the mobile OS market and the size of its community, this promising architectural change opens plenty of new ways and possibilities besides solving previous issues of the platform. It aims to target a broader number of potential developers and likewise intends to promote the development of software for the robots, enabling a smooth combination of robot-intrinsic actions with the power of any other available services and libraries, for instance deep-learning based capabilities and integration of NLP (Natural Language Processing) tools to build more complex applications.

Moreover, the resource management has greatly improved, giving the developer high control of the robot resources in use at any given time. This way, conflicts and undesired behaviour caused by several actions trying to run simultaneously on the same resources, previously a recurring issue, are now prevented.

If the tablet is controlling the robot, what happens with the microphones and speakers? With regard to the audio resources, these have been unified: the tablet redirects its audio output to the head to be outputted through the robot speakers and the head redirects its audio input to the tablet to be processed.

Another noteworthy point concerns security: it has finally been addressed seriously, solving known severe vulnerabilities of the platform such as unauthenticated remote control or privilege escalation. This is a decisive step towards restoring trust in the robots, which was partially lost some months ago after the release of several security assessments showing these kinds of vulnerabilities.

Let’s talk about the IDE

Pepper will no longer be programmed using Softbank Robotics‘ tool „Choregraphe“. Instead, Android Studio comes into play. Thanks to a plugin that seamlessly integrates Pepper-specific tools into Android Studio, the development, testing and debugging experience is much more comfortable than it used to be.

These include tools already known to those familiar with Pepper development, such as a virtual robot emulating both the tablet and the body, a robot browser to be able to connect to your Peppers via wifi and an animation editor, among others.

The testing and debugging parts of developing a Pepper application in particular used to be rather tricky, since the software was often distributed across several components (the logic in Python, a dialog based on a QiChat topic, part of a Choregraphe behavior and JavaScript code running on websites shown on the tablet), all of them communicating asynchronously. This pain is now gone: all components of the app belong together, so they can be comfortably tested and debugged in Android Studio, either manually or using automated testing. (yay!)

Let’s get started

The following is a quick overview of the necessary steps to build an app for the humanoid robot:

- Install Android Studio and the Android SDK

- Get Pepper SDK Plugin for Android Studio

- Install the Qi SDK

- Create a new project in Android Studio

- Create a robot application

For the full Getting Started guide, visit the official documentation.

After understanding how the robot lifecycle works, in parallel to the Android Activity Lifecycle, you can have your Android app control the robot using the SDK. Keep in mind that any mobile app will run on Pepper’s tablet (whether it makes sense is another story) but this doesn’t work both ways, meaning robot apps will only run on a real robot and on the robot simulator.

Let’s talk about the SDK

The SDK provides mechanisms to enforce safe robot control and scoped execution: to control the robot, an application requires the ‚focus‘, which can be owned by just one app at a time, and that app must be running in the foreground. Once the focus has been gained, it is also guaranteed that no other app or background service will be able to interrupt the control of the robot.
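To make the focus rule more tangible, here is a minimal plain-Java sketch of single-owner focus. It does not use the SDK: `FocusManager`, `requestFocus` and `runAction` are hypothetical names invented for this illustration, and the real mechanism is certainly more involved.

```java
// Hypothetical, simplified model of the focus rule; not the SDK's implementation.
final class FocusManager {
    private String owner; // name of the app currently holding the focus, or null

    // Only a foreground app can gain the focus; it replaces the previous owner.
    synchronized boolean requestFocus(String app, boolean inForeground) {
        if (!inForeground) {
            return false; // background apps and services are refused
        }
        owner = app;
        return true;
    }

    // Robot actions run only on behalf of the current focus owner.
    synchronized boolean runAction(String app, Runnable action) {
        if (!app.equals(owner)) {
            return false; // callers without the focus cannot interrupt the robot
        }
        action.run();
        return true;
    }

    public static void main(String[] args) {
        FocusManager focus = new FocusManager();
        System.out.println(focus.requestFocus("greeter", true));  // foreground app
        System.out.println(focus.requestFocus("updater", false)); // background service
        System.out.println(focus.runAction("greeter", () -> System.out.println("waving")));
        System.out.println(focus.runAction("updater", () -> System.out.println("never printed")));
    }
}
```

In this sketch the background caller is simply refused, which mirrors the guarantee that nothing can interrupt the app that currently owns the focus.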

The API is conveniently built using a consistent usage pattern across all features, as well as a unified start and stop pattern and error handling, which makes its use quite intuitive.

Regarding multitasking and asynchronicity, sync and async flavors are provided for most of the actions, as well as mechanisms for chaining operations.
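The idea of sync and async flavors plus chaining can be sketched with the standard `java.util.concurrent.CompletableFuture`. Note that this is only an analogy: the SDK ships its own future type, and `SayAction`, `run`, `runAsync` and `greetThenAsk` are hypothetical names made up for this example.

```java
import java.util.concurrent.CompletableFuture;

final class ChainingDemo {
    // Hypothetical action offering a blocking and a non-blocking flavor.
    static final class SayAction {
        private final String text;

        SayAction(String text) {
            this.text = text;
        }

        // Sync flavor: the caller waits until the action has finished.
        String run() {
            return "said: " + text;
        }

        // Async flavor: returns immediately with a future of the result.
        CompletableFuture<String> runAsync() {
            return CompletableFuture.supplyAsync(this::run);
        }
    }

    // Chains two actions: the second one starts only after the first has completed.
    static CompletableFuture<String> greetThenAsk() {
        return new SayAction("Hello").runAsync()
                .thenCompose(first -> new SayAction("How can I help?").runAsync()
                        .thenApply(second -> first + " | " + second));
    }

    public static void main(String[] args) {
        System.out.println(new SayAction("Hi").run()); // blocking call
        System.out.println(greetThenAsk().join());     // wait for the chained result
    }
}
```

The sync flavor is convenient on a worker thread, while the async flavor with chaining keeps the UI thread of the tablet app free.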

The actions of the current API (v3, released on 29th of June 2018) are grouped into the following 5 categories:

- Perceptions: to control the focus on humans and retrieve characteristics such as the human face picture, the estimated age and gender, the estimated basic emotion of the human (based on facial features, voice intonation, touch and speech semantics), the excitement state (based on voice and movements), the attention state (based on the head orientation, and the gaze direction) and the engagement intention state (based on trajectory, speed, head orientation). It is also possible to make Pepper focus on a particular human and manually control which human must be engaged following a specific engagement strategy.

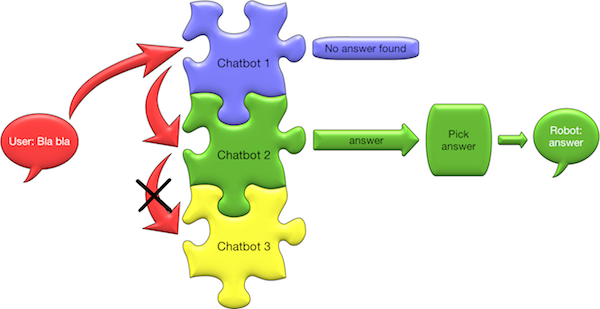

- Conversation: to make the robot chat with humans by querying one or several chatbots, either based on QiChat or using any other framework, e.g. Dialogflow, Botfuel or Microsoft Bot Framework. The use of multiple chatbots is now supported since, although most people intuitively think that the robot can answer anything, no chatbot can actually understand and give a good answer to every possible user input. Chaining several chatbots (see picture below) that each perform well in their own domain should be a better approach than trying to make a single chatbot answer everything and re-developing already existing content such as Wikipedia, weather and so on every time.

- Motion: to control everything related to movement, not only moving around actions (trying to safely reach a target location) but also animations (execute a series of movements with the limbs and the head, or follow a predefined trajectory), localization and mapping actions, based on SLAM (Simultaneous Localization And Mapping).

- Knowledge: this module provides a knowledge base and an inference engine to build a knowledge-based system. The knowledge service handles a graph-based database, where each knowledge graph is composed of nodes, grouped by triplets.

- Autonomous Abilities: to control the subtle background movements (a.k.a. idle movements) and blinking that the robot performs autonomously in the absence of interaction or other running actions.
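The chatbot chaining described under Conversation can be illustrated with a small plain-Java sketch. It is independent of the SDK: each chatbot is modelled as a function that either offers a reply or returns nothing, and `ChatbotChain`, `replyTo` and the two toy chatbots are hypothetical names and rules chosen for illustration only.

```java
import java.util.List;
import java.util.Optional;
import java.util.function.Function;

final class ChatbotChain {
    // Each hypothetical chatbot maps a phrase to a reply, or to empty if it has no good answer.
    private final List<Function<String, Optional<String>>> chatbots;

    ChatbotChain(List<Function<String, Optional<String>>> chatbots) {
        this.chatbots = chatbots;
    }

    // Asks the chatbots in priority order and returns the first reply offered.
    String replyTo(String phrase) {
        for (Function<String, Optional<String>> bot : chatbots) {
            Optional<String> reply = bot.apply(phrase);
            if (reply.isPresent()) {
                return reply.get();
            }
        }
        return "Sorry, I did not understand that."; // no chatbot had an answer
    }

    // Builds a chain of two toy chatbots: a scripted greeter and a weather bot.
    static ChatbotChain demo() {
        Function<String, Optional<String>> scripted = phrase ->
                phrase.equalsIgnoreCase("hello")
                        ? Optional.of("Hello, I am Pepper!")
                        : Optional.empty();
        Function<String, Optional<String>> weather = phrase ->
                phrase.toLowerCase().contains("weather")
                        ? Optional.of("Let me check the forecast.")
                        : Optional.empty();
        return new ChatbotChain(List.of(scripted, weather));
    }

    public static void main(String[] args) {
        ChatbotChain chain = demo();
        System.out.println(chain.replyTo("hello"));
        System.out.println(chain.replyTo("What is the weather like?"));
        System.out.println(chain.replyTo("Tell me a joke"));
    }
}
```

Putting the scripted chatbot first keeps predictable answers for the expected phrases, while the more specialised chatbots only handle what the script does not cover.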

Let’s see some (Java) Code

To get an idea of what the APIs look like, here are some snippets of code for basic actions. Note that the Qi context is necessary for most of the robot actions, and is obtained by the focused activity when it receives the robot focus.

- Run an animation (series of movements, programmed using a timeline editor to control the actuators and saved as a resource):

      // Create an animation object
      Future<Animation> animation = AnimationBuilder.with(qiContext)
              .withResources(animationResourceId)
              .buildAsync();

      // Chain the composition of the animate action
      animation.andThenCompose(animation1 -> {
          Future<Animate> animate = AnimateBuilder.with(qiContext)
                  .withAnimation(animation1)
                  .buildAsync();

          // Add a consumer to the action execution and run the action asynchronously
          animate.andThenConsume(animate1 -> animate1.async().run());
          return animate;
      });

- Start a chat (conversation action) based on a scripted QiChatbot and an external chatbot:

- A QiChatbot uses a topic or list of topics written in QiChat Language and utilises both embedded speech recognition (works even offline) and remote speech recognition, which provides considerably better results and is necessary if you want to be able to recognize „free text“, i.e. text not defined in advance in the script, but requires internet access.

- On the other hand, Dialogflow (formerly Api.ai), owned by Google, provides a natural language user interface using machine learning. The self-implemented DialogflowChatbot class from this example extends the class BaseChatbot from the SDK and overrides its methods to apply the desired logic, query the cloud service and implement the reply reactions. The same approach can be used for any other chatbot. In this case, the conversational interface must be designed through the Dialogflow console in the browser.

      // Create a topic
      Topic mainTopic = TopicBuilder.with(qiContext)
              .withResource(localTopicResourceId)
              .build();

      // Create QiChatbot
      QiChatbot qichatbot = QiChatbotBuilder.with(qiContext)
              .withTopics(mainTopic)
              .build();

      // Create DialogFlow chatbot
      DialogflowChatbot dialogFlowChatbot = new DialogflowChatbot(qiContext, DialogFlowConfig.ACCESS_TOKEN);

      // Create the Chat action.
      Chat chat = ChatBuilder.with(qiContext)
              .withChatbot(qichatbot, dialogFlowChatbot)
              .build();

      // Set Chat listeners
      chat.addOnStartedListener(() -> {
          // Do something when the chat is started
      });
      chat.addOnHeardListener(phrase -> i("The robot heard the following phrase: %s", phrase.getText()));
      ...
      // Run the Chat action asynchronously
      chatFuture = chat.async().run();

Let’s see Pepper in action!

See one of our Peppers in action at our Cologne office.

[youtube https://www.youtube.com/watch?v=Ic0AM0_4Ftg&w=560&h=315]

In a social robot, the interaction with humans plays a crucial role. For those of you who already knew Pepper, in this video you will appreciate how the interaction has become much more intuitive for the user just by providing better feedback about when the robot is listening or not and showing what it has understood, together with the improvement of the speech recognition engine. You can also see that by integrating and combining powerful services and sources of knowledge a lot of new use-cases for the humanoid robot become possible.

Conclusion

In this blog post I have tried to illustrate the current development status of Pepper the robot and all recent major changes in the platform. Despite the hurdles of migration, namely having to fully rewrite old code and working with APIs that are still in the process of becoming stable, we see the platform developing in the right direction, evolving rapidly and offering new and attractive features when it comes to building specific software for the social humanoid robot.

There is, however, still a lot to do, so stay tuned to learn about further development of Pepper, and get in touch if you have ideas you would like to implement with Pepper!

Hi Silvia, how did you manage to update your pepper to version 2.9? We just got a new Pepper and I can’t find any information about this version. Can you help me?