Notice:

This post is older than 5 years – the content might be outdated.

Before I got more familiar with Microsoft Azure and its PaaS components such as Azure Analysis Services, I routinely stuck to Microsoft’s on-premises BI stack. This constrained view of the technology also limited how I thought about re-interpreting familiar architectures with cloud-based components.

What actual scenario do I want to point out?

Once, we were all cloud virgins… imagine the following situation: you have your SQL Server Data Warehouse on-premises with regular relational tables and you are using a Tabular Model on top of it. You are building fancy dashboards and hosting them on your Power BI Report Server, or you have them running in the Power BI service. Doesn’t sound new to you, right!?

Now you can do this sort of thing in Azure. It’s the Modern Data Warehouse (MDWH) approach (for more details on MDWH check this out). “I know!” most would say by now. However, what I have experienced in most customer contexts is that people only know the rigid, classical variant: a relational component, either Azure SQL (Managed Instance) or Azure SQL Data Warehouse, sits on top of the data lake, and an Azure Analysis Services instance hosting some Tabular Models is attached on top of that.

This is what such a typical MDWH cloud architecture could look like:

Is there maybe something we don’t see?

There are many good reasons to use relational components, such as SQL DW for instance, especially for big workloads, considering scalability, reliability, as well as available skillsets in the SQL context. Another reason is to have an infrastructure that helps you delegate tasks to dedicated teams in the context of ETL or data reliability and consistency. I really do not want to understate the importance of one accurate and trustworthy source of truth for BI solutions.

On the other hand, we may also face scenarios where we do not need to store data historically (SCD), or where our master data gets fully reloaded each day because the source systems dictate it. A big data context could also be legit, where we may not need a classical data modeling approach. Maybe we simply want to visualize a massive amount of data. For such scenarios, a relational component between the data lake and Azure Analysis Services may cause unnecessary overhead.

So why not lift our file-based data directly to Azure Analysis Services!?

You may ask yourself, how can this be done?! Is it even possible? In the following sections, I want to briefly outline how, indeed, this can be achieved.

How to feed Azure Analysis Services directly from Azure Blob Storage or Azure Data Lake Store

Assuming we have a nice set of files available in our storage, getting started is pretty straightforward. The good news is that all required connectors are available in the current version of SQL Server Data Tools (SSDT) for Tabular projects.

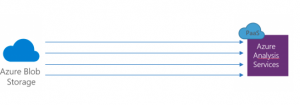

This has some straightforward implications on our possible architecture, which could simply look like the following:

However, you have to pay attention to how files are recognized by the storage connectors. The files in a particular storage location are not recognized as individual tables. Instead, the entire contents of a blob storage or a particular Data Lake Store are presented as one tabular structure:

Now if you want to create tabular models out of your files you have multiple approaches to do so. Either you accomplish the task programmatically by using Tabular Object Model (TOM) or you choose to work with SSDT, in particular, the Power Query Editor and the Advanced Editor.

In this blog post, I want to sketch the approach via SSDT and the Power Query Editor. Please note that you need at least the May 2017 release of SSDT to follow the approach briefly presented here. Compatibility level 1400 is of major importance!

In general, if we talk about large-scale tabular models, we can think of an Azure-hosted model with a cache size of 400 GB. Thanks to the VertiPaq engine, we can achieve compression ratios of up to 10x, so we could think of loading terabytes of file data into our model.
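As a quick back-of-the-envelope check of the figures above, here is a minimal Python sketch. It assumes the best case from the text (400 GB of cache, 10x compression) and ignores the memory needed for processing and querying, so the result is an optimistic upper bound rather than a sizing recommendation. The function name is made up for illustration.

```python
def max_source_size_gb(cache_gb: float, compression_ratio: float) -> float:
    """Rough upper bound on raw source data that fits into the model cache."""
    return cache_gb * compression_ratio


# Figures from the text: 400 GB cache, up to 10x compression.
estimate_gb = max_source_size_gb(400, 10)
print(f"~{estimate_gb / 1000:.0f} TB of source data")  # prints "~4 TB of source data"
```

With a lower compression ratio, which is likely for data with many distinct values, the estimate shrinks accordingly.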

There are three major aspects which you need to take into consideration when working with this file-based approach in Azure Analysis Services:

1. Work with subsets of the data

If we consider working with terabytes of data spread across many larger and smaller files, we will, in most cases, not have the resources to load all that data during development, because SSDT would try to load all of it into your workspace server. This can cause problems on the storage level as well as on the processing side. Therefore, it is advisable to work with a representative subset of your data and to switch the source to the original files later on, during deployment.

2. Keep data management and performance requirements in mind

A possible big data scenario would include loading a vast number of files of all sizes into a Tabular 1400 model. In this context we face a few challenges:

- Handle metadata correctly (table names, headers, etc. as possible source files may be lacking in terms of metadata)

- Combine files so that all data needed is together

- Find a way to process these amounts of data efficiently

How can we overcome these challenges?

The first step is to create a source query for our desired starting table by using the Query Editor in SSDT. In the next step, we can combine the remaining files for the table. During these steps, the Query Editor automatically creates some expressions. Be careful here: with every new table (based on files) you add, the Query Editor adds more and more expressions. So, along the way to your final Tabular Model, you will definitely need to do some cleaning to avoid clutter. Try to rely on global functions to do the work for you. These functions can then also handle the necessary metadata, such as object names and headers. Technically, this is all handled in M functions, but since I only want to give you a rough idea, I am not digging into the details.
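To give a rough idea of what such a global function has to do, here is a minimal sketch of the logic. It is written in Python purely for illustration; in the actual model this logic lives in M expressions. It assumes a strongly simplified storage listing of (file name, file content) pairs, and all file names, column names and function names are hypothetical.

```python
import csv
import io


def read_file(content, columns, has_header=False, delimiter=","):
    """Parse one delimited file; fall back to the supplied column names
    when the file itself carries no header row."""
    rows = list(csv.reader(io.StringIO(content), delimiter=delimiter))
    if has_header and rows:
        header, rows = rows[0], rows[1:]
    else:
        header = columns
    return [dict(zip(header, row)) for row in rows]


def combine_files(listing, prefix, columns, has_header=False, delimiter=","):
    """Combine all files whose name starts with `prefix` into one table."""
    combined = []
    for name, content in listing:
        if name.startswith(prefix):
            combined.extend(read_file(content, columns, has_header, delimiter))
    return combined


# Hypothetical listing: one entry per file, not one table per file.
listing = [
    ("customer_1.csv", "1,Alice\n2,Bob\n"),
    ("customer_2.csv", "3,Carol\n"),
    ("store_1.csv", "10,Berlin\n"),
]

customers = combine_files(listing, "customer_", ["id", "name"])
print(len(customers))  # prints 3
```

The point of the design is that one reusable function covers every table: only the file name prefix and the column names change, which keeps the number of expressions in the model small.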

Let’s assume we have loaded our (small) required tables into SSDT and finished designing the model. Now is the time to deploy it on Azure!

3. Parallelized incremental loads on the deployed Tabular Model

Once the model is deployed, we switch to SQL Server Management Studio. SSMS allows us to connect directly to our Tabular Model and to perform several operations against the deployed model, such as incremental data loads for efficient data processing.

For incremental data loads, we need to create partitions in the tables within AAS. Partitions in the AAS context will not improve query performance, but they will definitely improve processing time because they allow data to be processed in parallel. The Microsoft Analysis Services Product Team performed a pretty impressive test in this context. They managed to load 1 TB of TPC-DS data (a decision support benchmark data set) in less than 12 hrs by using partitions on their S9 AAS instance (28 partitions processed in parallel). In contrast, a full load without partitioning (a single partition) takes more than 21 hrs.
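To make the partition idea a bit more concrete, here is a minimal Python sketch that assembles a TMSL `sequence` command which refreshes several partitions of one table with a given degree of parallelism. The database, table and partition names are made up for illustration, and the generated JSON should be checked against the TMSL reference before it is executed, for example from an XMLA query window in SSMS.

```python
import json


def build_parallel_refresh(database, table, partitions, max_parallelism):
    """Build a TMSL sequence command refreshing the given partitions."""
    refresh = {
        "refresh": {
            "type": "full",
            "objects": [
                {"database": database, "table": table, "partition": name}
                for name in partitions
            ],
        }
    }
    return {
        "sequence": {
            "maxParallelism": max_parallelism,
            "operations": [refresh],
        }
    }


# Hypothetical names; 28 partitions as in the product team's test.
partition_names = [f"store_sales_{i}" for i in range(1, 29)]
command = build_parallel_refresh("SalesModel", "store_sales", partition_names, 28)
print(json.dumps(command, indent=2))
```

For an incremental load, the same command would simply list only the partitions that received new files.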

What are factors that determine the processing speed?

As the Analysis Services Product Team also stated, excessive partitioning will not produce noteworthy gains. More sophisticated data sources, however, will definitely help, and that brings us back to components like SQL DW. The team was able to load the entire 1 TB data set into SQL DW via PolyBase within 2 hrs and to transfer the data to AAS within 9 hrs.
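Putting the reported durations side by side, a small Python sketch shows the rough speedups. The numbers are the bounds quoted in the text ("more than 21 hrs", "less than 12 hrs", "within 2 hrs" plus "within 9 hrs"), so the results are lower bounds, not measurements of my own.

```python
def speedup(baseline_hours: float, candidate_hours: float) -> float:
    """How many times faster the candidate is compared to the baseline."""
    return baseline_hours / candidate_hours


single_partition_h = 21   # "more than 21 hrs", so a lower bound
partitioned_files_h = 12  # "less than 12 hrs", so an upper bound
via_sql_dw_h = 2 + 9      # PolyBase load into SQL DW + transfer to AAS

print(f"partitioned files: > {speedup(single_partition_h, partitioned_files_h):.2f}x")  # > 1.75x
print(f"via SQL DW:        > {speedup(single_partition_h, via_sql_dw_h):.2f}x")         # > 1.91x
```

The gap between the two faster options is small, which is why the choice should depend on whether the relational layer is needed for other reasons.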

Summary

At the end of the day, use cases define which technology setup makes the most sense. Beyond the commonly known approaches, however, we have seen that Azure Analysis Services can consume data from a variety of sources, including storage services like Azure Blob Storage or Azure Data Lake Store. You can find detailed instructions on how to rebuild this solution in several blog posts by the Microsoft Azure Analysis Services Product Team:

- https://blogs.msdn.microsoft.com/analysisservices/2017/05/15/building-an-azure-analysis-services-model-on-top-of-azure-blob-storage-part-1/

- https://blogs.msdn.microsoft.com/analysisservices/2017/05/30/building-an-azure-analysis-services-model-on-top-of-azure-blob-storage-part-2/

- https://blogs.msdn.microsoft.com/analysisservices/2017/06/22/building-an-azure-analysis-services-model-on-top-of-azure-blob-storage-part-3/

If you have questions about this kind of setup or if you would like to try this out, feel free to contact us. Besides that, thanks to the Analysis Services Product Team for their efforts in sharing their knowledge!

What are the options if I want to use the Azure stack only for heavy data processing but, in the end, still want to do reporting (let’s say with Power BI) on an on-prem tabular instance? Does Azure support that?

Hi Jagbir, while an SSAS tabular model is being processed, the Azure Analysis Services (AAS) instance itself carries the heavy workload, including loading new data, recreating relationships, computing calculated columns, recreating hierarchies, etc. After processing is done, the “data” is cached in the model via VertiPaq. Transferring that model to an on-prem Analysis Services (AS) instance would mean transferring data from the RAM of the AAS instance to your local instance’s RAM, which is not possible in this context. If you consider a hybrid setup with workload shifting in mind, you would, depending on the use case, try to let your data processing engine and your data lake do the work by excluding unnecessary data and aggregating where possible, so that the workload on your on-premises AS instance is reduced.

Cheers,

Eli

Is it possible to use multiple data sources for SSAS tabular simultaneously? For instance, read dimensions from SQL Server tables and fact tables from Blob Storage (files)?