In this blog post, we want to give you an overview of how we implemented a proof of concept (PoC) for smart meeting rooms, what we plan to do next, and how Azure did or did not help us along the way.

This article serves as an introduction to a planned series of blog posts with more in-depth information on topics like the sensor/device setup, our integration of machine learning, and our experiences with the Azure platform.

First There Was a Lack of Space, Then Covid-19

The meeting rooms at our offices were usually in high demand and rarely available for spontaneous use. Sometimes a room was free on another floor, but nobody bothered to check its status. Solving these challenges is what we had in mind when we started this project.

With the impact of Covid-19, however, a lack of available meeting rooms is no longer really an issue, as we shifted to fully remote work (quite effortlessly, if we may say so). Nonetheless, other considerations became very important for those who still depend on working at the office:

- How long has a particular room been empty?

- How recently has a room been thoroughly ventilated?

- How many people are recommended to be in a room at the same time?

We began working on the PoC in 2019, long before anyone had heard of Covid-19, yet what we have developed so far already lets us address those questions. Let’s see what we have built.
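The post does not say how these questions are answered. The following is a minimal sketch assuming two kinds of time-stamped data: presence events and CO₂ readings. "Empty for" is measured from the last detected movement. "Ventilated" is approximated as the last time CO₂ fell to a near-outdoor level. The 600 ppm threshold and all function names are hypothetical.

```python
from datetime import datetime, timedelta
from typing import Optional, Sequence, Tuple

PresenceEvent = Tuple[datetime, bool]  # (time, movement detected?)
Co2Reading = Tuple[datetime, float]    # (time, CO2 concentration in ppm)

# Hypothetical threshold: CO2 close to outdoor levels suggests the room was aired.
VENTILATED_PPM = 600.0


def time_since_last_movement(
    events: Sequence[PresenceEvent], now: datetime
) -> Optional[timedelta]:
    """How long a room has been empty: time since the last detected movement.

    Returns None if no movement has ever been recorded.
    """
    movements = [ts for ts, moved in events if moved]
    return now - max(movements) if movements else None


def last_ventilated(
    readings: Sequence[Co2Reading], threshold_ppm: float = VENTILATED_PPM
) -> Optional[datetime]:
    """Most recent time the CO2 level was at or below the threshold."""
    fresh = [ts for ts, ppm in readings if ppm <= threshold_ppm]
    return max(fresh) if fresh else None
```

A CO₂-based heuristic is only a proxy for ventilation. It works without any extra hardware, though, because the base units already measure CO₂.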

The Framework We Gave Ourselves

The idea of a smart building, including things like

- meeting rooms that recommend deleting calendar events if no one is present,

- parking spots that notify commuters when they become available, and

- coffee machines that report their lack of milk or beans to the person on duty,

is not new. Our specialists for embedded devices were particularly interested in combining automated approaches to IoT computing with modern cloud-based services for centralized management. We believe that before we recommend an IoT setup to our customers, we should be confident that the proposed solution actually works. So we wanted to put the Azure IoT suite to the test. Whenever possible, we wanted to use Azure services for our requirements while still keeping open-source projects in mind. The result is a mix of exciting technologies that we want to share with you in this blog post series.

Architectural Overview

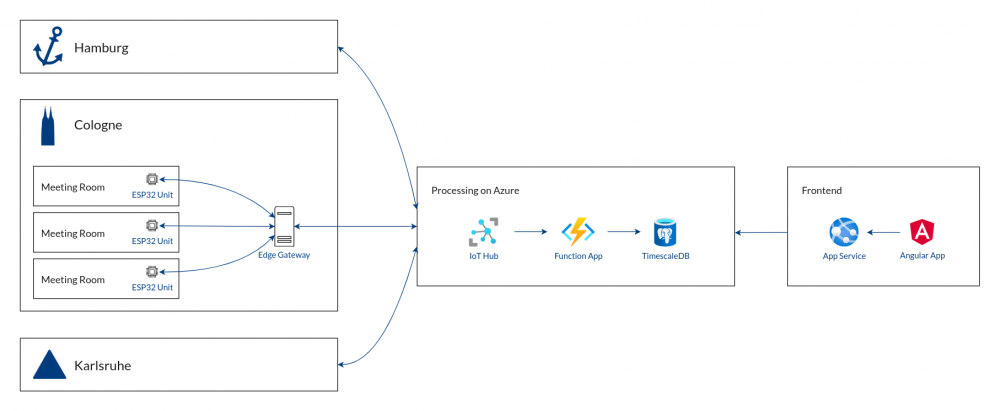

We split this post into four parts: sensors, intermediate communication, cloud processing, and the front end. In the diagram above, they are arranged from left to right.

We start in a single meeting room, then look at one location, move on to Azure and finish with our front-end product.

Basic Units: ESP32 and Sensors

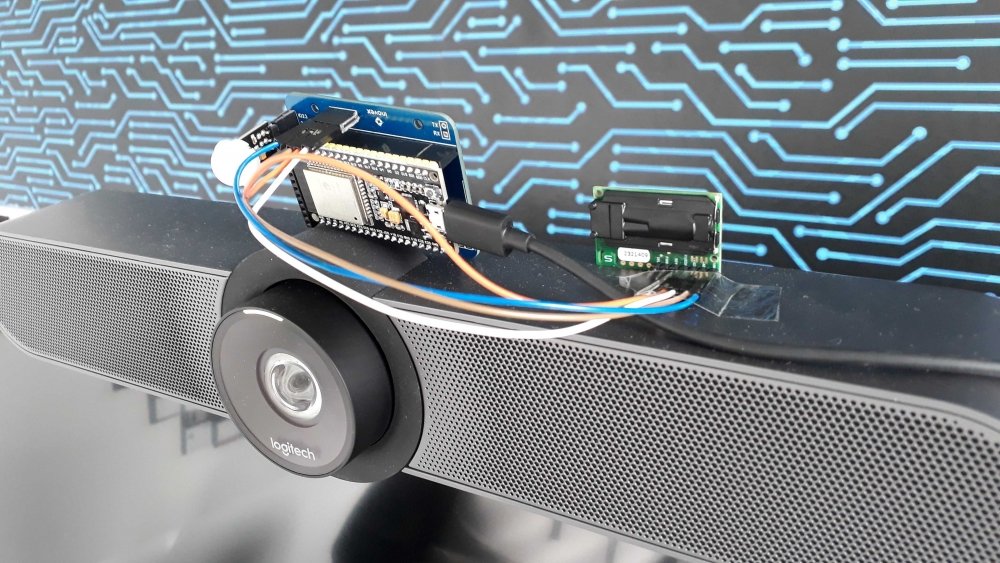

As shown in the architecture overview, every room tracked by our service gets a base unit. This unit consists of an ESP32 microcontroller equipped with several sensors:

- A presence sensor that reacts to movement

- A combined CO₂ / humidity / temperature sensor

We developed the ESP32 firmware specifically for our needs and use OTA (over-the-air) updates to upgrade the units when we implement new features.

We designed and 3D-printed a case for the controller and sensor unit ourselves. It will sit conveniently on top of the TV screens most of our meeting rooms have installed. And yes, we thought about the heat from the screen and that it might mess with our temperature measurements. The case design will take this into account and should prevent faulty reports.

The WiFi-capable microcontroller lets the unit communicate with the outside world, so the only cable it needs is for power. Setup is as simple as mounting the box on the TV and plugging it in. The microcontroller connects to the WiFi and registers itself as a new device. It does not know which meeting room it was placed in, however. Let’s see how we fix that.
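To make the data flow concrete, here is a hypothetical shape for the telemetry a base unit might send, modelled in Python. The real firmware runs on the ESP32 and its payload format is not described in the post, so every field name here is an assumption. The `room` field stays empty right after power-up, because the unit does not yet know where it is.

```python
import json
from dataclasses import asdict, dataclass
from typing import Optional


@dataclass
class SensorMessage:
    """Hypothetical telemetry payload of one base unit (field names are assumptions)."""
    device_id: str              # assumption: e.g. derived from the ESP32's MAC address
    timestamp: int              # Unix time in seconds
    presence: bool              # presence sensor: movement detected
    co2_ppm: float              # combined CO2 / humidity / temperature sensor
    humidity_pct: float
    temperature_c: float
    room: Optional[str] = None  # unknown until the device is linked to a room

    def to_json(self) -> str:
        # Compact JSON keeps messages small on the wire.
        return json.dumps(asdict(self), separators=(",", ":"))

    @classmethod
    def from_json(cls, raw: str) -> "SensorMessage":
        return cls(**json.loads(raw))
```

Serialized, a freshly registered unit reports `"room":null` until it has been assigned.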

Intermediate Communication

In the near future, we want to introduce one edge gateway per location that serves as a central intermediary between the controllers and the Azure IoT Hub. Since the IoT Hub supports both direct and indirect communication via multiple protocols, a mixed setup would also work. The edge gateway can analyze, pre-filter, and aggregate sensor messages.
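The post does not describe how the gateway pre-filters and aggregates. One common approach is to drop implausible readings and then summarize each device per fixed time window. The following is a minimal sketch assuming that approach. The plausibility bounds, the 5-minute window, and the summary fields are all hypothetical.

```python
from dataclasses import dataclass
from statistics import mean
from typing import Dict, Iterable, List, Tuple


@dataclass
class Reading:
    device_id: str
    timestamp: int        # Unix time in seconds
    presence: bool
    co2_ppm: float
    temperature_c: float


# Hypothetical plausibility bounds used for pre-filtering.
CO2_RANGE = (250.0, 10_000.0)
TEMP_RANGE = (-20.0, 60.0)


def plausible(r: Reading) -> bool:
    return (CO2_RANGE[0] <= r.co2_ppm <= CO2_RANGE[1]
            and TEMP_RANGE[0] <= r.temperature_c <= TEMP_RANGE[1])


def aggregate(readings: Iterable[Reading], window_s: int = 300) -> List[dict]:
    """Drop implausible readings, then summarize each device per fixed time window."""
    buckets: Dict[Tuple[str, int], List[Reading]] = {}
    for r in readings:
        if not plausible(r):
            continue  # pre-filter: e.g. a sensor glitch reporting 0 ppm
        window_start = r.timestamp - r.timestamp % window_s
        buckets.setdefault((r.device_id, window_start), []).append(r)

    summaries = []
    for (device_id, window_start), rs in sorted(buckets.items(), key=lambda kv: kv[0]):
        summaries.append({
            "device_id": device_id,
            "window_start": window_start,
            "presence": any(r.presence for r in rs),  # any movement in the window counts
            "co2_ppm": round(mean(r.co2_ppm for r in rs), 1),
            "temperature_c": round(mean(r.temperature_c for r in rs), 1),
            "samples": len(rs),
        })
    return summaries
```

Treating presence as "any movement in the window" errs on the side of reporting a room as occupied, which seems the safer default for a booking display.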

We use Mender to manage our gateways. A module running on every gateway handles device registration: whenever a new microcontroller registers itself, it is linked to the room it was placed in. As soon as that is done, the sensor knows its location and reports it in its messages.
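The registration module itself is not shown in the post. The following is a minimal sketch of the idea as described: devices register without a room, someone links them to a room, and the resulting assignment is passed back to the device, which includes the room in every later message. `RoomRegistry`, `Device`, and the assignment format are hypothetical names used only for illustration.

```python
from typing import Dict, List, Optional


class RoomRegistry:
    """Hypothetical gateway-side registry mapping device IDs to rooms."""

    def __init__(self) -> None:
        self._rooms: Dict[str, Optional[str]] = {}

    def register(self, device_id: str) -> None:
        # A new device starts out without a room; re-registering keeps an existing link.
        self._rooms.setdefault(device_id, None)

    def unassigned(self) -> List[str]:
        return sorted(d for d, room in self._rooms.items() if room is None)

    def link(self, device_id: str, room: str) -> dict:
        """Assign a room and return the assignment to be sent to the device."""
        if device_id not in self._rooms:
            raise KeyError(f"unknown device {device_id}")
        self._rooms[device_id] = room
        return {"device_id": device_id, "room": room}


class Device:
    """Hypothetical device-side view: remembers its room once assigned."""

    def __init__(self, device_id: str) -> None:
        self.device_id = device_id
        self.room: Optional[str] = None

    def apply_assignment(self, assignment: dict) -> None:
        self.room = assignment["room"]

    def message(self, **measurements: float) -> dict:
        # From now on the room travels with every message.
        return {"device_id": self.device_id, "room": self.room, **measurements}
```

For example, a unit registered as `"esp32-a1"` shows up in `unassigned()` until `link("esp32-a1", "Tron")` is called. After that, its messages carry `"room": "Tron"`.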

To set up new gateway devices, we use our custom-built gateway provisioning service, which automates deployment. We just plug in a new device, authorize it with one click, and wait for the deployment to finish. The service registers the new edge gateway with Mender and Azure IoT Hub and fetches a device-specific certificate from our Vault server.
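The provisioning service is custom-built and not published. The following sketch only illustrates the order of the steps described above, with authorization gating everything else. The three client interfaces are hypothetical stand-ins defined with `typing.Protocol`. They are **not** the real Mender, Azure IoT Hub, or Vault APIs, and in practice each would wrap the respective service's actual SDK or HTTP API.

```python
from dataclasses import dataclass, field
from typing import List, Protocol


# Hypothetical client interfaces; NOT the real Mender, IoT Hub or Vault APIs.
class DeviceManager(Protocol):
    def accept_device(self, device_id: str) -> None: ...


class DeviceRegistry(Protocol):
    def create_device(self, device_id: str) -> None: ...


class CertificateAuthority(Protocol):
    def issue_certificate(self, device_id: str) -> str: ...


@dataclass
class ProvisioningResult:
    device_id: str
    certificate_pem: str
    completed_steps: List[str] = field(default_factory=list)


def provision_gateway(
    device_id: str,
    authorized: bool,
    mender: DeviceManager,
    iot_hub: DeviceRegistry,
    vault: CertificateAuthority,
) -> ProvisioningResult:
    """Run the provisioning steps for a newly plugged-in gateway."""
    # The one-click authorization gates everything else.
    if not authorized:
        raise PermissionError(f"gateway {device_id} has not been authorized")
    steps: List[str] = []
    mender.accept_device(device_id)            # make the gateway manageable via Mender
    steps.append("mender")
    iot_hub.create_device(device_id)           # create its identity in Azure IoT Hub
    steps.append("iot_hub")
    cert = vault.issue_certificate(device_id)  # device-specific certificate from Vault
    steps.append("vault")
    return ProvisioningResult(device_id, cert, steps)
```

Passing the clients in as parameters makes the flow easy to test with in-memory fakes before pointing it at real services.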

Mender allows us to push firmware updates whenever new features, bug fixes or changes are added to the existing code base. The process is mostly automated:

- Firmware roll-outs are manually initiated via Mender

- Gateways continuously check for available updates

- They download the latest firmware

- They install it to a separate partition

- They restart themselves, booting from the new partition
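The steps above follow the dual-partition (A/B) update pattern. The following toy model shows why it keeps downtime low: the new firmware is written to the partition that is not running, and the gateway simply boots from it afterwards, so the previous image stays intact. This is an illustration of the pattern only, not Mender's client implementation. `Gateway`, `check_for_update`, and the `download` callable are hypothetical, and `published` stands for the version an operator rolled out.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, Optional, Tuple

Image = Tuple[str, bytes]  # (firmware version, firmware bytes)


@dataclass
class Gateway:
    """Toy model of a gateway with two root partitions, A and B."""
    active: str = "A"
    partitions: Dict[str, Optional[Image]] = field(
        default_factory=lambda: {"A": ("1.0.0", b""), "B": None}
    )

    @property
    def inactive(self) -> str:
        return "B" if self.active == "A" else "A"

    @property
    def version(self) -> str:
        return self.partitions[self.active][0]

    def check_for_update(self, published: str, download: Callable[[str], bytes]) -> bool:
        """One polling cycle: returns True if an update was installed."""
        if published == self.version:
            return False                       # already up to date
        firmware = download(published)         # fetch the rolled-out firmware
        self.partitions[self.inactive] = (published, firmware)  # write to the idle partition
        self.reboot_into(self.inactive)        # restart from the new partition
        return True

    def reboot_into(self, partition: str) -> None:
        self.active = partition
```

After an update from 1.0.0 to 1.1.0, partition B is active and partition A still holds 1.0.0. The next update goes back to A.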

With this setup, updates are relatively stress-free and downtime stays low. In case you are wondering: we run the Mender server in an Azure Kubernetes cluster, which is the recommended setup for a production system.

Processing on Azure

After the edge gateway or the device sends messages to the IoT Hub (via either MQTT or HTTPS), there are a few options for how to proceed, depending on your needs. We went with the Event Hub-compatible endpoint provided by the IoT Hub to process the messages further. This allows for fast response times; you could call it our “hot” path. Before that, we also maintained a cold path by writing the same data to Blob Storage. However, we soon noticed that the dual processing was not necessary for our needs: the hot path sufficed.

The Event Hub-compatible endpoint triggers an Azure Function every time data arrives (messages can also be buffered and handled in batches). The function unpacks the JSON data of the messages and saves the fields we need into a TimescaleDB database. The database is a managed PostgreSQL instance, hosted (you guessed it) on Azure. We used to operate a Cosmos DB instance, as it was (of course) recommended by Microsoft. After a few weeks, however, we noticed that the cost-benefit ratio was not what we had hoped for: Cosmos DB was far too expensive for our budget. Even though PostgreSQL is not as natively integrated with Azure Functions as Cosmos DB, it works just fine for us, and it costs far less.
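The post does not say which language the Function is written in or what the table looks like. The following sketch shows the "unpack JSON, keep the needed fields" step in Python as a pure function over a batch of message bodies, so it runs without the Azure Functions runtime. The `sensor_data` table and all field names are hypothetical. `create_hypertable` is TimescaleDB's function for turning a regular table into a time-partitioned hypertable.

```python
import json
from datetime import datetime, timezone
from typing import Iterable, List, Optional, Tuple

# Hypothetical schema; the authors' actual table layout is not described.
CREATE_TABLE = """
CREATE TABLE IF NOT EXISTS sensor_data (
    time          TIMESTAMPTZ NOT NULL,
    device_id     TEXT        NOT NULL,
    room          TEXT,
    presence      BOOLEAN,
    co2_ppm       DOUBLE PRECISION,
    humidity_pct  DOUBLE PRECISION,
    temperature_c DOUBLE PRECISION
);
SELECT create_hypertable('sensor_data', 'time', if_not_exists => TRUE);
"""

INSERT_SQL = (
    "INSERT INTO sensor_data "
    "(time, device_id, room, presence, co2_ppm, humidity_pct, temperature_c) "
    "VALUES (%s, %s, %s, %s, %s, %s, %s)"
)

Row = Tuple[datetime, str, Optional[str], bool, float, float, float]


def to_rows(bodies: Iterable[bytes]) -> List[Row]:
    """Turn a batch of raw message bodies into rows for INSERT_SQL."""
    rows: List[Row] = []
    for body in bodies:
        try:
            msg = json.loads(body)
            rows.append((
                datetime.fromtimestamp(msg["timestamp"], tz=timezone.utc),
                msg["device_id"],
                msg.get("room"),  # may be missing for not-yet-linked devices
                bool(msg["presence"]),
                float(msg["co2_ppm"]),
                float(msg["humidity_pct"]),
                float(msg["temperature_c"]),
            ))
        except (ValueError, KeyError, TypeError):
            continue  # skip malformed messages instead of failing the whole batch
    return rows
```

With a PostgreSQL driver such as psycopg2, the rows could then be written in one round trip with `cursor.executemany(INSERT_SQL, rows)`. Skipping malformed messages instead of raising avoids redelivering an entire batch because of a single bad message.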

After this step, the data is sufficiently prepared for usage by our front-end setup.

Getting the Information Out There

We developed a simple ASP.NET application that connects to the database and provides a RESTful API for accessing locations, rooms, sensors, and sensor data. We also integrated some Google APIs, e.g. to read our rooms’ calendar data, so we could provide more useful information. The application is packaged in a container that runs on an Azure Web App. Our first version of the app was not containerized, but we quickly switched after Kudu deployments did not really get us anywhere.
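The actual routes of the ASP.NET API are not given in the post. As a rough picture of how the four resource types could nest, the following sketch maps hypothetical REST paths to handler names with a tiny stdlib router. The paths and handler names are assumptions chosen only to illustrate the hierarchy (location → room → sensor → data).

```python
import re
from typing import Dict, List, Optional, Pattern, Tuple

# Hypothetical resource paths; the real API's routes are not described in the post.
ROUTES: List[Tuple[str, str]] = [
    (r"^/locations$", "list_locations"),
    (r"^/locations/(?P<location_id>[^/]+)/rooms$", "list_rooms"),
    (r"^/rooms/(?P<room_id>[^/]+)/sensors$", "list_sensors"),
    (r"^/sensors/(?P<sensor_id>[^/]+)/data$", "sensor_data"),
]
COMPILED: List[Tuple[Pattern[str], str]] = [(re.compile(p), name) for p, name in ROUTES]


def resolve(path: str) -> Optional[Tuple[str, Dict[str, str]]]:
    """Return (handler name, path parameters) for a request path, or None."""
    for pattern, name in COMPILED:
        match = pattern.match(path)
        if match:
            return name, match.groupdict()
    return None
```

Keeping sensor data under its sensor, and sensors under their room, lets a front end drill down from a location overview to a single room without extra query parameters.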

The API is consumed by an Angular application that our colleagues can browse. The overview for one of our locations looks like this:

Every room is represented by a tile whose color shows its status: orange means the room is occupied, green means it is available. You can see that our “Tron” room currently reports a pleasant 23 degrees Celsius.
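The tile logic boils down to a small mapping from room status to color. The following sketch assumes occupancy is derived from the presence sensor alone. The real app might also consider calendar bookings, which the post does not specify. `RoomStatus`, `TileColor`, and `tile` are hypothetical names, and the real front end is written in Angular, not Python.

```python
from dataclasses import dataclass
from enum import Enum


class TileColor(str, Enum):
    OCCUPIED = "orange"
    AVAILABLE = "green"


@dataclass
class RoomStatus:
    name: str
    presence: bool        # assumption: occupancy taken from the presence sensor only
    temperature_c: float


def tile(status: RoomStatus) -> dict:
    """View model for one room tile on the location overview."""
    color = TileColor.OCCUPIED if status.presence else TileColor.AVAILABLE
    return {
        "room": status.name,
        "color": color.value,
        "label": f"{status.temperature_c:.0f} °C",  # rounded for display
    }
```

For the “Tron” room from the screenshot, `tile(RoomStatus("Tron", False, 23.0))` yields a green tile labelled “23 °C”.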

Both the Angular and the ASP.NET applications are protected by Active Directory authentication. This makes it particularly easy to maintain our user base, as all employees are already listed in our company’s Azure subscription. Since the compiled Angular app is just a bunch of JavaScript, HTML, and CSS files, we simply host it publicly on Azure Blob Storage; no web server needed.

The Future

As you can see, we still have some items left on our list of initial ideas. Building the smart meeting room infrastructure, which lays the groundwork for future applications like an intelligent parking lot or a smart coffee machine, was challenging and fun, and we learned a lot. With the integral parts in place, we hope to onboard new areas faster.

This overview will be followed by a series of articles, each going in depth on topics we only touched on here, such as our sensor/device setup and testing, our integration of machine learning, and our experiences with the Azure platform. Leave a comment to let us know which areas interest you!