Content-based image retrieval is a field of computer vision. The aim is to find the images most similar to a given input image, where similarity refers to the semantic content of the images. A problem occurs when the applied method delivers irrelevant results, that is, when the content of the recommended images is not similar to the content of the input image. In these cases, user interaction can be used to suggest more relevant images. In this blog post, I present my master's thesis, which focuses on improving image retrieval results by interacting with the user.

Image Retrieval

Before developing user feedback methods, we have to look at image retrieval methods. A popular way to implement image retrieval is deep metric learning, which aims to compare the similarity of objects by measuring the difference between them. This is done by using a neural network to transform the objects into feature vectors. The difference between two objects is then measured as the distance between their feature vectors. The learned transformation aims to reduce the distance between objects of the same class and increase the distance between objects of different classes. In the resulting feature space, similarity can be measured with a standard distance metric such as the Euclidean distance or the cosine distance.
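As a minimal sketch of the two distance metrics mentioned above: the three-dimensional vectors are made up for illustration and are far smaller than real CNN feature vectors.

```python
import numpy as np

def euclidean_distance(a, b):
    """Straight-line distance between two feature vectors."""
    return float(np.linalg.norm(a - b))

def cosine_distance(a, b):
    """1 - cosine similarity: 0 for the same direction, 1 for orthogonal vectors."""
    return float(1.0 - np.dot(a, b) / (np.linalg.norm(a) * np.linalg.norm(b)))

# Toy feature vectors (hypothetical values, not taken from the thesis).
query = np.array([1.0, 0.0, 0.0])
same_class = np.array([0.9, 0.1, 0.0])
other_class = np.array([0.0, 1.0, 0.0])

print(cosine_distance(query, same_class), cosine_distance(query, other_class))
print(euclidean_distance(query, same_class), euclidean_distance(query, other_class))
```

In a well-trained feature space, both metrics should place the image of the same class closer to the query than the image of a different class.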

To use deep metric learning for image retrieval, a dataset of images is necessary. These images are used to train a CNN that transforms them into feature vectors so that they can be compared afterwards. During training, the feature vectors are compared in a loss function, which tries to decrease the distance between feature vectors of images from the same class and increase the distance between feature vectors of images from different classes. The weights of the CNN are adjusted according to the loss function using an optimization algorithm such as stochastic gradient descent.

After training, a user can feed an input image into the trained CNN. The feature vector of the input image is compared to those of the dataset images. The images whose feature vectors have the smallest distance to the feature vector of the input image are presented to the user.

To implement content-based image retrieval, I used Multi-Similarity Loss [1]. As the CNN, I used an InceptionV3 network pre-trained on the ImageNet dataset. This setup delivers a benchmark output that is comparable to the state of the art in content-based image retrieval.

Dataset

I chose the Caltech-UCSD Birds-200-2011 (CUB) dataset [2] to implement the image retrieval method. It contains 11,788 images of 200 different bird species, taken in different poses and environments such as flying in the air, swimming in the water or walking on land. The dataset has often been used as a benchmark in recent literature. The images in the CUB dataset have decent lighting and good image quality. To create a setting where the image retrieval algorithm struggles to recommend relevant images, I reduced the quality of the input image.

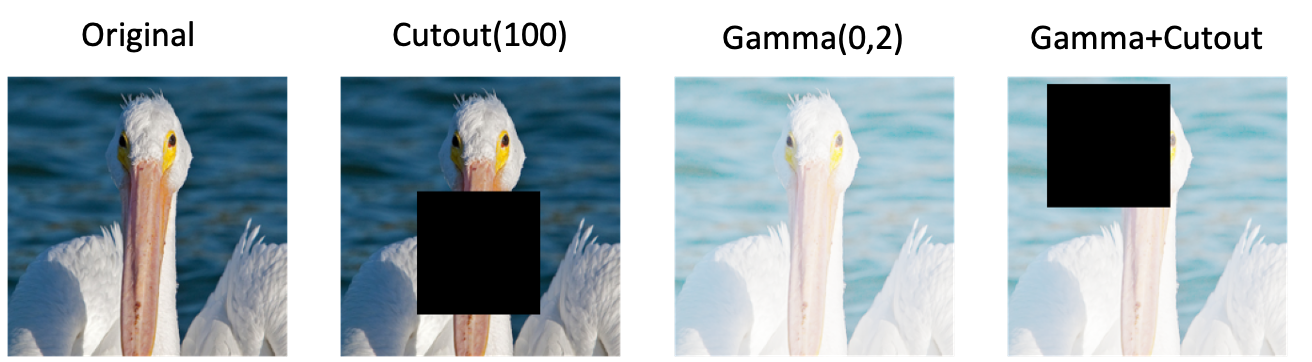

To achieve that, two methods of image manipulation were applied. The results are illustrated in figure 1. The leftmost image is the original image from the CUB dataset. Cutout [3] was applied to the image next to it. In this method, a square of 100×100 pixels overlays a random part of the image. This simulates a scenario where part of the bird is covered by scenery. The other method is gamma correction, where the RGB values of every pixel in the image are increased or decreased. This makes the image appear brighter or darker. In the rightmost image, both methods were applied.
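The two manipulations could be sketched roughly as follows. This is not the thesis code. The black fill colour, keeping the square fully inside the image, and the power-law form of the gamma correction are assumptions, and the gamma values are arbitrary examples.

```python
import numpy as np

def cutout(image, size=100, rng=None):
    """Overlay a size x size black square at a random position (assumed fill: 0)."""
    rng = np.random.default_rng() if rng is None else rng
    height, width = image.shape[:2]
    # Assumption: the square always lies completely inside the image.
    top = int(rng.integers(0, max(height - size, 0) + 1))
    left = int(rng.integers(0, max(width - size, 0) + 1))
    out = image.copy()
    out[top:top + size, left:left + size] = 0
    return out

def gamma_correction(image, gamma):
    """Brighten (gamma < 1) or darken (gamma > 1) an 8-bit RGB image."""
    normalized = image.astype(np.float64) / 255.0
    return np.round(255.0 * normalized ** gamma).astype(np.uint8)

# A uniform grey stand-in for a real photo (height 200, width 300, RGB).
image = np.full((200, 300, 3), 128, dtype=np.uint8)
covered = cutout(image, rng=np.random.default_rng(0))
brighter = gamma_correction(image, 0.5)
darker = gamma_correction(image, 2.0)
both = gamma_correction(covered, 2.0)  # both manipulations combined
```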

Methods

With the described image retrieval method and the manipulated images, we now want to develop feedback methods. Two resources are available for that: the feature vectors of the images in our dataset, which result from the image retrieval process, and the feedback of the user, which consists of a relevant/irrelevant label for every recommended image.

First of all, we take a look at the baseline method, which works without user feedback. It performs a nearest-neighbor search on the feature vectors from the image retrieval process. In each feedback iteration, the images whose feature vectors have the smallest distance to the feature vector of the input image are recommended to the user. Images that have already been presented to the user are excluded from the search for new recommendations. The distance between feature vectors is measured as cosine distance.
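A minimal sketch of this baseline could look like the following. The random vectors stand in for real CNN feature vectors, and the function names and the batch size of eight per iteration are assumptions for illustration.

```python
import numpy as np

def cosine_distances(query, features):
    """Cosine distance from one query vector to every row of `features`."""
    query = query / np.linalg.norm(query)
    features = features / np.linalg.norm(features, axis=1, keepdims=True)
    return 1.0 - features @ query

def recommend(query, features, already_shown, k=8):
    """Return the indices of the k nearest images that were not shown before."""
    distances = cosine_distances(query, features)
    shown_idx = np.array(sorted(already_shown), dtype=int)
    distances[shown_idx] = np.inf  # exclude earlier recommendations
    return np.argsort(distances)[:k].tolist()

rng = np.random.default_rng(0)
features = rng.normal(size=(100, 16))  # stand-in for the dataset's feature vectors
query = rng.normal(size=16)            # stand-in for the input image's feature vector

shown = set()
batches = []
for iteration in range(3):
    batch = recommend(query, features, shown)
    batches.append(batch)
    shown.update(batch)
```

Because no feedback is used, each iteration simply moves further down the same ranked list.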

The first two feedback methods are based on binary classification. After the user has classified the recommended images as relevant or irrelevant, the respective feature vectors are used to train a classification model. This model classifies incoming feature vectors as belonging to the relevant or the irrelevant class. Two different models were used for this task to compare their performance. In one implementation, a support vector machine (SVM) with an RBF kernel was used. In the other, a multilayer perceptron (MLP) with three hidden layers and a binary cross-entropy loss function was applied. After training, the classification models process the feature vectors from the image retrieval process. The images whose feature vectors have the highest probability of being relevant are recommended to the user.
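The SVM variant might be sketched with scikit-learn as shown here. This is an illustration under assumptions, not the thesis implementation: the SVM's signed distance to the decision boundary (`decision_function`) is used as a stand-in for the relevance probability, the data are two synthetic clusters, and the feedback must contain at least one relevant and one irrelevant image for the model to be trainable.

```python
import numpy as np
from sklearn.svm import SVC

def svm_recommend(features, labeled_idx, labels, already_shown, k=8):
    """Rank unseen images with an RBF-kernel SVM trained on the user's feedback."""
    model = SVC(kernel="rbf", gamma="scale")
    model.fit(features[labeled_idx], labels)    # labels: 1 = relevant, 0 = irrelevant
    scores = model.decision_function(features)  # larger = more likely relevant
    scores[np.array(sorted(already_shown), dtype=int)] = -np.inf
    return np.argsort(-scores)[:k].tolist()

# Synthetic stand-in data: rows 0-49 play the relevant class, rows 50-99 the rest.
rng = np.random.default_rng(0)
features = np.vstack([
    rng.normal(loc=2.0, size=(50, 8)),
    rng.normal(loc=-2.0, size=(50, 8)),
])

labeled_idx = [0, 1, 2, 3, 50, 51, 52, 53]  # eight images the user has judged
labels = [1, 1, 1, 1, 0, 0, 0, 0]
recommendations = svm_recommend(features, labeled_idx, labels, set(labeled_idx))
```

In every further iteration, the newly labeled images would be appended to `labeled_idx` and `labels` and the model retrained.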

The third method takes a different approach: it transforms the feature vectors a second time, after they have already been transformed in the image retrieval process. To do this, an MLP takes feature vectors as input and creates new feature vectors as output. What makes the resulting feature vectors special is that the cosine distance between relevant feature vectors should be as small as possible, and the distance between relevant and irrelevant ones should be as large as possible. To create these conditions, triplet loss [4] was used as the loss function to adjust the weights of the MLP during training. After training, the feature vectors from the image retrieval process and the feature vector of the input image are processed by the MLP. The resulting feature vector of the input image is compared to the resulting feature vectors of the rest of the dataset. The images whose feature vectors have the smallest cosine distance to the feature vector of the input image are recommended to the user. In the implementation, I used an MLP with three hidden layers and a 48-dimensional output layer. The workflow of this method is illustrated in figure 2.
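For a single triplet, the loss can be written as max(0, d(anchor, positive) - d(anchor, negative) + margin). A small sketch with cosine distance follows. The margin of 0.2 and the toy vectors are assumptions, and how anchors, positives and negatives were actually selected from the user feedback is not shown here.

```python
import numpy as np

def cosine_distance(a, b):
    """1 - cosine similarity between two feature vectors."""
    return 1.0 - np.dot(a, b) / (np.linalg.norm(a) * np.linalg.norm(b))

def triplet_loss(anchor, positive, negative, margin=0.2):
    """Zero once the positive is closer to the anchor than the negative by `margin`."""
    return float(max(0.0, cosine_distance(anchor, positive)
                     - cosine_distance(anchor, negative) + margin))

# Hypothetical transformed feature vectors (two-dimensional for readability).
anchor = np.array([1.0, 0.0])
relevant = np.array([0.9, 0.1])
irrelevant = np.array([0.0, 1.0])

good = triplet_loss(anchor, relevant, irrelevant)  # already well separated
bad = triplet_loss(anchor, irrelevant, relevant)   # roles swapped: large loss
```

During training, the weights of the MLP would be adjusted so that this loss, averaged over many triplets, becomes small.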

Evaluation

In the evaluation, I compared the methods described above on the CUB dataset using the Recall@K [5] metric. For a single feedback iteration, Recall@K is a binary metric: it takes the value 1 if the K recommendations of that iteration contain one or more relevant images, and the value 0 if they contain none. Averaged over many retrievals, it shows what share of feedback iterations deliver at least one relevant recommendation. To examine the performance of the four described methods on the CUB dataset, Recall@8 was used. The results are illustrated in figure 3.
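The metric as described can be sketched in a few lines. The assumption here is that "relevant" means "same class label as the input image", and the species names are only example labels.

```python
def recall_at_k(recommended_labels, query_label, k=8):
    """1 if any of the first k recommendations has the query's class, else 0."""
    return int(any(label == query_label for label in recommended_labels[:k]))

def mean_recall_at_k(all_recommended_labels, query_labels, k=8):
    """Average Recall@K over several retrievals within one feedback iteration."""
    scores = [recall_at_k(recs, query, k)
              for recs, query in zip(all_recommended_labels, query_labels)]
    return sum(scores) / len(scores)

# Two toy retrievals: the first contains a relevant image, the second does not.
recommendations = [
    ["sparrow", "finch", "cardinal"],
    ["gull", "tern", "gull"],
]
queries = ["cardinal", "pelican"]
score = mean_recall_at_k(recommendations, queries)
```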

In the evaluation, 15 feedback iterations were performed, and for every iteration the mean of the Recall@8 values over all image retrievals in that iteration was calculated. The results show that the values of the baseline method decline slowly. The SVM method achieves better results: its values rise until the eighth iteration and peak at 0.8. In contrast, the values of the MLP method drop after iteration 0. After that, they climb and reach reasonable results, which remain below those of the SVM method. The drop in the first iteration can be explained by the small number of labeled images available. An MLP is a complex model that needs a certain amount of data to be trained properly, and in the first iterations there are not enough labeled images.

The performance of the triplet method is noticeable. First of all, the dataset used to test the triplet method was approximated because of the long execution time. Even so, a clear trend is visible: after iteration 0, the values drop from 0.42 to 0.05 and then stay at that level. This shows that the triplet method does not work in this context. The reason is that an MLP trained with triplet loss is a very complex architecture that needs a lot of data to be trained appropriately. This is even more the case for the triplet method than for the MLP method.

The evaluation shows the problem: the image retrieval process produces high-dimensional feature vectors, and it takes complex models to compare them. But training complex models often requires a lot of training data. In this context, only eight labeled images per iteration are available, which is not a lot. So a suitable method should be complex enough to model these feature vectors but still able to work with a small amount of training data.

Conclusion

To summarize: as a starting point, I used Multi-Similarity Loss with the CUB dataset. Because I wanted to create a scenario where user feedback is actually needed, image manipulation was applied to the input images. On the basis of this setup, I created three feedback methods. The SVM method is based on binary classification with a support vector machine and performed best in the tests. The MLP method showed the second-best performance. It is also based on binary classification, but uses a multilayer perceptron. The triplet method, which transforms the results of the image retrieval process to separate relevant from irrelevant images, achieved the worst results. The outcome shows the core problem of feedback methods: a model is needed that describes the complex relations between feature vectors and is also able to work without a lot of training data.

In future work, other algorithms for feedback methods could be tested on this setup. As there are many other ways to implement a feedback method, there is possibly room for improvement. The methods created here could also be used in a different setup: a real user could evaluate the recommendations, or a real-world dataset could be used that is not taken from the literature and does not contain manipulated images. This may lead to other problems and uncover problems that were not visible in my setup.

References

[1] Wang et al. – 2019 – Multi-Similarity Loss with General Pair Weighting for Deep Metric Learning

[2] Wah et al. – 2011 – The Caltech-UCSD Birds-200-2011 Dataset

[3] DeVries, Taylor – 2017 – Improved Regularization of Convolutional Neural Networks with Cutout

[4] Schroff et al. – 2015 – FaceNet: A Unified Embedding for Face Recognition and Clustering

[5] Yuan, Liu – 2015 – Product Tree Quantization for Approximate Nearest Neighbor Search