Wouldn’t it be nice if we could mimic the production cloud environment on our local machine to speed up development and simplify debugging? This post explains how to set up PyCharm Professional to use a local Docker container as a remote interpreter that mirrors the behavior of your production environment.

Motivation

Especially in the cloud context, there are several managed services for building and scaling Docker-based APIs or batch processing jobs, such as ECS (Elastic Container Service), AWS Batch and Fargate on AWS. The problem with these services is that development and debug cycles are long, as we often need to redeploy our whole setup to see the effect of our changes. Debugging also becomes quite hard if you cannot set breakpoints to inspect the current state of more complex scripts or your own packages. To speed things up and be able to dig into specific behavior, it would be nice to mimic the production environment in a local setup as closely as possible.

As all these services are based on containers, the obvious idea is to build and run the corresponding Docker images locally. This is already much faster but still not fast enough, as we need to rebuild the image after every change.

To move even faster, we can mount our working directory into the container and install local packages in editable mode (as we are talking about Python, “pip install -e” will do the job). That way we can immediately run our changes and see the result.

This is still a bit messy, as we need to start our scripts from the command line and cannot use the strong features of our IDE, such as debugging.

Luckily, the developers of many IDEs have realized this as well and have started to provide remote interpreters that run, for example, in a Docker container.

Setup

In this post we will look at how to develop containerized Python code with PyCharm Professional. The main reason for this choice is that PyCharm Professional provides a very easy and understandable interface for using Docker as a remote interpreter. Other IDEs offer similar functionality, for example VSCode, and there are probably many more. It is worth mentioning that not only Docker can be used as a remote interpreter but also Virtual Environments (PyCharm Community Edition), WSL (VSCode and PyCharm Professional) and SSH (VSCode and PyCharm Professional). The SSH remote interpreter is also extremely useful in the cloud context, for example if you want to develop Spark code on a remote cluster (EMR in AWS). The WSL remote interpreter is a decent choice for developing on a Windows PC for a Linux-based environment (e.g. in the AWS context).

The only requirement besides PyCharm Professional is a working Docker client (in this example on a Windows machine).

Configuration

Docker Client Configuration

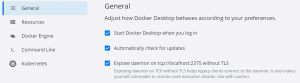

In the Docker settings it is important to enable the “Expose daemon on tcp://localhost:2375 without TLS” option in the general settings. Otherwise, PyCharm will not recognize the local Docker client. It is also required to share the local volume where your code and local data are located (Resources -> File Sharing).
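If PyCharm still does not find the daemon, it can help to check whether anything is listening on the exposed port at all. The following is a minimal sketch using only the standard library; the function name is made up for this post, and the check only confirms that some process accepts TCP connections on the port, not that it is actually Docker.

```python
import socket


def daemon_port_is_open(host="localhost", port=2375, timeout=2.0):
    """Return True if a TCP connection to host:port can be established.

    Hypothetical helper: it only proves that something accepts
    connections on the port, not that the listener is the Docker daemon.
    """
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts and name resolution errors.
        return False


if __name__ == "__main__":
    print("Port 2375 reachable:", daemon_port_is_open())
```

If this prints False although the option is enabled, restarting the Docker client is usually the first thing to try.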

Building the Container

The first thing you need in order to run on a remote Docker interpreter is a local Docker image. You should build it from the same Dockerfile you use for your production environment. Note that it also makes sense to define the Python dependencies required by your container in a setup.py. This also lets you use the “extras_require” option to install packages that you need for debugging or testing but not in production (something like pytest).
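As an illustration, a setup.py along these lines separates production dependencies from development extras. This is only a sketch: the package name, version and the listed dependencies are placeholders, not taken from a real project.

```python
# setup.py (sketch; name, version and dependencies are placeholders)
from setuptools import find_packages, setup

setup(
    name="my_package",  # hypothetical package name
    version="0.1.0",
    packages=find_packages(),
    # Needed in production and in development.
    install_requires=[
        "fsspec",
    ],
    # Only needed for local debugging and testing.
    extras_require={
        "dev": ["pytest", "pytest-cov"],
    },
)
```

With such a file, “pip install .” installs only the production dependencies, while “pip install -e .[dev]” installs the package in editable mode together with the development extras.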

To build your local container go to your favorite terminal and type

docker build -t <name:tag> <path to the folder containing the Dockerfile>

which will create a local Docker image for you. You can verify that your local image exists by typing

docker image ls

which lists the local images on your machine. Note that if you did not specify a tag, it defaults to “latest”.

Configure a Remote Interpreter in PyCharm

Once you have built your local image, open the PyCharm IDE, go to File -> Settings… and open the Project Interpreter settings. Click on the gear icon on the right and then on the Add… option.

In the dialog that opens, choose Docker on the left-hand side. Make sure LocalDocker is used as the Server and choose your Docker image under Image name. For the Python interpreter path, choose python (or python3).

Click OK and Apply and that is it. Congrats, you just configured a remote interpreter. In the Project Interpreter panel you can see and upgrade the Python packages installed inside the container. However, I recommend rebuilding the image when you change your dependencies. This ensures that the development image and the production image do not diverge.

Possible Usage

Run/Debug

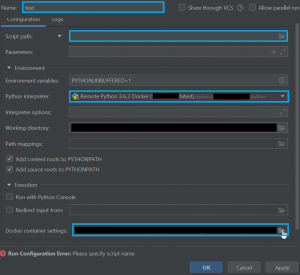

To use the remote interpreter, open the drop-down menu to the left of the run button on the Run/Debug toolbar and click the Edit Configurations… option.

Add a new configuration using the plus sign and select the Python template.

After giving it a proper name, change the script path to the Python script you want to run. Make sure your remote interpreter is selected as Python interpreter.

Click on the folder symbol next to the Docker container settings option. You will see that your project directory is already mounted inside the container, so you have access to your whole project code. There is also an option to define environment variables. This is extremely useful, as it lets you mimic the environment the container would have in the cloud. This could be an S3 location of some data, endpoints of required APIs and so on. Press OK and leave the configuration menu.

Now, with your configuration selected, you can press the run or debug button and your code will start to run inside a newly spawned local container. As PyCharm uses a fresh container instance on each run, all changes inside the code will be taken into account. If the code is started using the debug button, it will stop at every breakpoint and provide the usual debugging options.
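To make use of the environment variables defined in the Docker container settings, the script has to read its configuration from the environment instead of hard-coding it. The sketch below shows one possible way to do this; the variable names DATA_URL and API_ENDPOINT as well as the Settings class are assumptions made for this example.

```python
import os
from dataclasses import dataclass

# Hypothetical variable names; use whatever your cloud setup provides.
REQUIRED_VARIABLES = ("DATA_URL", "API_ENDPOINT")


@dataclass(frozen=True)
class Settings:
    data_url: str
    api_endpoint: str


def load_settings(environ=None):
    """Build Settings from environment variables and fail early if one is missing."""
    environ = os.environ if environ is None else environ
    missing = [name for name in REQUIRED_VARIABLES if name not in environ]
    if missing:
        raise RuntimeError("Missing environment variables: " + ", ".join(missing))
    return Settings(
        data_url=environ["DATA_URL"],
        api_endpoint=environ["API_ENDPOINT"],
    )
```

Because the function accepts any mapping, the same code runs unchanged in the cloud, in the local container and in a unit test that passes a plain dictionary.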

Testing

Another way to use the remote interpreter is for running tests with pytest. Usually PyCharm will already set the remote interpreter as the default interpreter once it is configured, so it will also be used if a test is triggered via the IDE. If you want to use a specific interpreter with additional options for a specific test, just click Edit Configurations… again and create a new entry, this time using the pytest template. Select the test as target, make sure the desired interpreter is selected and configure the Docker container settings as required.

Testing inside a Docker container is especially useful, as we can set breakpoints inside tests to take a closer look at why they are failing. Note that it might be necessary to add --no-cov to the Additional Arguments in your pytest configuration to make the remote interpreter stop at breakpoints (this is a common problem when coverage measurement with pytest-cov is enabled).

What About Data?

In certain cases it is necessary to provide data to see if something works as expected. For the production environment we would usually provide a URL to the required data (e.g. S3 in the AWS context) via an environment variable. In our local container we want to mimic this behavior. The easiest way is to set the same environment variable in our local configuration and load the real data every time we need it. If we also provide any required credentials using environment variables, the code can download the data into the running container at runtime (e.g. with the boto3 API).

However, production data is often large, so we do not want to download it every time we run our script. In addition, we might want to use only a fraction of the real data for testing purposes.

Accordingly, it would be nice if we could run our code inside the same container with small local data for development and large cloud data in production. As local and remote URLs behave differently, we will use a nice Python library for this called fsspec.

This library provides an abstract file interface that decides at runtime whether a URL points to a local file system or to remote cloud storage. We therefore only need to change the values of our environment variables to local file URLs and mount the corresponding data inside the container.

This way, we get a fast way to analyze how our code behaves on real data, which can be lifesaving when projects grow more complex. It also helps a lot to track down errors from our production environment in reasonable time. Using data can also help to provide simple integration tests that run locally.
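The URL-based switching described above can be sketched as follows. The helper names are made up for this example, and fsspec is imported inside the function only so that the module stays importable where fsspec is not installed. Note that reading “s3://” URLs additionally requires the s3fs package.

```python
def read_bytes(url):
    """Read the whole file behind `url` (local or remote) as bytes."""
    # Third-party dependency, imported lazily (see the note above).
    import fsspec

    # fsspec picks the file system implementation from the URL scheme,
    # e.g. "file://" for mounted local data or "s3://" for cloud data.
    with fsspec.open(url, "rb") as handle:
        return handle.read()


def count_lines(url):
    """Hypothetical example task: count the lines of a text file."""
    return len(read_bytes(url).splitlines())
```

Locally, the environment variable could then hold something like “file:///data/sample.csv” (a path mounted into the container), while in production the same variable points to the S3 location. The calling code stays the same.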

APIs

Remote interpreters also provide a nice way to debug APIs. Here, they are configured by setting the script path to the file that starts the API. In the Docker container settings, add a port binding from the API’s container port to a host port on localhost. After doing so, you can start or debug the API locally by pressing the run or debug button. To test specific requests, just fire some of them at the localhost port, e.g. using Postman. If you set a breakpoint inside a request handler, the debugger will stop there during the request and provide insights into how the API handles different requests.
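To try this out without a full framework, a start script like the following can serve as a stand-in for a real API. It is a minimal sketch based on the standard library; the handler, the API_PORT variable and the default port 8000 are assumptions for this example. With Docker's default networking, the server usually has to listen on 0.0.0.0 inside the container, otherwise the bound port is not reachable from the host.

```python
import json
import os
from http.server import BaseHTTPRequestHandler, ThreadingHTTPServer


class EchoHandler(BaseHTTPRequestHandler):
    """Hypothetical request handler that echoes the requested path as JSON."""

    def do_GET(self):
        # A breakpoint on the next line is hit for every GET request
        # when the script is started with the debug button.
        body = json.dumps({"path": self.path, "status": "ok"}).encode("utf-8")
        self.send_response(200)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)


def make_server(host="0.0.0.0", port=8000):
    """Create the server; 0.0.0.0 makes it reachable through the port binding."""
    return ThreadingHTTPServer((host, port), EchoHandler)


def main():
    # API_PORT is an assumed variable name; it must match the container
    # port used in the port binding of the Docker container settings.
    port = int(os.environ.get("API_PORT", "8000"))
    make_server(port=port).serve_forever()


# The file used as script path would end with a call to main().
```

With a port binding from container port 8000 to host port 8000, a GET request to http://localhost:8000/ping from Postman or a browser should return a small JSON document and stop at the breakpoint in debug mode.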