The term data quality is generally used to describe the degree to which data corresponds to the real things or facts it represents. As it is often difficult or impossible in practice to assess the quality of data based on this definition, it is usually estimated by evaluating the deviation from predefined assumptions. The assumptions originate from the specific domain and are to be identified and recorded first, e.g. “The measured temperature is always between -10°C and +50°C due to the technical limitations of the sensor“. Assumptions can refer to the semantic or syntactic correctness, as well as the up-to-dateness or completeness of a data set. In addition to the specification of assumptions, ensuring data quality also involves continuous updating and regular validation, as well as the implementation of a process for handling anomalies. A detailed introduction to data quality can be found here.

Soda as a Tool for Ensuring Data Quality

To use Soda to ensure data quality, you can choose between Soda Core and Soda Cloud. While Soda Core is free to use, Soda Cloud is a subscription-based SaaS offering that can be interacted with via the Soda Library. The range of functions and support for external systems differ significantly between Soda Core and Soda Cloud. With the Soda Checks Language (SodaCL), Soda offers a domain-specific language based on YAML that can be used to define assumptions about data, among other things. More than 25 metrics* are already provided for this purpose, which can be used to define assumptions about data types, missing values, data set size, and much more. This low-code approach allows Soda to be used without extensive programming knowledge and decouples the assumptions of a specific data set from the generic validation process.

In cases where these metrics are not sufficient, user-defined checks can be implemented based on SQL. Soda Checks are defined using the YAML format and provide the basis for validations (Soda Scans). With alert levels and fail conditions* SodaCL offers additional options for implementing more complex strategies to handle anomalies. Last but not least, Soda offers a wide range of integration options*, e.g. to common databases and communication systems. In addition to common cloud data warehouses such as Snowflake, BigQuery, and Redshift, relational database systems such as PostgreSQL, MySQL, and Microsoft SQL Server are also supported. In addition, Soda offers integration options* for dbt and Spark as well as for Slack, Jira, and GitHub.

* Limited functionality in the Soda Core OS version compared to Soda Cloud

Soda Core, Soda Cloud, and Soda Library

While the open-source version of Soda Core is limited to ad-hoc analyses, Soda Cloud and the associated Soda Library allow analysis results to be stored in the cloud and made permanently available for retrieval. To comply with data protection regulations, you can choose between the two regions of the EU and the USA for storage.

- Soda Cloud Dashboard

With Soda Cloud comes the Soda Cloud Dashboard, which allows for easy tracking of your historic data quality checks. In addition to monitoring in the Soda Cloud Dashboard, Soda Cloud also enables the implementation of more complex data quality checks based on a history of previous runs. Some examples of complex checks that are only available in Soda Cloud include:

- Reconciliation checks: comparison of multiple data sets for equality or similarity, e.g. after a data migration.

- Evolution of data distributions: comparison with historical data distributions using hypothesis tests

- Anomaly detection: detection of unusual data using historical metrics

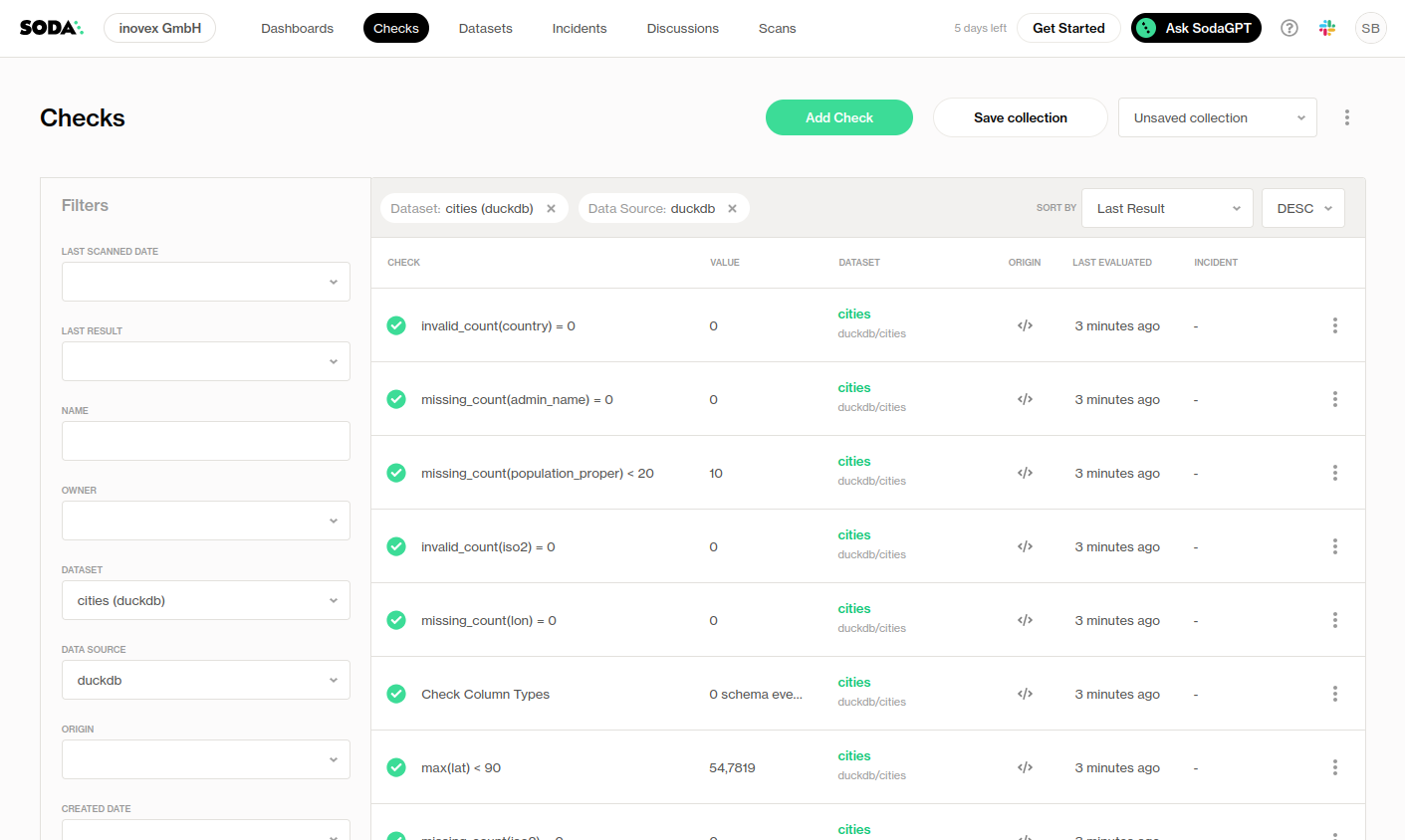

- Soda Cloud Checks

To identify the cause of failed checks, Soda Library can send a sample of the affected data records to Soda Cloud for viewing and analysis. However, it should be noted that potentially sensitive data may be transferred to Soda Cloud.

- Soda Cloud Datasets

Soda Cloud offers insights into your data quality check on different levels (e.g. overall data quality state, datasets, and individual checks) which allows for a good overview.

Soda-hosted and self-hosted Agents

With Soda Agents, it is possible to run scheduled and regular data quality checks. A Soda Agent includes a complete installation of the Soda Library, is ready to run, and can be managed in Soda Cloud. The user can choose between two variants:

The Soda-hosted agent that can be provided with just a few clicks is operated in the Soda Cloud and can only be used on publicly accessible databases (e.g. MySQL, PostgreSQL, Snowflake, and BigQuery are supported). Credentials for the data sources must be stored in the Soda Cloud. Using the Soda-hosted agent variant is very easy, as Soda takes care of the infrastructure and operations, i.e. setup, maintenance, and scaling of the agent. The costs for this variant are calculated by usage (i.e. pay-per-use).

On the other hand, self-hosted agents offer the option of operating them within your own data infrastructure, with all data sources being supported. In this case, credentials and data sources can also remain in your infrastructure and do not need to be stored in the Soda Cloud. The advantage of the self-hosted agent variant is that you have more control over the operations and database credentials and that you can adapt it flexibly to your own needs. The disadvantage is the effort and costs involved in operating it yourself.

Demo: Ensuring Data Quality with Soda

In the following, the Soda Checks Language (SodaCL) is used to define assumptions in the form of soda checks, which are then validated with data scans. As a preparation for this demo, a local instance of PostgreSQL was used, which was populated with data from the DVD Rental PostgreSQL database.

Installation and Configuration of Soda Core

First, the necessary Python packages for the respective data source need to be installed. To integrate PostgreSQL, it is therefore required to install the Python package soda-core-postgres as follows:

|

1 |

pip install soda-core-postgres |

Furthermore, Soda requires a configuration to ensure database connectivity, depending on the data source. This is created in YAML format and looks as follows in our case:

|

1 2 3 4 5 6 7 8 9 |

data_source my_local_db: type: postgres connection: host: localhost port: '5432' username: user password: myPassword database: dvdrental schema: public |

Optionally, to establish a connection with Soda Cloud, an API key and a secret must be provided in the configuration, which can be generated in the Soda Cloud account under Your Avatar > Profile > API Keys.

|

1 2 3 4 |

soda_cloud: host: cloud.soda.io api_key_id: <your_api_key> api_key_secret: <your_api_key_secret> |

To check the configuration and the connection to the data source, Soda CLI offers the following command:

|

1 |

soda test-connection -d my_local_db -c config.yml |

Creation and Execution of Soda Checks

Similar to the configuration files defined above for the database connection, YAML configuration files are also used for the soda checks.

Value Checks

In the data model of the DVD Rental data set, the actor table contains information about movie actors. In addition to the ID of each actor, the table also includes the first and last names of the actors, as well as a metadata column last_update with the last update of the corresponding rows.

We start by defining checks to verify the presence of the first name and surname in each record:

|

1 2 3 |

checks for actor: - missing_count(first_name) = 0 - missing_count(last_name) = 0 |

We can soften the requirement that not a single first name may be missing by making use of the alert levels warn and fail:

|

1 2 3 4 5 6 |

checks for actor: # check for missing columns - missing_count(first_name): warn: when between 1 and 10 fail: when > 10 - missing_count(last_name) = 0 |

If the number of missing first names in the actor table is greater than 0, but less than or equal to 10, this now only appears as a warning in the validation result. The validation itself returns a positive result in such a case. Only if there are more than 10 first names missing in the actor table, the validation would fail.

In the table customer, we can find the column email, which contains the email addresses of the customers. In the following, we define a check to examine whether a unique email address in a valid format has been provided for each customer:

|

1 2 3 4 |

checks for customer: - duplicate_count(email) = 0 - invalid_count(email) = 0: valid format:email |

By default, the check is carried out for all records in the customer table. If only a subset of the data is to be checked, this can be implemented by using a filter configuration either for a single check (in-check filter) or an entire table (dataset-filter). In the following, a check is defined analogously to the example above to check the email column. However, only active customers are taken into account here, which are identified by the value 1 in the active column:

|

1 2 3 4 5 6 |

checks for customer: - duplicate_count(email) = 0 filter: active = 1 - invalid_count(email) = 0: valid format:email filter: active = 1 |

Here you can see that in-check filters are defined for each check and cannot be reused. Dataset filters, on the other hand, are defined per table and can be reused in several checks. The same check can be implemented as a dataset filter as follows:

|

1 2 3 4 5 6 7 |

filter customer [active_only]: where: active = 1 checks for customer [active_only]: - invalid_count(email) = 0: valid format: email - duplicate_count(email) = 0 |

An advantage of this is that you only have to make changes to the filter condition in one central location and these changes will affect all associated checks.

Custom Checks

You can also define your custom checks in addition to the existing soda checks. Custom checks use SQL queries to evaluate a user-defined metric. In the following, a custom check is created for the rental table to check whether the rental date is before the return date:

|

1 2 3 4 5 6 7 8 9 10 11 |

checks for rental: - invalid_dates = 0: invalid_dates query: | SELECT COUNT(*) AS invalid_dates FROM rental WHERE rental_date > return_date; # Optional: if connection to Soda Cloud defined failed rows query: | SELECT * FROM rental WHERE rental_date > return_date; |

One disadvantage of custom checks is that they do not support dataset filters at the moment. In the example above, a failed rows query was defined in addition to the custom check, with which invalid data records can be sent to Soda Cloud to view them there.

Schema Checks

In addition to checking table values, table schemas can also be checked in Soda. Schema checks are particularly useful at the beginning of ETL pipelines to check the presence and data types of critical columns. A schema check for the actor table could look like this:

|

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 |

checks for actor: - schema: name: Confirm that required columns are present and of the correct type fail: when required column missing: - actor_id - first_name - last_name when wrong column type: actor_id: integer first_name: character varying last_name: character varying warn: when required column missing: - last_update when wrong column type: last_update: timestamp without time zone |

Similar to the previous example, the two alert levels fail and warn are used to differentiate between the severity of the different violations: If actor_id, first_name or last_name are missing or the data type of these columns is incorrect, Soda recognizes this as an error and returns a negative result. If the last_update column does not exist or the format is incorrect, Soda only issues a warning.

If you want to execute the previously defined checks, the following Soda CLI command can be used:

|

1 |

soda scan checks.yml -d my_database -c config.yml |

A successful scan returns the following result:

|

1 2 3 4 5 6 7 8 9 10 |

Scan summary: 5/5 checks PASSED: actor in my_database Confirm that required columns are present and with correct type [PASSED] missing_count(last_name) = 0 [PASSED] missing_count(first_name) = 0 [PASSED] customer [active_only] in my_database invalid_count(email) = 0 [PASSED] duplicate_count(email) = 0 [PASSED] All is good. No failures. No warnings. No errors. |

In case there is a duplicate in the email addresses of the customer table, the check for duplicates would return a negative result. The scan result in the CLI would be as follows:

|

1 2 3 4 5 6 7 8 9 10 11 12 13 |

Scan summary: 4/5 checks PASSED: actor in my_database Confirm that required columns are present and with correct type [PASSED] missing_count(last_name) = 0 [PASSED] missing_count(first_name) = 0 [PASSED] customer [active_only] in my_database invalid_count(email) = 0 [PASSED] 1/5 checks FAILED: customer [active_only] in my_database duplicate_count(email) = 0 [FAILED] check_value: 1 Oops! 1 failures. 0 warnings. 0 errors. 4 pass. |

Programmatic Scans with Python

Data validation can also be done programmatically using soda scans with Python. The functionality of the Python library is similar to that of the CLI.

Configure and Execute Scan Object

In the following example, a soda scan is created and then executed. We configure the scan using existing YAML files in this case. It would also be possible to configure it directly in the code using YAML strings.

|

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 |

from soda.scan import Scan scan = Scan() # Set scan definition name, equivalent to CLI -s option scan.set_scan_definition_name("soda_demo") # Add config files scan.add_configuration_yaml_file(file_path="./config.yml") scan.add_sodacl_yaml_file("./checks.yml") # select data source from config scan.set_data_source_name("my_local_db") # Execute the scan exit_code = scan.execute() print("Exit code:", exit_code) # Set logs to verbose mode, equivalent to CLI -V option scan.set_verbose(True) # Print results of scan print(scan.get_logs_text()) |

Save and Process Scan Results

We now use the scan object to analyze the result of the validation. Using the functions of the scan object, we can react to the presence of errors and warnings:

|

1 2 3 4 5 6 7 8 9 10 11 |

# inspect the scan results print(scan.get_scan_results()) # react in case of failures if scan.has_check_fails(): print("some checks failed!") # react in case of failures or warnings if scan.has_checks_warn_or_fail(): for check in scan.get_checks_warn_or_fail(): print(check.get_dict()) |

If you access the individual results of the checks via get_checks_warn_or_fail, specific information such as totalRowCount (i.e. the number of invalid records) can be viewed for custom checks, which cannot be viewed via the log. By default, individual records that have not passed the check can only be viewed in the Soda Cloud. However, in order to be able to view these records without Soda Cloud, it is possible to implement a CustomSampler class that adapts the standard sampler.

Conclusion

Soda offers extensive options for ensuring data quality, both in terms of the range of checks and the supported backends. Particularly worth highlighting is the intuitive usage concept, which stands out positively in comparison to its competitor, Great Expectations. The framework already appears to be quite mature, although the open-source version Soda Core is limited to the core functionality, as the name suggests. To implement more complex mechanisms for ensuring data quality without major implementation effort, it is a good idea to use the SaaS version Soda Cloud. This also offers built-in dashboards to monitor the results of validations, including their history.

At the moment, the pricing for using Soda Cloud is not yet publicly available but is subject to individual offers. When using SaaS offerings, special caution is required when regarding data protection, especially when processing personal data. For example, the transfer of personal data to third-party providers must be contractually regulated and requires the consent of the data subjects.