Can we agree that designing, implementing and operating distributed systems is difficult? If you want to know what consensus has to do with distributed systems and where it is used, then this blog article is the right place to start.

Beyond the polarizing debates that dominate headlines, a glimmer of hope lies in the art of finding common ground. Consensus is one pathway that can guide a collective group of people towards collective decision-making. This is known as consensus-based decision-making.

Computer scientists have seen merit in consensus when it comes to communication between computer systems. Computers are not working in an isolated environment but have to communicate with each other over a network. In many situations, computers work together to achieve a common goal. When multiple computers need to agree on a particular sequence of bytes, consensus becomes indispensable. In this blog article, we want to dive into the world of consensus and explore where consensus is used and why it is used there.

The fundamental problem in distributed systems

Computers do not work perfectly all the time, sometimes computers will fail. A failure can cause outages and can make services or the entire software unavailable. Thus, computer scientists and practitioners had to come up with concepts and methods that could prevent faults from becoming failures.

The fundamental problem of distributed systems is to design and build reliable systems in the presence of a number of faulty processes.

If we want to think about possible failure modes, we have to talk about faults. What kind of faults can arise in computer systems? In general, we can broadly divide faults based on their origin: hardware, software, and human faults.

Hardware faults can include faulty RAMs, hard disk crashes, faulty network cables, and so on. Whatever hardware component we use in our system, can become faulty at any time.

Software faults can include bugs, faulty coupling where one service brings down another service, insufficient error handling, and many more.

But human error does not stop here: This includes misconfiguring a system, forgetting to back up crucial parts of the system, or even accidentally deleting backups.

In this blog article, we cannot talk about how to solve all of the above-mentioned problems but I want to talk with you about processes that can suffer from failures and how we can deal with them.

Process resilience

Process resilience is the art of preventing processes from failure and recovering from situations where processes fail. How can we do that? One common way is to replicate processes into groups. These groups are called homogeneous since we are only replicating processes.

The idea sounds very simple, if a process fails, then another process can take over. It is also very important to ensure that this group of processes is not leaked to external processes since process groups should act as a single logical process.

Since we talk about replication here, the state of each process needs to match the state of the other processes. If one or more processes cannot be trusted, then we need a consensus to resolve this. The participants in a process group are also called members.

Process groups can be divided into 2 classes:

- Hierarchical Groups: One process is the leader and the leader coordinates the other processes.

- Flat Groups: No process is the leader and all decisions are made collectively.

These 2 classes of groups have advantages and disadvantages. Hierarchical groups have a single point of failure, that is the leader, but you do not need to vote for every decision being made in the process group. You will only need to vote for the next leader when the previous one becomes unavailable.

A flat group has no single point of failure since there is no special process but all decisions are made collectively.

Thus, hierarchical groups are most often the preferred solution since processes will not fail very often, at least not if the design and implementation are not inherently bad. And we are not defenseless since we can appoint a new leader.

Moreover, when the leader tells its followers that they have to do something, it is common to refer to these operations as commands. A leader appoints commands to its followers and the followers will apply the commands when the leader gives the signal to do so. Replicated processes need to execute this set of commands in the same order to obtain the same result.

When we talk about Raft, we will see that Raft also uses the hierarchical group model and the notion of commands.

Consensus

The first consensus algorithm that was proposed is Paxos. Paxos was published as a technical report by Leslie Lamport (Turing Award Winner) in 1989. A decade later, Lamport published a scientific paper called “The Part-Time Parliament“.

Fun fact: The name Paxos refers to the small Greek island that is also named Paxos. Archaeologists have found something interesting on this small island, they found out that the legislators maintained consistent copies of the parliamentary records.

The story on how they replicated the records and why they did that was an inspiration for the Paxos algorithm that describes how one can reach consensus in distributed systems. We will not dive deeper into the topic of Paxos. Paxos was very important since it brought consensus to the surface but only very few people in this world understand all the details of this algorithm since it is notoriously difficult to understand. As we will see, this led to the development of other consensus algorithms.

Since Paxos is so difficult to understand in all its specifications, the implementations of Paxos also differ. But that didn’t stop researchers from extending Paxos, among other things Multi-Paxos, Cheap-Paxos, and Fast-Paxos are extending the Paxos family.

In 2014, Diego Ongaro and John Ousterhout developed a distributed consensus algorithm called Raft that should establish an alternative to Paxos that is much easier to understand. Understandability is a core idea in Raft. If you want to have a thorough read through this consensus algorithm, you should check out the extended version of the original paper.

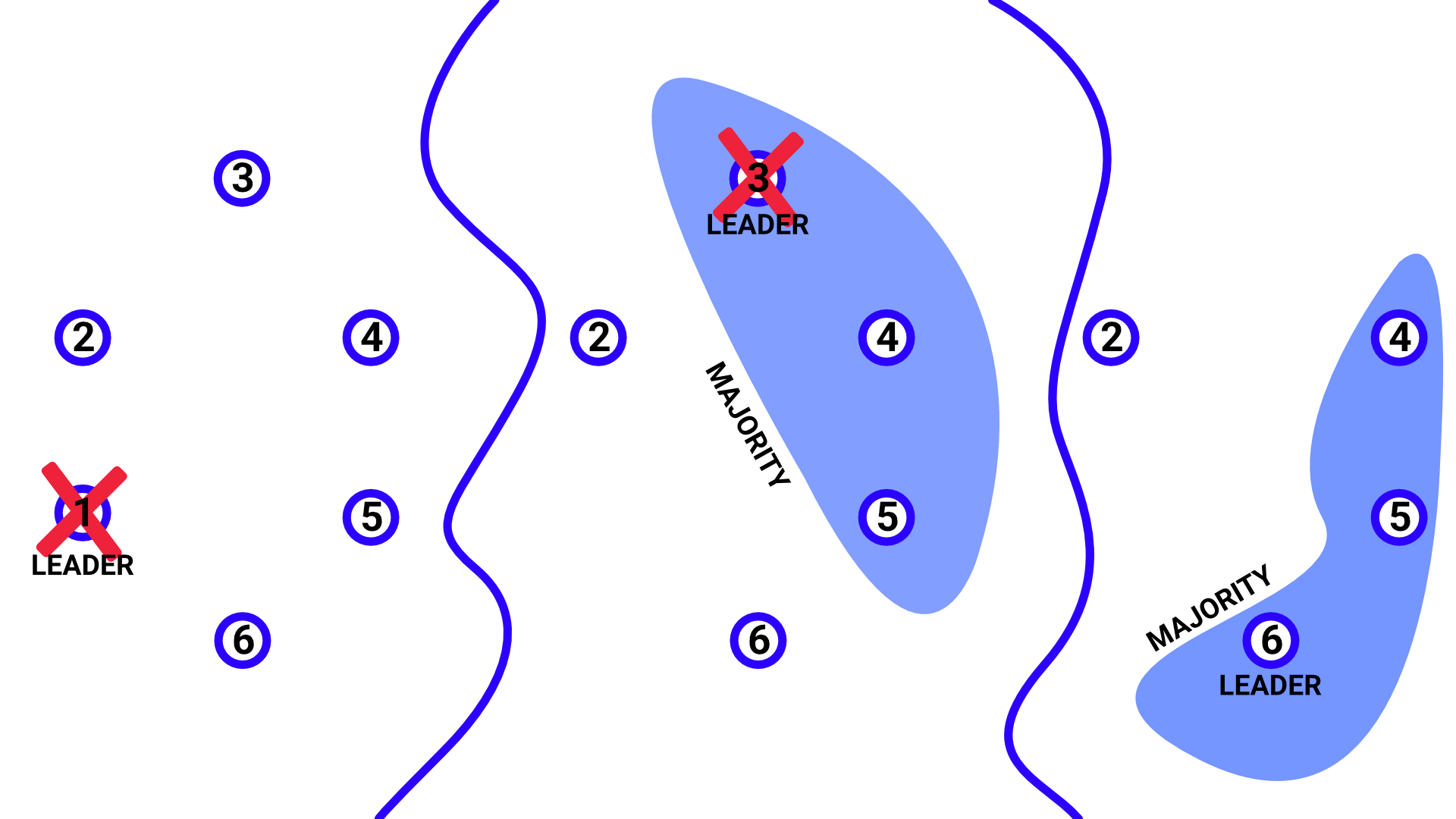

So how does Raft implement consensus? There is no better way of understanding a complex algorithm than by having a look at an example. Suppose we have three nodes that are communicating with each other. A node can be in the following 3 states: leader, follower, or candidate. In the beginning, all of these nodes will be in the follower state. We have no leader so the followers will start to transition to the candidate state such that they can become the next leader. Followers will notice when the leader is down due to the heartbeat mechanism. Normally, the leader would send a heartbeat to all of its followers, signaling that the leader is still available. But if the follower does not hear the heartbeat for a certain time, they start to become candidates. A voting quorum will occur. The candidates will vote for themselves and request votes from the other nodes. The other nodes will reply with their vote and a candidate will transition to the leader state when it gets the majority of votes. A split vote could also occur which would lead to a new term with a new voting process. Now, we have an assigned leader which will now start coordinating the other nodes. Each change will need to go through the leader and each change is appended in the node’s log. Also, Raft works with committed logs, so all changes are appended in an uncommitted log state. The follower needs to signal to the leader that they have received the changes from the leader. When the follower writes the changes into their log, they will send the leader a message that they have written to the log. The leader will commit the change and will notify the followers to also commit their changes. In principle, this is how Raft established consensus in a distributed system. This mechanism is also known as Log Replication.

For the interested reader: If you have wondered how candidates are requesting votes, then we should have a look at a real implementation of Raft. Let’s have a look at Hashicorp’s implementation of Raft in Go for that. Raft’s original paper states that Raft should offer specific RPC’s. The RPC we are interested in here is the RequestVote RPC. We can find this in the net_transport.go file with the following lines of code:

|

1 2 3 4 |

// RequestVote implements the Transport interface func (n *NetworkTransport) RequestVote(id ServerID, target ServerAddress, args *RequestVoteRequest, resp *RequestVoteResponse) error { return n.genericRPC(id target, rcp RequestVote, args, resp) } |

Hashicorp has implemented a method genericRPC that can be used to invoke RPCs. In this case, we are invoking rpcRequestVote which is the request type. Pay attention to the arguments that are being passed, we are passing a RequestVoteRequest and a RequestVoteResponse. RequestVoteResponse is a struct that can be found in the commands.go file. It looks like this:

|

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 |

type RequestVoteRequest struct { RPCHeader // Provide the term and our id Term uint64 // Deprecated: use RPCHeader.Addr instead Candidate []byte // Used to ensure safety LastLogIndex uint64 LastLogTerm uint64 // Used to indicate to peers if this vote was triggered by a leadership // transfer. It is required for leadership transfer to work, because servers // wouldn't vote otherwise if they are aware of an existing leader. LeadershipTransfer bool } |

A lot is going on in this struct but if you take a look at the Raft paper, you will see that we have the following requirements for this RPC call, we should provide the following arguments: The term, the ID of the candidate, the index of the candidate’s last log entry and the term of the candidate’s last log entry. The latter two are represented by LastLogIndex and LastLogTerm. Candidate is deprecated but the RPCHeader contains the candidate’s ID and also the address of the candidate.

We also have requirements for the RequestVoteResponse. It should return the following: the current term and whether the vote is granted or not. When we take a look again at the actual implementation,

|

1 2 3 4 5 6 7 8 9 10 11 12 13 14 |

type RequestVoteResponse struct { RPCHeader // Newer term if leader is out of date Term uint64 // Peers is deprecated, but required by servers that only understand // protocol version 0. This is not populated in protocol version 2 // and later. Peers []byte // Is the vote granted Granted bool } |

We can see that the RequestVoteResponse contains the Granted field as well as the Term field. When a receiver gets a RequestVoteRequest, how should the receiver behave? The receiver should set Granted to false if the term is lower than the current term. A term is basically discretizing time into time intervals of arbitrary length. So we can assume that a candidate with a lower term possesses an older log version and we don’t want a leader with an older log version. A vote should be only granted when the candidate’s log is at least as up-to-date as the receiver’s log and the candidate ID that received the vote in the current term is either null or the candidate ID of the voter. If you are interested in the whole behavior, have a look at the raft.go file and the following function with the signature func (r *Raft) requestVote(rpc RPC, req *RequestVoteRequest). Okay, I hope you got an idea of how such an RPC works.

When you dive into the world of Raft or other popular consensus algorithms, you will most likely read about state machine replication. Why is that? Well, the state-machine approach is a framework that lets us understand how replication protocols can work in a faulty environment. So how does it work in Raft? Each node has a log containing a series of commands. These commands need to be executed once the log changes are committed. The state machine is responsible for executing these commands once they are committed. All of the changes in the log must be processed in sequence to guarantee that every node has the same result.

Another quite popular consensus algorithm is the ZooKeeper Atomic Broadcast Protocol (Zab). ZooKeeper guarantees that messages are delivered reliably to all nodes. Also, every message that has been sent, is delivered in order. Furthermore, ZooKeeper uses peer-to-peer (p2p) FIFO channels via TCP. TCP is useful when using FIFO channels since one can use two properties of TCP: data is delivered in the same order it is sent and no message is sent once a FIFO channel is closed.

FLP theorem of distributed systems

The FLP theorem is named after the researchers who described FLP for the first time, the researchers are Michael J. Fischer, Nancy A. Lynch, and Michael S. Paterson. The FLP theorem states the following:

No consensus protocol is correct despite one fault.

When you read this theorem for the first time, you might think that it sounds a little bit under-complex. But indeed a lot of important implications can be deduced from it. Other than that, this theorem can be proven by contradiction. You assume that a consensus protocol will work correctly at all times despite one fault. But you can then show that a consensus algorithm can lead to a situation where it is forever indecisive.

However, we should be cautious with this result since an analysis of distributed systems is very sensitive to the assumptions that are being made. Here we are dealing with an asynchronous network model where we want to reach three goals: Fault tolerance, termination, and consensus.

Thus, the researchers of the FLP theorem made the following conclusion at the end of their paper:

We have shown that a natural and important problem of fault-tolerant cooperative computing cannot be solved in an asynchronous model of computation. These results do not show that such problems cannot be “solved“ in practice;

rather, they point up the need for more refined models of distributed computing that better reflect realistic assumptions about processor and communication timings, and for less stringent requirements on the solution to such problems.

That is the reason why consensus algorithms like Raft and Zab are working with timeouts and tolerate situations where the system may hang but will not violate any of its guarantees.

Applications

We will not dive too deep into the details of the above-mentioned consensus algorithms and prefer to talk here about the applications where consensus algorithms are used to solve asynchronous communication with replication. A very popular software that uses consensus is the well-known Kafka. Kafka is a streaming technology that can store messages on partitioned disks that can be replicated for fault tolerance. Producers can add messages to the Kafka cluster and consumers can read messages from the Kafka cluster. This technology is well suited when you want to decouple your producers from consumers. So what makes the replication in Kafka possible? Kafka uses Zab as a consensus algorithm but this has one downside, ZooKeeper is a third-party dependency in Kafka and it is technically a separate system. Kafka uses ZooKeeper as a metadata store, information about partitions and brokers are stored there, also the election process has to go through the ZooKeeper ensemble. Therefore Kafka has been working on Apache Kafka Raft (KRaft) where Raft has been integrated into Kafka itself. This will enable more scalability, support for more partitions, and a faster failover. For more details, go to the KIP 500.

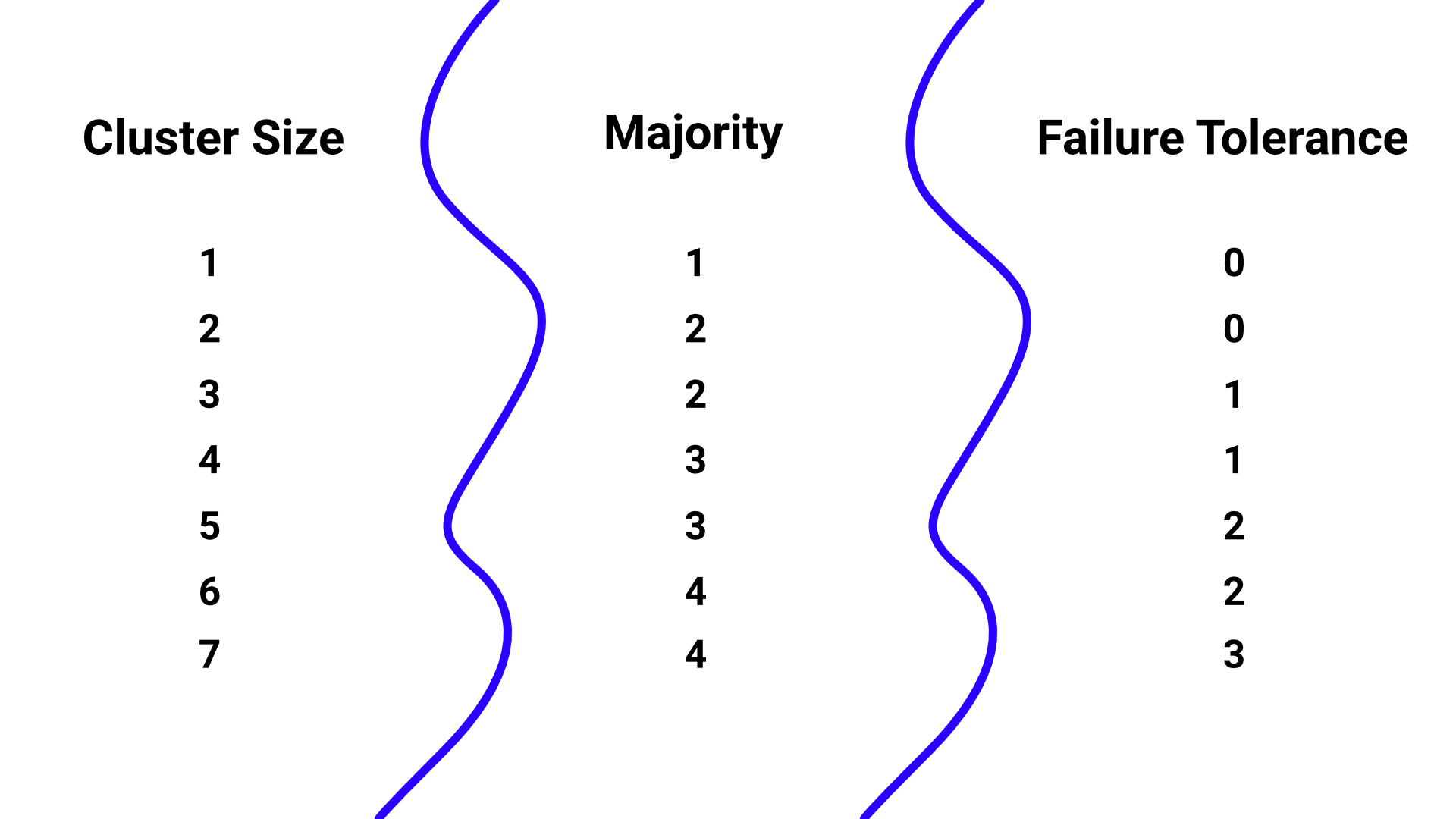

Another very important technology for us today that uses consensus is etcd. etcd stands for „distributed etc directory“ and is essentially a key-value store that is used as a coordination service in distributed systems. This component is a third-party dependency to Kubernetes that is one of the most popular technologies out there. Under the hood, etcd uses Raft. Normally, you would operate etcd as a cluster on a multi-node setup, either 3, 5, or 7 nodes. In production settings, 5 nodes are recommended since the cluster can tolerate 2 cluster members to fail. Since etcd uses Raft, the requests are processed by the leader node and only serialized read requests will be also processed by any other cluster node.

Why do you typically use either 3, 5, or 7 replicated control plane nodes?

The reason is consensus. Consensus algorithms are working with voting quorums to elect a new leader or for a quorum to agree on a particular value. Suppose we have only 2 nodes. If a voting quorum occurs and both of the nodes have different data values, then they will not reach a consensus since a third node is missing that can either agree on one or the other data value. If we have 3 nodes, then we need a majority of 2 nodes that agree on a data value, in such a cluster we can tolerate one node failing. When we have 4 nodes we can also only tolerate one node failure but 4 nodes is worse than 3 nodes since we got the same fault tolerance but we need a majority vote of 3 nodes.

Therefore, we can use 5 nodes to improve our resilience, a cluster with 5 nodes can tolerate 2 nodes failing and need a majority vote of 3 nodes. If this is not enough, we can use 7 nodes, then we will be able to tolerate 3 node failures. But you should not use more than 7 replicated control plane nodes in production since the improvement in resilience comes with a price. The price is performance degradation since every change in the cluster needs to be replicated from the leader to all followers. The more members we have in our cluster, the more messages need to be sent. Furthermore, we have more nodes that we need to maintain and configure. Thus, 5 control plane nodes in production is a good trade-off between performance and resilience.

Many people who are not into this topic often wonder why you can only tolerate 2 nodes failing when you are starting with 5 nodes. Shouldn’t it be tolerating 3 nodes failing? Since when we lose 2 nodes, we have still 3 nodes and we have learned that 3 nodes can tolerate one failure, so this would sum up to 3 nodes‘ failure tolerance. Well, this is not the whole story. When you learn about quorum-based protocols, you will learn that to guarantee write-write consistency, you will need at least floor(n/2+1) members in a voting quorum. If we plug into this formula n=5, we will get out 3. So when we start with 5 nodes and lose 2 nodes, we only have 3 members left in the cluster, so we cannot tolerate another failure since we would drop below the 3 required members. If you want to understand this, I would advise you to read Gifford’s research paper which was already published in 1979.

Final remarks

Consensus is imperative for building robust distributed systems and it does not hurt to at least know a little bit about it when working with highly distributed systems nowadays.

I hope you found this article useful! See you in the next one!