Notice:

This post is older than 5 years – the content might be outdated.

The ELK/Elastic stack is a common open source solution for collecting and analyzing log data from distributed systems. This article shows you how to run an ELK stack on Docker using Docker Compose. This lets you run ELK distributed across your Docker infrastructure or test it on your local system.

To get started, copy the source code below into two files in the same directory: `docker-compose.yml` and `elasticsearch.yml`. These two files and a working Docker installation are all you need.

Starting up Docker Compose

The Docker Compose file uses official images from Docker Hub, so there is no need to build your own images to get started. There are two ways to launch your ELK stack from this example:

- As single instances: Open a shell in the directory where you've put the two files and type `docker-compose up`. This command starts one container each for Elasticsearch, Kibana and Logstash. The Logstash container listens on port 8080, the Kibana container on port 80.

- With an Elasticsearch cluster of x data nodes: `docker-compose scale elasticsearch=x kibana=1 logstash=1`. The service names are written in lowercase, exactly as they appear in the compose file. This starts an Elasticsearch cluster with x nodes, one Logstash and one Kibana instance. Logstash again listens on port 8080, Kibana on port 80.
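The compose file publishes the Elasticsearch ports 9200 and 9300 without a fixed host port, so Docker assigns a free host port to every Elasticsearch container. The following Python sketch shows one possible way to check whether a scaled cluster has formed. It assumes that `docker-compose port` prints a single `host:port` line and relies on the `number_of_nodes` field of Elasticsearch's `_cluster/health` response; the function names are made up for this illustration.

```python
import json
import subprocess
import urllib.request


def parse_port_output(output):
    """Split the 'host:port' line printed by `docker-compose port`."""
    host, _, port = output.strip().rpartition(":")
    return host, int(port)


def published_address(service="elasticsearch", private_port=9200, index=1):
    """Ask docker-compose which host port maps to a container's private port."""
    output = subprocess.check_output(
        ["docker-compose", "port", "--index={}".format(index),
         service, str(private_port)],
        universal_newlines=True,
    )
    return parse_port_output(output)


def cluster_has_nodes(health, expected_nodes):
    """Check a parsed _cluster/health document for the expected node count."""
    return health.get("number_of_nodes") == expected_nodes


def check_cluster(expected_nodes):
    """Fetch cluster health from the first Elasticsearch container."""
    host, port = published_address()
    # 0.0.0.0 is a bind address; connect through localhost instead.
    if host == "0.0.0.0":
        host = "localhost"
    url = "http://{}:{}/_cluster/health".format(host, port)
    with urllib.request.urlopen(url, timeout=5) as response:
        health = json.loads(response.read().decode("utf-8"))
    return cluster_has_nodes(health, expected_nodes)
```

Run from the directory that contains the compose file, `check_cluster(3)` should return `True` once all three nodes of a cluster started with `elasticsearch=3` have joined.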

Sending & accessing data

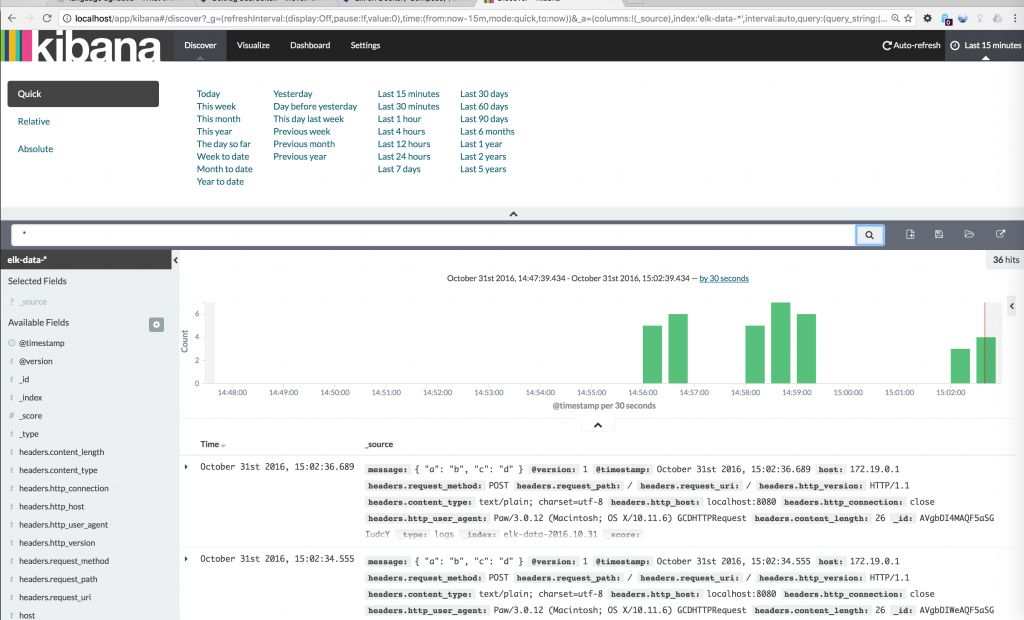

Logstash listens on port 8080, so feeding data into the system works via a simple curl call: `curl -XPOST http://localhost:8080/ -d '{"a": "b", "c": "d"}'`. Kibana is published on port 80. Because the Kibana image ships unconfigured, you will be prompted to set up an index pattern on first access. Logstash writes its data to a daily index following the naming scheme `elk-data-*`.
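For scripted use, the same request can be sent from Python. This is a minimal sketch, not part of the original setup: the function names are illustrative, and the `Content-Type: application/json` header is an assumption made so that the Logstash http input decodes the body into fields rather than treating it as plain text. The last function mirrors the `%{+YYYY.MM.dd}` pattern from the compose file to show which daily index an event lands in.

```python
import json
import urllib.request
from datetime import datetime, timezone

LOGSTASH_URL = "http://localhost:8080/"  # port published by the logstash service


def build_request(event, url=LOGSTASH_URL):
    """Build a POST request carrying one event as a JSON document."""
    body = json.dumps(event).encode("utf-8")
    return urllib.request.Request(
        url,
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def send_event(event, url=LOGSTASH_URL):
    """Send one event to the Logstash http input and return the HTTP status."""
    with urllib.request.urlopen(build_request(event, url), timeout=5) as response:
        return response.status


def daily_index_name(when=None, prefix="elk-data-"):
    """Name of the daily index for a timestamp, mirroring %{+YYYY.MM.dd}."""
    if when is None:
        when = datetime.now(timezone.utc)
    # Logstash formats the event's @timestamp, which is kept in UTC.
    return prefix + when.astimezone(timezone.utc).strftime("%Y.%m.%d")
```

With the stack running, `send_event({"a": "b", "c": "d"})` posts the same document as the curl call, and `daily_index_name()` returns the index to look for in Kibana.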

Because Elasticsearch persists its data on local volumes, the data in your cluster is kept until those volumes are removed.

Source code

docker-compose.yml:

```yaml
version: '2'
services:
  elasticsearch:
    hostname: elastic
    domainname: elk-local
    image: elasticsearch:2.4
    ports:
      - "9200"  # container port only, Docker picks a free host port (needed for scaling)
      - "9300"
    environment:
      - ES_HEAP_SIZE=1G
    volumes:
      - $PWD/elasticsearch.yml:/usr/share/elasticsearch/config/elasticsearch.yml  # mount file onto file, not onto the config directory
      - data:/data
  kibana:
    hostname: kibana
    domainname: elk-local
    image: kibana
    ports:
      - "80:5601"
  logstash:
    hostname: logstash
    domainname: elk-local
    command: logstash -e 'input { stdin { } http { port => 8080 } } output { elasticsearch { hosts => [ "elasticsearch" ] index => "elk-data-%{+YYYY.MM.dd}" } stdout { } }'
    image: logstash
    ports:
      - "8080:8080"
volumes:
  data:
    driver: local
```

elasticsearch.yml:

```yaml
cluster:
  name: elk_local

discovery:
  zen:
    minimum_master_nodes: 1
    ping:
      unicast:
        hosts: [ 'elasticsearch' ]

path:
  logs: /var/log/elasticsearch

network:
  host: ['0.0.0.0']

node:
  data: true
  master: true
  name: ${HOSTNAME}
```
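A note on `minimum_master_nodes: 1`: every node in this configuration is master-eligible, so the value is fine for a single node but lets a scaled cluster split into independent masters. For zen discovery, the commonly recommended value is a quorum of the master-eligible nodes, (n / 2) + 1 with integer division. As a small worked example (the function name is illustrative):

```python
def minimum_master_nodes(master_eligible_nodes):
    """Quorum of master-eligible nodes: floor(n / 2) + 1."""
    if master_eligible_nodes < 1:
        raise ValueError("a cluster needs at least one master-eligible node")
    return master_eligible_nodes // 2 + 1


# Print the quorum for a few cluster sizes.
for nodes in (1, 2, 3, 5):
    print(nodes, "->", minimum_master_nodes(nodes))
```

For a cluster started with x = 3 this yields 2, which would be the value to put into `elasticsearch.yml` before scaling.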

Example usage

As mentioned in the introduction, this is an easy way to run ELK locally on your system, which also makes it easy to feed local files into your own ELK.
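One way to feed a local file is to post it line by line to the Logstash http input on port 8080. The sketch below is only an illustration under a few assumptions: the field names `message`, `path` and `line` are chosen freely, and the JSON content type is set so that Logstash can decode each body into fields.

```python
import json
import urllib.request


def file_events(lines, path):
    """Turn the lines of a log file into event dictionaries, skipping blank lines."""
    for number, line in enumerate(lines, start=1):
        text = line.rstrip("\n")
        if text.strip():
            yield {"message": text, "path": path, "line": number}


def post_file(path, url="http://localhost:8080/"):
    """POST every non-empty line of a local file to the Logstash http input."""
    sent = 0
    with open(path, encoding="utf-8", errors="replace") as handle:
        for event in file_events(handle, path):
            request = urllib.request.Request(
                url,
                data=json.dumps(event).encode("utf-8"),
                headers={"Content-Type": "application/json"},
                method="POST",
            )
            with urllib.request.urlopen(request, timeout=5):
                sent += 1
    return sent
```

Calling `post_file` with the path of any readable log file returns the number of events sent. One request per line keeps the sketch simple; for large files a dedicated shipper is likely the better choice.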

Feeding local syslogs

Another use case is to run Logstash as a local syslog server. Replace the `command` entry of the logstash service (line 24 of docker-compose.yml) with this line:

```yaml
    command: logstash -e 'input { syslog { port => 8514 add_field => [ "received", "%{@timestamp}" ] type => syslog } http { port => 8080 } } output { if [type] == "syslog" { elasticsearch { hosts => [ "elasticsearch" ] index => "syslog-%{+YYYY.MM.dd}" } } else { elasticsearch { hosts => [ "elasticsearch" ] index => "elk-data-%{+YYYY.MM.dd}" } } stdout { } }'
```

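This makes Logstash listen for incoming syslog messages on port 8514. If your syslog daemon runs on the Docker host, the port also has to be published, for example by adding "8514:8514/udp" to the ports of the logstash service, because the compose file only publishes port 8080. Next, change your syslog config and add something like this (in rsyslog syntax, a single @ forwards via UDP):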

```
*.* @localhost:8514
```

After you've recreated your Logstash container (for example with `docker-compose up`) and reloaded your syslog daemon, incoming messages end up in daily indices named `syslog-*`.
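To verify the syslog path without waiting for real log traffic, you can send a hand-made test message. The following sketch formats a BSD-style (RFC 3164) syslog line and sends it over UDP. It assumes that UDP port 8514 of the Logstash container is reachable from where the script runs; the tag `elk-test` and the function names are made up for this example.

```python
import socket
from datetime import datetime

MONTHS = ("Jan", "Feb", "Mar", "Apr", "May", "Jun",
          "Jul", "Aug", "Sep", "Oct", "Nov", "Dec")


def format_syslog(message, when, hostname="localhost", tag="elk-test",
                  facility=1, severity=6):
    """Format a BSD-style syslog line; PRI = facility * 8 + severity."""
    priority = facility * 8 + severity
    # RFC 3164 pads the day of month with a space, not a zero.
    timestamp = "{} {:2d} {:02d}:{:02d}:{:02d}".format(
        MONTHS[when.month - 1], when.day, when.hour, when.minute, when.second)
    return "<{}>{} {} {}: {}".format(priority, timestamp, hostname, tag, message)


def send_syslog(message, host="localhost", port=8514):
    """Send one test message over UDP to the Logstash syslog input."""
    line = format_syslog(message, datetime.now())
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        sock.sendto(line.encode("utf-8"), (host, port))
```

After `send_syslog("hello from the host")`, the message should show up in the Logstash container's stdout and in the current `syslog-*` index.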
