In this blog post, we’ll explore a practical example using a lunch planner app built on the Snowflake Native App Framework. This framework enables developers to package data and application logic into a shareable application, which can be distributed via the Snowflake Marketplace or privately. It also enhances the developer experience by offering an integrated testing environment, allowing applications to be easily tested with a single account after installation or updates.

What is the Snowflake Native App Framework?

With the Snowflake Native App Framework, it is possible to create actual applications that run on Snowflake and can be easily deployed within or even outside your cooperation with the Snowflake Data Cloud. The framework allows sharing data applications, i.e., data and related business logic, with other Snowflake accounts (consumers) over the Snowflake Marketplace as public or private listings. The business logic of the data application can include a Streamlit app (with UI Components for visualizations and interactions), stored procedures, and functions using the Snowpark API, Javascript, and SQL.

The Snowflake Native App Framework also supports a better experience for developers by providing an integrated testing environment where applications can be tested from a single account. Additionally, the framework supports releasing versions and patches for applications, so that developers can evolve the logic of applications and release them incrementally to customers. Furthermore, the framework supports the logging of structured and unstructured events so that it is possible to troubleshoot and monitor the applications.

Components of the Snowflake Native App Framework

In the image below, a high-level view of the Snowflake Native App Framework is visualized.

The first component visualized in the diagram is the Provider Account. Here, a provider is a Snowflake user who wants to share data and application logic with other Snowflake users (consumers).

Providers create an application package to share data and application logic with a consumer. An application package is created to encapsulate packaged data, application logic, metadata, and a setup script required by an application. It also contains information about versions and patch levels defined for the application.

To create and develop an application package, a manifest file, and a setup script are required. In the manifest file, the configuration and setup properties required by the application are defined. The setup script contains SQL statements that are executed when the consumer installs or upgrades an application or when the provider installs or upgrades an application for testing.

After the development and testing of the application package are completed, a provider can share an application with consumers by publishing a listing containing the application package. The listing can be distributed as a private or public listing on the Snowflake Marketplace. Snowflake Marketplace listings are used to share applications with many consumers simultaneously, and private listings are used to share applications directly with another specific Snowflake account.

Consumers are Snowflake users who want to access the data content and application logic shared by providers. After a listing including an application package is published by a provider, consumers can find the listing and install the application. Here, the application is installed as a database object (application object), also known as a Snowflake Native App, in the consumer account. During the installation of the Snowflake Native App, Snowflake creates the application and runs the setup script to create the required objects within the application.

Snowflake Native Apps are applications focusing mainly on data, analytics, and machine learning solutions which can operate directly with the data content within Snowflake’s Platform. These applications can be run natively within Snowflake and can take advantage of the secure, scalable and performant infrastructure of Snowflake. Additionally, they benefit also from the governance and security features of Snowflake, ensuring data privacy and compliance are maintained.

Use Case: Lunch Planner App

In this blog post, we demonstrate how we can leverage the Native App Framework to develop a lunch planner app. With this lunch planner app, a user can select recipes to create a lunch plan for a week. The app suggests recipes based on user-selected ingredients and generates recipe suggestions that the user selects until a lunch plan is completed.

Technically, our lunch planner data app is composed of three essential components, i.e,

- the data and business logic (Native App) owned by the provider side,

- the data of the future app user, owned by the consumer,

- and external API services, which we need to implement our business logic.

In this use case, the provider who shares the data content and logic of the application provides a user interface as a Streamlit app as well as ingredients data contained in the application package to the consumer. The list of ingredients is visualized in the Streamlit app for the user. From this list, ingredients to be excluded from the recipe suggestions can be selected. To get the recipe suggestions, the provider of the application enables the consumer to call an API through the user interface. In the application package, the provider also includes a specific stored procedure, which can be called by the consumer after the installation of the application. By executing the stored procedure, specific APIs in a Snowflake account of the consumer can be called.

Technical Details of the Lunch Planner App

The API that is used in this application is the Recipe API of EDAMAM, which receives a list of ingredients and returns recipe suggestions without any of these previously excluded ingredients.

The provider of the application also allows the consumers to store recipes in their own database table by providing a reference which can be used to bind a database table of the consumer to the application.

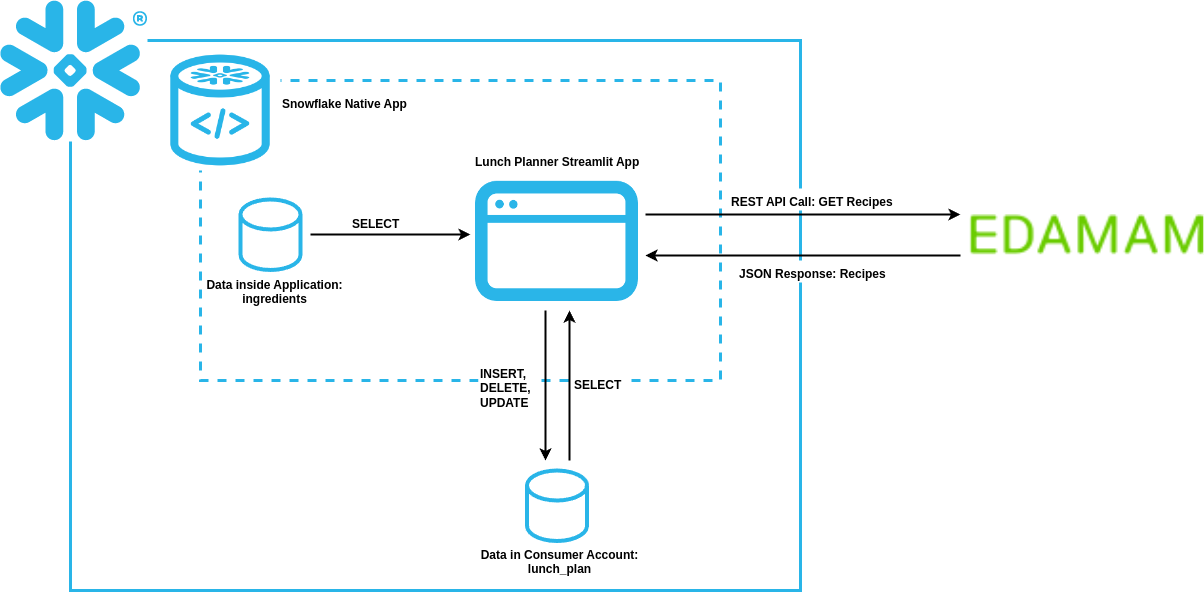

After the consumers install the application from the listing, call the stored procedure of the application to enable API calls, and bind a table of their own Snowflake account to the application, we get the data flow and structure as visualized in the image below.

The image shows the three components of the lunch planner application mentioned above. The first component (data and business logic of the provider) is the Snowflake Native App area, which contains the lunch planner Streamlit app and the ingredients data within the application package. The second component (data of the future app user owned by the consumer) is the lunch_plan table in a consumer account. Finally, the third component is the interaction between the lunch planner Streamlit app and the Recipe API of EDAMAM.

Thus, in Snowflake Native Apps, it is possible to access data that is stored in different locations. This enables developers to build applications with more flexibility.

The detailed structure of the application and the steps necessary to set up the lunch planner application are explained in the following sections.

It is important to note that if you want to follow the steps outlined here, that these can also be executed with only a single Snowflake account since the Snowflake Native Framework allows developers to test the application after installation and upgrade. Thus, it is not required to use two different accounts, since the provider and the consumer can be simulated with only one Snowflake account. Therefore, if we talk about a “consumer account“ in the following sections, we are talking about a Snowflake user who installs the app from the Snowflake Marketplace or private listing into his account, but also about the provider/developer who wants to test the installed or updated application.

App Structure & Preliminaries

The file structure of the project for the lunch planner application is presented below.

In this blog post, we will only present the parts of the files that are relevant to the components of the lunch planner app. To see the complete project, you can visit this repository.

|

1 2 3 4 5 6 7 8 9 10 11 12 13 14 |

/blog-snowflake-native-apps /app /scripts/ setup_script.sql /src /libraries/ external_api.py init.py /streamlit/ environment.yml lunch_planner.py manifest.yml /scripts/ sf_upload.py |

Before setting up the application, some preliminary steps are required.

Set Streamlit Version of Lunch Planner App

The Snowflake Native App Framework uses version 1.22.0 if no versions are specified by the developer. For a more structured visualization in Streamlit data editor columns, e.g. replacing URLs with clickable placeholders, it is necessary to use a newer version of Streamlit. Therefore, we will use the most recent Streamlit version supported, which is 1.31.1 at the time of writing (you can find all supported Streamlit versions on the documentation site).

To add additional Python packages or to manage package versions, a file named environment.yml should be used in the Snowflake Native App. This file must be created at the same level as the main file of the Streamlit app (lunch_planner.py).

In the environment.yml file, the name and channels properties are required, and the channels property must contain the entry snowflake to indicate the Snowflake Anaconda Channel.

|

1 2 3 4 5 6 7 8 9 |

name: sf_env channels: - snowflake dependencies: - streamlit=1.31.1 |

Generate ID and Key for the API call

To call the Recipe API of EDAMAM considered in our use case, we first need to generate an application ID and key on the EDAMAM website.

Create a free account, log in, and navigate to “Accounts“ -> “EDAMAM APIs“ -> “Go to Dashboard“ -> “Application Tab“. In the “Application Tab“, you can create a new application by clicking on “Create new application“ and selecting “Recipe Search API“. After creating a new application, you can find your application ID and key by navigating through “View“ -> “Application ID, Application Keys“.

The generated application ID and key will be used to set up the component for recipe suggestions in the lunch planner app.

Uploading code files to Snowflake

For uploading the code files of the lunch planner project into your Snowflake account you can just follow the project setup instructions of the README file. Here, the sf_upload.py file ensures that the files inside the app directory of the project are uploaded into the correct stage inside the Snowflake account.

Alternatively, you can also use a repository stage to integrate the lunch planner repository with Snowflake.

Accessing Data of the Provider from the Snowflake Native App

In this section, we will explain how to access data within the application package created by the provider, which is the simplest type of data access in Snowflake Native Apps.

Initially, a schema and corresponding table need to be created inside the application package.

In the example below, you can see that a schema named shared_data is created, containing a table named ingredients with sample entries.

As already mentioned, this table will be used by the application user to select ingredients to be excluded from the recipe suggestions during the API call. The OTHER_NAME column of the table is used to store alternative names of an ingredient (comma-separated) and will also be considered for the exclusion of ingredients.

After creating the schema and the table, you need to grant privileges to share this data with the app. You can achieve this by simply granting usage privileges on the schema to share and by granting select privileges on the table to share in the application package.

|

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 |

SET APP_PACKAGE = 'LUNCH_PLANNER_PACKAGE'; USE APPLICATION PACKAGE IDENTIFIER($APP_PACKAGE); CREATE SCHEMA IF NOT EXISTS shared_data; USE SCHEMA shared_data; CREATE TABLE IF NOT EXISTS ingredients (NAME VARCHAR, OTHER_NAMES VARCHAR); TRUNCATE TABLE ingredients; INSERT INTO ingredients VALUES ('Milk', ''), ('Cheese', ''), ('Egg', 'Eggs, Egg Whites, Yolk, Yolks'), ('Butter', ''); -- grant usage on the ``ingredients`` table GRANT USAGE ON SCHEMA shared_data TO SHARE IN APPLICATION PACKAGE IDENTIFIER($APP_PACKAGE); GRANT SELECT ON TABLE ingredients TO SHARE IN APPLICATION PACKAGE IDENTIFIER($APP_PACKAGE); |

After creating the data within the application package and granting privileges, a view is added that allows the consumer to access the data.

While consumers could select directly from the table, it’s better practice to use a view, as demonstrated in this application

Thus, a view with the name ingredients_view is created here in a versioned schema named code_schema. The Snowflake Native App Framework uses a versioned schema to handle different versions of schema objects like stored procedures and functions (you can find more detailed information on how versions and upgrades are managed here). After creating the view, select privilege is granted to the application role app_public.

In the code snippet below, you can see all the necessary commands to be added to the setup_script.sql file for creating the view and granting select privileges to the application role.

|

1 2 3 4 5 6 7 8 9 |

CREATE APPLICATION ROLE IF NOT EXISTS app_public; CREATE OR ALTER VERSIONED SCHEMA code_schema; GRANT USAGE ON SCHEMA code_schema TO APPLICATION ROLE app_public; CREATE VIEW IF NOT EXISTS code_schema.ingredients_view AS SELECT NAME, OTHER_NAMES FROM shared_data.ingredients; GRANT SELECT ON VIEW code_schema.ingredients_view TO APPLICATION ROLE app_public; |

After updating the application package and the application objects, the data can be accessed in the Streamlit app using the command:

|

1 |

SELECT * FROM code_schema.ingredients_view |

The data can then be visualized using Streamlit elements like dataframes or data editors (in the visualization below an additional column Exclude was added to the dataframe for selecting ingredients to be excluded when getting the recipe suggestions).

Accessing Data of the Consumer from the Snowflake Native App

In a Snowflake Native App, it is also possible to access data content in a consumer account, which exists outside the application object. To allow the Snowflake Native App to access this data content, the consumer needs to grant privileges or authorize access.

In this example lunch planner app, we will first create in a consumer account a table named lunch_plan inside the test_schema of the LUNCH_PLANNER_DB database. As already mentioned, it is not necessary to have two different Snowflake accounts, since you could also just use the provider account where the app was developed as a consumer account for testing the application. The table contains an example entry of a recipe with its name, image, and URL to the detailed description, calories, and the total cooking time needed for this recipe. The table will be used by the application user to store recipes received from the Recipes Search API call, explained in a later section.

|

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 |

SET SOURCE_DATABASE = 'LUNCH_PLANNER_DB'; SET SOURCE_SCHEMA = CONCAT($SOURCE_DATABASE, '.TEST_SCHEMA'); CREATE OR REPLACE DATABASE IDENTIFIER($SOURCE_DATABASE); USE IDENTIFIER($SOURCE_DATABASE); CREATE OR REPLACE SCHEMA test_schema; USE SCHEMA IDENTIFIER($SOURCE_SCHEMA); CREATE TABLE IF NOT EXISTS lunch_plan (label VARCHAR, image VARCHAR, url VARCHAR, calories DOUBLE, totalTime INT); TRUNCATE TABLE lunch_plan; INSERT INTO lunch_plan VALUES ('Cauliflower soup', 'https://images.immediate.co.uk/production/volatile/sites/30/2020/08/cauliflower_soup-877eac5.jpg?quality=90&webp=true&resize=300,272', 'https://www.bbcgoodfood.com/recipes/cauliflower-soup', 176.0, 40); |

Now, after creating the table on the consumer side, one could assume that granting access to the table to the application object would be sufficient. However, the issue is that the app is not able to determine the name of the schema and the table (object) in the consumer account. Instead, the application package provides a stored procedure for adding references to a specific object inside the consumer account.

To achieve this behavior, a reference needs to be defined first in the manifest.yml file.

The example below defines a reference named lunch_plan_table that requires SELECT, INSERT, UPDATE, and DELETE privileges on a table in the consumer account. With the register_callback property, we define the stored procedure that will be used to bind a consumer table to the reference to make it accessible for our application. Since we are binding only one consumer object (table), the multi_valued property is set to false (to bind multiple consumer objects to the same reference, the property can be set to true).

|

1 2 3 4 5 6 7 8 9 10 11 12 |

references: - lunch_plan_table: label: "Source Table" description: "Table to be used in app" privileges: - SELECT - INSERT - UPDATE - DELETE object_type: Table multi_valued: false register_callback: code_schema.update_reference |

The stored procedure defined in the manifest.yml file needs to be created using the setup_script.sql file, which will be executed during the installation of the application. The stored procedure gets three different arguments and can be used to add, remove, or clear all references in a consumer account. The first and third arguments are used to pass a specific reference name and a reference to a specific object. These are considered during adding or removing references in the consumer account. The second argument decides whether a reference should be added or removed, or whether all references in a consumer account should be cleared. After creating the procedure, it is also necessary to grant usage privileges on the procedure to the application role.

|

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 |

CREATE OR REPLACE PROCEDURE code_schema.update_reference(ref_name string, operation string, ref_or_alias string) RETURNS STRING LANGUAGE SQL AS $$ BEGIN CASE (operation) WHEN 'ADD' THEN SELECT SYSTEM$SET_REFERENCE(:ref_name, :ref_or_alias); WHEN 'REMOVE' THEN SELECT SYSTEM$REMOVE_REFERENCE(:ref_name, :ref_or_alias); WHEN 'CLEAR' THEN SELECT SYSTEM$REMOVE_ALL_REFERENCES(); ELSE RETURN 'Unknown operation: ' || operation; END CASE; RETURN 'Success'; END; $$; GRANT USAGE ON PROCEDURE code_schema.update_reference(string, string, string) TO APPLICATION ROLE app_public; |

Now, on the consumer side, the stored procedure can be used to bind the lunch_plan table created at the beginning of this section to the application.

To bind the table, the first argument should be the name of the reference, which we defined in the manifest.yml file. For the third argument, the SYSTEM$REFERENCE system function with information about the lunch_plan table can be used. This function returns a reference to an object (a table, view or function) and if SQL actions are executed on a reference to an object, the actions are performed using the role of the user who created the reference. For the second argument of the stored procedure, we are considering the ADD operator, since we want to add a reference to the consumer account.

|

1 2 |

-- Bind your data to the app --> allows app to select from table CALL code_schema.update_reference('lunch_plan_table', 'ADD', SYSTEM$REFERENCE('table', LUNCH_PLANNER_DB.TEST_SCHEMA.LUNCH_PLAN, 'PERSISTENT', 'SELECT', 'INSERT', 'UPDATE', 'DELETE')); |

After binding the lunch_plan table of the consumer account to the application, the table data can be accessed from the application by using a select command with the reference name inside the lunch planner Streamlit app:

|

1 |

SELECT * FROM reference('lunch_plan_table') |

Again, the results can be visualized using a Streamlit dataframe or data editor, as represented in the image below:

The Streamlit app of the demo Snowflake Native App also supports functionalities for inserting data into the consumer table. Since during defining references and binding the consumer table, the privileges SELECT, INSERT, UPDATE, and DELETE were also considered, the lunch planner app is also able to execute these operations.

To insert data into the consumer table, similar to the select command, the reference name can be used.

|

1 2 |

INSERT INTO reference('lunch_plan_table') VALUES ('example_label', 'example_image', 'example_url', 42.22, 42) |

In the example below, an entry for the recipe „Lamb Sirloin Roast“ was added to the lunch_plan consumer table and is visualized in the Streamlit data editor element.

For deleting entries from the consumer table, the following example command can be executed, which will delete the second entry of the table represented in the image above (in the actual lunch planner app, it is possible to select recipes to be deleted in the checkbox of the remove column of the Streamlit data editor).

|

1 |

DELETE FROM reference('lunch_plan_table') WHERE LABEL in ('Lamb Sirloin Roast') |

Accessing External APIs from the Snowflake Native App

In Snowflake, it is possible to call external APIs for accessing external data through the support of external network access functionality. This functionality can also be applied in the Snowflake Native App Framework to call external APIs within a Snowflake Native App.

Before making API calls inside a Native App, it is necessary to grant permission to the Snowflake account to access the domain of the external API. In our lunch planner app, we are using the Recipe Search API of EDAMAM, so we need to grant permission to the Snowflake consumer account to access the domain of EDAMAM.

First of all, a network rule needs to be created, which includes a list of domains of the API that need to be accessed. The EGRESS mode indicates that the network rule will be used with an external access integration to restrict outgoing requests. Thus, we need to create an external access integration that includes the created network rule in the ALLOWED_NETWORK_RULES list. Optionally, a list of ALLOWED_AUTHENTICATION_SECRETS can be passed to the external access integration. However, since our example API does not require a secret for authentication, the external access integration is created without a list of secrets.

|

1 2 3 4 5 6 7 8 9 |

-- Create external access to api CREATE OR REPLACE NETWORK RULE edamam_apis_network_rule MODE = EGRESS TYPE = HOST_PORT VALUE_LIST = ('api.edamam.com'); CREATE OR REPLACE EXTERNAL ACCESS INTEGRATION edamam_external_access_integration ALLOWED_NETWORK_RULES = (edamam_apis_network_rule) ENABLED = true; |

On the provider side, the developer of the application needs to implement the Python function init_app to initialize the Python function get_random_recipes used for the external API call.

Here, the init_app function just sets the EXTERNAL_ACCESS_INTEGRATION parameter of the external API function (if you also created a secret, you can additionally set the SECRETS parameter of the API function). Setting the EXTERNAL_ACCESS_INTEGRATION parameter of the API function is necessary to give access to the external API domain.

|

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 |

from snowflake.snowpark import Session def init_app(session: Session, config: dict) -> str: """ Initializes function API endpoints with access to the secret and API integration. Args: session (Session): An active session object for authentication and communication. config (Any): The configuration settings for the connector. Returns: str: A status message indicating the result of the provisioning process. """ external_access_integration_name = config['external_access_integration_name'] alter_function_sql = f''' ALTER FUNCTION code_schema.get_random_recipes(string) SET EXTERNAL_ACCESS_INTEGRATIONS = ({external_access_integration_name})''' session.sql(alter_function_sql).collect() return 'Snowflake translation app initialized' |

Then we need the Python function get_random_recipes for executing the Recipe Search API for getting recipe suggestions.

The function receives as a parameter a string containing ingredients that should be excluded from the recipe suggestions. Different ingredients are separated with a comma in the string, and the API will return only recipes that do not contain any of the ingredients. After calling the API with the required parameter, a random list of recipes is returned as a response, and the Python function returns the API response text.

|

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 |

import requests BASE_URL = "https://api.edamam.com/api/recipes/v2" APP_ID = " APP_KEY = " FIELD_LIST = ["label", "image", "url", "calories", "totalTime"] def get_random_recipes(excluded: str) -> str: excluded_list = excluded.split(", ") params = { "type": "public", "app_id": APP_ID, "app_key": APP_KEY, "excluded": excluded_list, "random": "true", "field": FIELD_LIST } headers = { "accept": "application/json", "Accept-Language": "en" } response_txt = requests.get(BASE_URL, params=params, headers=headers).text return response_txt |

Then, we need to include the definition of the init_app stored procedure and the get_random_recipes function inside the setup_script.sql file by mentioning the Python file and the function where the procedure or the function was implemented. After including the definitions, we also need to grant usage privileges on the stored procedure and the function to the defined application role (so that the stored procedure and the function can be called inside the consumer account).

|

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 |

CREATE OR REPLACE PROCEDURE code_schema.init_app(config variant) RETURNS string LANGUAGE python runtime_version = '3.10' packages = ('snowflake-snowpark-python', 'requests', 'simplejson') imports = ('/src/libraries/init.py') handler = 'init.init_app'; GRANT USAGE ON PROCEDURE code_schema.init_app(variant) TO APPLICATION ROLE app_public; -- Add an external python module CREATE or REPLACE FUNCTION code_schema.get_random_recipes(excluded STRING) RETURNS STRING LANGUAGE PYTHON RUNTIME_VERSION=3.10 PACKAGES = ('requests') IMPORTS = ('/src/libraries/external_api.py') HANDLER='external_api.get_random_recipes'; GRANT USAGE ON FUNCTION code_schema.get_random_recipes(STRING) TO APPLICATION ROLE app_public; |

In the consumer account, we also need to grant usage on the external access integration to the installed application.

|

1 2 3 |

SET APP_NAME = 'LUNCH_PLANNER_APP'; GRANT USAGE ON INTEGRATION edamam_external_access_integration TO APPLICATION IDENTIFIER($APP_NAME); |

Now the init_app stored procedure needs to be called inside the consumer account by passing the created external access integration as an argument so that the external access integration parameter of the API function is correctly set.

|

1 2 3 4 |

-- Initialize app procedure to allow some functions external access to external APIs CALL code_schema.init_app(PARSE_JSON('{ "external_access_integration_name": "edamam_external_access_integration", }')); |

Finally, the Recipe Search API function can be called by using the following command with some example ingredients to be excluded inside the lunch planner Steamlit app:

|

1 |

SELECT lunch_planner_app.code_schema.get_random_recipes('Egg, Butter, Eggs, Egg Whites, Yolk, Yolks') |

The response text of the API call returned as JSON can be converted into a Pandas dataframe in the lunch planner Streamlit App and can be visualized in a Streamlit dataframe or data editor.

The image below shows example entries of a response converted into a Pandas dataframe and visualized in the Streamlit data editor:

Publishing our Lunch Planner Native App

Publishing an application on the Snowflake Marketplace involves a few essential steps to ensure your app is available and easily accessible by consumers. First, you need to prepare and test your application package to make sure it is working correctly. Secondly, you need to set up roles and privileges properly within your Snowflake account. The role that attaches a data product to a listing and publishes the listing must be the same role that created and owns the application package.

After you have completed the prerequisites to publish a listing for an application package, you need to set the default release directive for the application package using SQL. Additionally, if you want to publish a listing for an application package to an account outside of your organization, your application package needs to pass an automated security scan.

Next, decide whether you want to create a private listing for specific consumers or a public listing available to anyone in the Snowflake Marketplace. For a private listing, you’ll create the listing in Snowsight, select specific consumers to share it with, and configure any necessary auto-fulfillment settings if the consumers are in different regions.

For a public listing, it is additionally required to create a provider profile with complete information about your company. If you haven’t created a provider profile for your account yet, it is possible to submit it at the creation of the listing for your application package. After navigating Snowsight to Data Products -> Provider Studio and selecting the Listing tab, you will see the window as displayed in the image below. To submit your provider profile, you just need to click on the “submit a provider profile“ text and complete all required information about your company. Then, you need to follow a process similar to the private listing. The only difference is that you need to make the listing visible to the entire Marketplace and to set up a pricing plan if you want to monetize the app.

After creating your listing, you’ll need to submit it for approval in case it should be public. Snowflake will review the listing to ensure it meets its standards, and you’ll receive a notification as soon as it is approved or if any changes are required. Once approved, you can publish the listing on the Marketplace, making your application available to a broader audience.

Finally, in the case of public listings, you can create a referral link to promote your app directly to potential users. Following these steps will ensure that your application is published successfully and that it is accessible in the Snowflake Marketplace.

Conclusion

In this blog post, we presented a practical use case with a lunch planner app based on the Snowflake Native App Framework. With the Snowflake Native App Framework, developers can create an application package to encapsulate data content and application logic and share it with other Snowflake accounts through the Snowflake Marketplace or a private listing. The Framework also offers a better experience for developers by providing an integrated testing environment where applications can be tested with a single account after installing or updating applications.

With our lunch planner demo application, we demonstrated how Snowflake Native Apps can access data content from different locations. This way, you can access data inside the application package of the Snowflake Native App or to access data of a consumer account outside the application package. Furthermore, Snowflake Native Apps supports accessing external data through API calls.

By combining these data access methods, we can create complex applications like the lunch planner app. This app helps users plan meals by accessing ingredients and recipe suggestions, and it stores recipes in a database table within an individual Snowflake account.

Finally, you can use the instructions in the blog post and the demo lunch planner app repository as a starting point for building your application based on the Snowflake Native App Framework and sharing it with other Snowflake users.

References and useful links

- About the Snowflake Native App Framework: https://docs.snowflake.com/en/developer-guide/native-apps/native-apps-about

- Snowflake Native App Framework Tutorial: Develop an App: https://docs.snowflake.com/en/developer-guide/native-apps/tutorials/getting-started-tutorial

- Request access to objects in a consumer account: https://docs.snowflake.com/en/developer-guide/native-apps/requesting-about

- External network access examples: https://docs.snowflake.com/en/developer-guide/external-network-access/external-network-access-examples

- Use case – Streamlit in Snowflake: https://www.inovex.de/de/blog/next-stop-insights-how-streamlit-and-snowflake-power-up-deutsche-bahn-data-adventures/

- Add frontend experience with Streamlit: https://docs.snowflake.com/en/developer-guide/native-apps/adding-streamlit#label-streamlit-unsupported-features-na

- Streamlit data editor: https://docs.streamlit.io/1.31.0/develop/api-reference/data/st.data_editor#stdata_editor