Notice:

This post is older than 5 years – the content might be outdated.

With Kubernetes 1.13, the Container Storage Interface (CSI) joined its Network (CNI) and Runtime (CRI) cousins in the effort to make Kubernetes storage more easily extensible. It allows external storage vendors to maintain their own storage drivers independently of the Kubernetes source tree and release cycle. Since its introduction, the Kubernetes team has been implementing more storage-related features on top of the CSI, which are now starting to become available. This article gives an overview of these emerging features.

Recap: Kubernetes Storage with CSI

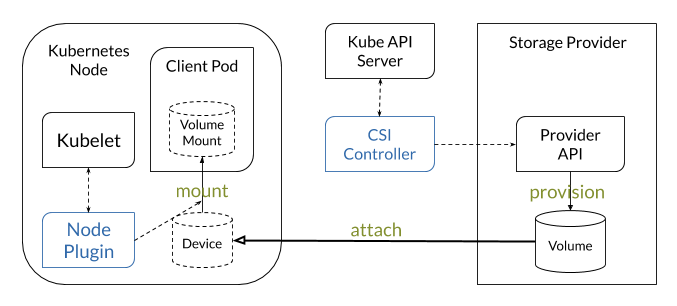

The CSI is a platform-agnostic specification, meaning that the same storage driver implementation can be used to provide storage volumes in any container orchestration platform that conforms to it. Usually the driver consists of a controller plugin, responsible for communicating with the storage provider API for the provisioning of volumes and attaching them to nodes, and a node plugin, responsible for mounting these volumes into containers on that node.

The Kubernetes implementation of the CSI consists mostly of a handful of generic sidecar containers that translate between the Kubernetes API and the CSI endpoint of the controller plugin running in the same pod. The kubelet in turn speaks directly to the node plugin when a pod requests a CSI volume to be mounted. Thus installing a CSI driver in Kubernetes is as easy as creating a multi-container deployment for the controller plugin and installing a daemon set for the node plugin. No further configuration of the core Kubernetes components is needed.
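To make the controller side of this layout more concrete, here is a rough sketch of such a multi-container deployment. It is not taken from any particular driver: the names `example-csi-controller` and `example.com/example-csi-driver`, the `<version>` tags and the socket path are assumptions for illustration, and a real driver ships its own manifests including the required RBAC rules.

```yaml
# Sketch only: all "example" names, the <version> tags and the socket path are placeholders.
apiVersion: apps/v1
kind: Deployment
metadata:
  name: example-csi-controller
spec:
  replicas: 1
  selector:
    matchLabels:
      app: example-csi-controller
  template:
    metadata:
      labels:
        app: example-csi-controller
    spec:
      serviceAccountName: example-csi-controller  # RBAC objects not shown
      containers:
        # Generic sidecar: watches PVCs and asks the driver to create volumes.
        - name: csi-provisioner
          image: "registry.k8s.io/sig-storage/csi-provisioner:<version>"
          args: ["--csi-address=/csi/csi.sock"]
          volumeMounts:
            - name: socket-dir
              mountPath: /csi
        # Generic sidecar: watches VolumeAttachments and asks the driver to attach volumes.
        - name: csi-attacher
          image: "registry.k8s.io/sig-storage/csi-attacher:<version>"
          args: ["--csi-address=/csi/csi.sock"]
          volumeMounts:
            - name: socket-dir
              mountPath: /csi
        # Vendor-specific controller plugin serving the CSI on the shared socket.
        - name: example-driver
          image: "example.com/example-csi-driver:<version>"
          volumeMounts:
            - name: socket-dir
              mountPath: /csi
      volumes:
        # The sidecars and the plugin talk over a Unix socket in this shared directory.
        - name: socket-dir
          emptyDir: {}
```

The node plugin would be deployed in a similar fashion as a DaemonSet, so that one instance runs on every node where volumes need to be mounted.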

From a user perspective, creating a persistent volume with CSI consists of simply referencing the CSI driver in the volume spec. Using dynamic provisioning via a StorageClass resource is the recommended way, though. As the PersistentVolumeClaim only references the StorageClass and the application only needs the PVC name, the usage of CSI can be completely transparent to the application developer.
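As a minimal sketch of dynamic provisioning, the manifests below define a StorageClass for the EBS CSI driver, a claim against it and a pod using that claim. The object names, the volume size, the container image and the `volumeBindingMode` setting are assumptions chosen for illustration.

```yaml
# Only the StorageClass mentions the CSI driver.
apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: ebs-csi
provisioner: ebs.csi.aws.com
volumeBindingMode: WaitForFirstConsumer  # assumption: provision once a pod is scheduled
---
# The claim only references the StorageClass by name.
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: ebs-data
spec:
  storageClassName: ebs-csi
  accessModes:
    - ReadWriteOnce
  resources:
    requests:
      storage: 10Gi
---
# The pod only needs the name of the claim.
apiVersion: v1
kind: Pod
metadata:
  name: app
spec:
  containers:
    - name: app
      image: busybox:1.36
      command: ["sleep", "3600"]
      volumeMounts:
        - name: data
          mountPath: /data
  volumes:
    - name: data
      persistentVolumeClaim:
        claimName: ebs-data
```

Nothing in the pod or the claim reveals which storage backend is in use, which is the transparency referred to above.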

See this blog article for more detail on the CSI in Kubernetes. There is also a non-comprehensive list of CSI drivers detailing which of the following features each implements.

Ephemeral Inline Volumes

The storage providers using the CSI mainly provide persistent storage, and thus most drivers support only persistent volumes. In some cases, though, it may be useful to use a CSI volume as an inline volume in a pod spec. Since these volumes are bound to the lifetime of the pod, they are called "ephemeral". We already know volume types like "emptyDir", "configMap" and "hostPath", which are the bread and butter of pod design.

The CSIInlineVolume feature, which has been enabled by default since version 1.16, adds this possibility, provided the driver supports it. Unfortunately, it requires the driver to add functionality not covered by the CSI in order to cut the lifecycle of a CSI volume short.

From a user perspective, the CSI driver and its parameters need to be added to the volumes section of the pod spec, which loses the nice transparency of StorageClasses. Not many drivers support ephemeral volumes. The one I find most interesting is the ImagePopulator, which allows mounting a container image as an ephemeral volume.
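A pod with an inline CSI volume might look roughly like the following sketch. The driver name `inline.example.com` and the key under `volumeAttributes` are hypothetical placeholders, since the accepted attributes are defined by each driver.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: inline-volume-demo
spec:
  containers:
    - name: app
      image: busybox:1.36
      command: ["sleep", "3600"]
      volumeMounts:
        - name: scratch
          mountPath: /data
  volumes:
    - name: scratch
      csi:
        driver: inline.example.com    # hypothetical driver name
        volumeAttributes:             # driver-specific, passed through as-is
          exampleKey: exampleValue    # hypothetical attribute
```

The volume is created when the pod starts and removed together with the pod, and no StorageClass, PVC or PV is involved.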

See this blog article for more information about this feature. It also mentions some hardware-dependent use cases.

Volume Cloning

The VolumePVCDataSource feature has been enabled by default since version 1.16 and reached general availability in 1.18. It allows specifying another PersistentVolumeClaim as a data source when creating a volume with a CSI driver. The new volume is then pre-populated with the content of the PersistentVolume bound to the source PVC, effectively cloning that volume.

Only PVCs that are currently not mounted by any pod can be used as a data source. Not many drivers support cloning. Apart from possible limitations on the storage provider side, I think the reason may be that the same result can also be achieved with the snapshot feature.
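A clone request could be sketched as follows. The names `example-csi`, `source-data` and `cloned-data` are assumptions for illustration. The source PVC has to live in the same namespace as the new claim, and the driver behind the StorageClass has to support cloning.

```yaml
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: cloned-data
spec:
  storageClassName: example-csi   # hypothetical class backed by a driver with clone support
  accessModes:
    - ReadWriteOnce
  resources:
    requests:
      storage: 10Gi               # must be at least the size of the source volume
  dataSource:
    kind: PersistentVolumeClaim   # no apiGroup needed for core resources
    name: source-data             # existing PVC in the same namespace
```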

See the documentation for further details.

Volume Snapshots

Volume snapshotting is a feature that many storage providers offer. This new Kubernetes feature allows for snapshots to be managed through the Kubernetes API just like volumes are. The feature reached beta status in version 1.17 and is thus enabled by default.

The snapshotting implementation includes these three components:

- Custom Resource Definitions that represent snapshots in the Kubernetes API.

- A generic snapshot controller that handles events from these CRDs.

- A CSI driver that implements the snapshot-related functions of the CSI.

The API objects for snapshots have been fashioned after the objects for dynamic provisioning of persistent volumes and are used in much the same way.

| Snapshots | Volumes |
| --- | --- |
| VolumeSnapshotClass | StorageClass |
| Defines the driver for the snapshot and its deletion policy. | Defines the driver for the volume, its type and its deletion policy. |
| VolumeSnapshot | PersistentVolumeClaim |
| Requests a snapshot of a certain PVC using a specified VolumeSnapshotClass. Will be bound to a VolumeSnapshotContent resource. | Requests a volume of a certain size using a specified StorageClass. Will be bound to a PersistentVolume resource. |
| VolumeSnapshotContent | PersistentVolume |
| The representation of the actual snapshot object at the storage provider. Bound to a VolumeSnapshot resource. | The representation of the actual volume object at the storage provider. Bound to a PersistentVolumeClaim resource. |

So by simply creating a VolumeSnapshot object, the developer triggers the creation of a snapshot at the storage provider. Deleting the API object will also delete the snapshot at the storage provider (depending on the deletion policy).

```yaml
apiVersion: snapshot.storage.k8s.io/v1beta1
kind: VolumeSnapshotClass
metadata:
  name: ebs-csi
driver: ebs.csi.aws.com
deletionPolicy: Delete
---
apiVersion: snapshot.storage.k8s.io/v1beta1
kind: VolumeSnapshot
metadata:
  name: ebs-csi-backup
spec:
  volumeSnapshotClassName: ebs-csi
  source:
    persistentVolumeClaimName: ebs-data
```

Snapshots are often used as a backup method. Restoring a volume from a backup is achieved with the VolumeSnapshotDataSource feature, which has been enabled by default since version 1.17. It allows referencing a VolumeSnapshot resource as a data source in a PersistentVolumeClaim object. This will create a new volume from the specified snapshot.

```yaml
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: ebs-restored-data
spec:
  storageClassName: ebs-csi
  accessModes:
    - ReadWriteOnce
  volumeMode: Filesystem
  resources:
    requests:
      storage: 10Gi # Must be equal to or larger than the snapshot size.
  dataSource:
    name: ebs-csi-backup
    kind: VolumeSnapshot
    apiGroup: snapshot.storage.k8s.io
```

For more information see the official snapshot documentation and also this blog article.

CSI Migration

With all this CSI goodness, we should not forget the old in-tree storage plugins. With their CSI counterparts reaching maturity, they will become obsolete and are slated to be removed around version 1.21. That is when the following types of manifests will stop working:

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: test-ebs
spec:
  # (...)
  volumes:
    - name: test-volume
      awsElasticBlockStore:
        volumeID:
---
apiVersion: v1
kind: PersistentVolume
metadata:
  name: pv0003
spec:
  # (...)
  awsElasticBlockStore:
    volumeID:
```

As we saw in the section on ephemeral inline volumes, inline CSI volumes depend on special driver support, so there is no direct replacement for the first manifest (the Pod) above. It will have to be migrated to use a PVC. The PersistentVolume below it can be migrated by specifying the EBS CSI driver instead.

```yaml
apiVersion: v1
kind: PersistentVolume
metadata:
  name: pv0003
spec:
  # (...)
  csi:
    driver: ebs.csi.aws.com
    volumeHandle:
```

For StorageClass resources the migration is much easier: it should be enough to replace the value of the provisioner attribute with the name of the CSI driver, e.g. `provisioner: kubernetes.io/aws-ebs` becomes `provisioner: ebs.csi.aws.com`. The other parameters should remain the same.
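As a sketch of this change, here is a StorageClass before and after the migration. The object names and the `type: gp2` parameter are assumptions for illustration.

```yaml
# Before: StorageClass using the in-tree EBS plugin.
apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: ebs-in-tree
provisioner: kubernetes.io/aws-ebs
parameters:
  type: gp2
---
# After: the same class served by the EBS CSI driver.
apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: ebs-csi
provisioner: ebs.csi.aws.com
parameters:
  type: gp2
```

Note that the provisioner of an existing StorageClass cannot be changed in place. In practice this means creating a new StorageClass, as sketched here, or deleting and recreating the old one under the same name.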

To assist in a smooth transition, cluster administrators would do well to install the new CSI driver alongside the old in-tree driver while it is still available. That way, application developers can migrate their manifests step by step. This is supported by the CSIMigration feature, which has been enabled by default since version 1.17. It redirects all calls directed at the in-tree storage plugins to the corresponding CSI plugin, if available. Otherwise it falls back to the in-tree plugin. This allows cluster administrators to roll out and test the CSI driver on a subset of nodes and roll back if desired.

The CSIMigration feature needs to be enabled for the specific storage provider, though. As of 1.17 there are CSIMigrationAWS (beta), CSIMigrationGCE (beta), CSIMigrationAzureDisk (alpha), CSIMigrationAzureFile (alpha) and CSIMigrationOpenStack (alpha). See the feature gates documentation for details.
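On a 1.17-era cluster, enabling the migration for AWS on a node could look like the following kubelet configuration fragment. This is a sketch under the assumption that the kubelet is started with a configuration file. The same gates also have to be set on the kube-controller-manager, for example via `--feature-gates=CSIMigration=true,CSIMigrationAWS=true`.

```yaml
# Fragment of the file passed to the kubelet via --config.
apiVersion: kubelet.config.k8s.io/v1beta1
kind: KubeletConfiguration
featureGates:
  CSIMigration: true      # general switch for the migration mechanism
  CSIMigrationAWS: true   # provider-specific switch for the in-tree EBS plugin
```

Newer Kubernetes releases no longer have these gates, so check the feature gates documentation for the version in use before applying such a fragment.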

What a ride!

This has been a lot of information and I hope this little run through the Kubernetes storage world has not left your head spinning like an old hard disk. Thanks for reading! For further information, please check out our Kubernetes offerings.