Notice:

This post is older than 5 years – the content might be outdated.

CosmosDB is a globally distributed database in the Azure universe. In this blog post we’ll look at three ways to access it: the SDK, Entity Framework Core and the MongoDB API.

A brief overview of CosmosDB

CosmosDB, formerly known as DocumentDB, is Microsoft’s take on a globally distributed database that automatically manages scaling and data consistency. In times of big data and the cloud, getting these features without investing development time is very welcome. As a NoSQL [1] database it organizes data into databases and collections, a structure familiar from other NoSQL databases such as MongoDB.

For a developer the interesting bit (aside from its big feature set) is that the database can be accessed through multiple APIs. These include:

- SQL

- MongoDB

- Cassandra

- Azure Table

- Gremlin

Today we are going to look at the SQL and MongoDB approach before using the preview of the CosmosDB Entity Framework Core Provider.

Developing against CosmosDB with .NET Core

To get a feeling for which API suits us best we are going to compare the different APIs by using .NET Core. Meet the contenders:

Microsoft Azure Cosmos DB .NET SDK

The Microsoft Azure Cosmos DB .NET SDK [2] is currently available in version 2, with version 3 in preview. As the primary SDK it comes with a specialized feature set tailored for use with CosmosDB. I am expecting a fairly low-level approach to interacting with documents and collections.

Entity Framework Core

Entity Framework Core is an ORM developed by Microsoft specifically for use with .NET Core. As it happens a CosmosDB Provider is in preview and has been teased by Microsoft in some of their blogs [3]. EFCore promises to be a high-level abstraction over the database layer which would reduce the amount of code that has to be maintained in a given project.

MongoDB

CosmosDB provides MongoDB Wire Protocol Support [4]. This means that we should be able to use an existing MongoDB driver to talk to CosmosDB. Since this is a native way to use the NoSQL features, and years of development time have been invested in the MongoDB libraries, I hope for a very smooth experience. The library we are using is the official MongoDB Driver for C# [6].

Getting Started

For a quick-start on how to get a CosmosDB up and running in Azure please see Microsoft’s documentation [5]. It should be noted that you have to decide at creation time which API you want to use with the CosmosDB.

With a CosmosDB running in the cloud, we will now explore the different APIs and how they work with fairly simple tasks. First of all we want to be able to connect to CosmosDB, create a database, create a collection and do basic read/write operations.

Connecting to CosmosDB

The CosmosDB SDK does this in a straightforward way, using the endpoint URI and the master (or read-only) key, both of which are generated when the CosmosDB is created.

```csharp
var client = new DocumentClient(_endpoint, _key);
```

Entity Framework Core uses a context which defines how to connect to the underlying database. In this example I am using the OnConfiguring method to provide the endpoint as well as the key.

```csharp
protected override void OnConfiguring(DbContextOptionsBuilder optionsBuilder)
{
    base.OnConfiguring(optionsBuilder);

    ...

    optionsBuilder.UseCosmos(endpoint.ToString(), key, _options.Value.DatabaseId);
}
```

It should be noted that we already have to specify the database at this point.

Unlike the SDK and EFCore, MongoDB uses a connection string, which can easily be found on the web portal. Connecting is just as easy as with the other two options.

```csharp
var mongoClient = new MongoClient(options.Value.ConnectionString);
```

Creating a Database and Collection

Creating a database might not be necessary when using existing infrastructure. But more often than not (especially during development) one has to set up a database quickly on a new instance. This section looks at the code necessary to do so. We will go into more depth about local development later. One detail we want to keep an eye on is whether and how we can specify the throughput, measured in Request Units per second (RU/s), that the database or collection uses.

Let’s start with the SDK again.

```csharp
var db = await client.CreateDatabaseAsync(
    new Database { Id = "MyDatabase" },
    new RequestOptions { OfferThroughput = 10000 });

// The response wraps the created database; Resource exposes its SelfLink
var col = await client.CreateDocumentCollectionAsync(
    db.Resource.SelfLink,
    new DocumentCollection { Id = "MyCollection" },
    new RequestOptions { OfferThroughput = 10000 });
```

Simple, clean, nice.

EFCore will create the database that we defined earlier automatically. One collection is created for each DbContext that we have. We can make sure the database and collection exist by calling EnsureCreated:

```csharp
using (var context = new OurContext())
{
    context.Database.EnsureCreated();
}
```

At the time of writing it is not possible to define the size, throughput or partition key explicitly. Instead they are set by default. In future releases this should be possible as it is part of the Azure Cosmos Task List [7].

MongoDB does not know about the pricing structure of CosmosDB and does not provide a built-in way to create a CosmosDB database or a CosmosDB collection. To still allow the client to create and manage databases in code when using the Mongo API, Microsoft added custom commands [8] which can be used to achieve the desired behaviour. A naive version of creating a database with specific throughput could look like this:

```csharp
// Create Database
var db = mongoClient.GetDatabase("MyDatabase");
var commandString = "{customAction: \"CreateDatabase\", offerThroughput: 10000}";
var command = new JsonCommand<BsonDocument>(commandString);
var response = db.RunCommand(command);

// Create Collection
commandString = "{ customAction: \"CreateCollection\", collection: \"MyCollection\", " +
                "offerThroughput: 2000, shardKey: \"_id\"}";
command = new JsonCommand<BsonDocument>(commandString);
response = db.RunCommand(command);
```

As we can see, the database name is set implicitly via the "GetDatabase" call. Other than that we can do everything we want to do, and wrapping a few commands, for example in extension methods, is very manageable.
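As a rough sketch of such a wrapper, the helper below builds the two command documents with the standard library's Utf8JsonWriter instead of hand-written strings, so that values are escaped and customAction always stays the first key (MongoDB identifies a command by its first key). The class CosmosMongoCommands and its method names are made up for this illustration; only the command fields are taken from the example above.

```csharp
using System;
using System.IO;
using System.Text;
using System.Text.Json;

// Hypothetical helper (not part of any library): builds the JSON for the
// CosmosDB custom commands shown above.
public static class CosmosMongoCommands
{
    public static string CreateDatabase(int offerThroughput) =>
        Build("CreateDatabase", w => w.WriteNumber("offerThroughput", offerThroughput));

    public static string CreateCollection(string collection, int offerThroughput, string shardKey) =>
        Build("CreateCollection", w =>
        {
            w.WriteString("collection", collection);
            w.WriteNumber("offerThroughput", offerThroughput);
            w.WriteString("shardKey", shardKey);
        });

    private static string Build(string customAction, Action<Utf8JsonWriter> writeArguments)
    {
        using var stream = new MemoryStream();
        using (var writer = new Utf8JsonWriter(stream))
        {
            writer.WriteStartObject();
            // The action name is written first, the arguments follow.
            writer.WriteString("customAction", customAction);
            writeArguments(writer);
            writer.WriteEndObject();
        } // disposing the writer flushes the JSON into the stream

        return Encoding.UTF8.GetString(stream.ToArray());
    }
}
```

The resulting string can be handed to the driver exactly as before, for example via db.RunCommand(new JsonCommand<BsonDocument>(CosmosMongoCommands.CreateCollection("MyCollection", 2000, "_id"))).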

Writing and Reading Documents

Let’s start by writing an instance of a C# class to the collection we created, beginning with the SDK again.

```csharp
// Write class as document to db
Dog pluto = new Dog("Pluto");

// As usual the Id property of a class will be filled by the database provider
var createResponse = await client.CreateDocumentAsync(
    UriFactory.CreateDocumentCollectionUri("MyDatabase", "MyCollection"), pluto);
Document plutoWithId = createResponse.Resource; // unwrap the created document

// Read the same document back
var readResponse = await client.ReadDocumentAsync<Dog>(
    UriFactory.CreateDocumentUri("MyDatabase", "MyCollection", plutoWithId.Id));
Dog plutoFromDb = readResponse.Document; // unwrap the deserialized Dog
```

Even though we have specific methods for each operation we need to build a proper URI for the database in every request. This can be encapsulated, for example with the repository pattern, but I’d rather have methods on the DocumentCollection class itself without needing to pass the database and collection names with every request.

In Entity Framework Core we use the usual pattern:

```csharp
// Somewhere inside the DbContext
public DbSet<Dog> Dogs { get; set; }

// Somewhere in code to write to the database
Dog pluto = new Dog("Pluto");
dbContext.Dogs.Add(pluto);
dbContext.SaveChanges();

// Reading from the database
var plutoFromDb = dbContext.Dogs.Find(pluto.Id);
```

In this case the benefits of using a fully grown O/RM are clearly visible. The code is very readable and easy to understand.

MongoDB for C# makes these tasks very simple as well.

```csharp
// Get collection from client. Note the difference from the SDK which used the client directly
var db = mongoClient.GetDatabase("MyDatabase");
var collection = db.GetCollection<Dog>("MyCollection");

// Now insert the object
Dog pluto = new Dog("Pluto");
collection.InsertOne(pluto);

// Now read it back
var plutoFromDb = collection.Find(x => x.Id == pluto.Id).First();
```

The example above is straightforward, but a little more verbose than the EFCore version because it does not hide the database layer from the programmer.

All approaches so far are easy to use and quick to set up. MongoDB lacks some helper utilities for creating and managing databases and collections, which is to be expected since we are not using a CosmosDB-specific protocol. The benefit here is that we can use (almost) the same code for MongoDB and CosmosDB. Entity Framework Core allows us to use any relational database as well as CosmosDB, which might be helpful, but then again, why would you mix relational and non-relational databases? Lastly, it comes as no surprise that the SDK has the most granular interface and a lot more capabilities than the other two contenders. This can be a little overwhelming depending on which specific features you want to use. (Those features are not shown here.)

Versioning

The need for versioning tends to come up when it is least expected, even though it plays no role for most of the application lifecycle. Since CosmosDB is a non-relational database it does not have a set schema, which gives us the benefit of being able to save unstructured documents. In more rigorous environments we regularly find a version property on documents saved in non-relational databases. This property can then be read and an appropriate action can be taken to migrate the document. Tricks such as never removing properties, to stay backwards compatible, are used to make this work in production.
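To make the idea of a version property more concrete, here is a minimal sketch that upgrades a document while reading it, using only System.Text.Json. The type DogDocument, the property names schemaVersion and breed, and the default value are assumptions made up for this example; they do not come from any of the three APIs.

```csharp
using System;
using System.Text.Json;

// Hypothetical document type for this illustration.
public sealed class DogDocument
{
    public const int CurrentVersion = 2;

    public int SchemaVersion { get; set; } = CurrentVersion;
    public string Name { get; set; }
    public string Breed { get; set; } // assumed to have been added in version 2
}

public static class DogMigrator
{
    // Reads a stored document of any known version and returns it in the current shape.
    public static DogDocument Read(string json)
    {
        using var doc = JsonDocument.Parse(json);
        var root = doc.RootElement;

        // Documents written before versioning was introduced carry no version property.
        int version = root.TryGetProperty("schemaVersion", out var v) ? v.GetInt32() : 1;
        if (version > DogDocument.CurrentVersion)
            throw new NotSupportedException($"Unknown schema version {version}.");

        var dog = new DogDocument { Name = root.GetProperty("name").GetString() };

        // Version 1 had no breed, so fall back to a default instead of failing.
        dog.Breed = version >= 2 && root.TryGetProperty("breed", out var b)
            ? b.GetString()
            : "unknown";

        return dog; // SchemaVersion is already CurrentVersion, so saving it persists the upgrade
    }
}
```

Migrating on read like this means old documents are upgraded lazily, one at a time, whenever they are next written back.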

The SDK as well as MongoDB can deal with unknown and missing attributes. EFCore, which was built for relational databases, does not provide a smooth experience here. Usually you would use schema migrations to handle changes in the database. This is not possible because CosmosDB does not have a schema, so other solutions and workarounds have to be used. Furthermore there are no plans to support data migrations in the current implementation of the CosmosDB provider [9].

Developing Locally

On the journey so far the SDK and the MongoDB approach differed little. EFCore was nice to use but fell short when it came to versioning (which might be due to the early preview state). So why are we checking out so many approaches to talk to CosmosDB? Developing locally!

CosmosDB comes with an emulator which can be run on Windows or Docker for Windows. That is great, but it does not help when developing microservices with a team that uses Windows, macOS and *nix operating systems. (Work on a cross-platform emulator has started recently though [10].)

The obvious solution was to use an O/RM like EFCore to be able to swap out the database underneath for local development, which sadly did not work out. This is when MongoDB became a real option. CosmosDB can already use the Mongo Wire Protocol and only adds a couple of special commands to manage databases and containers. These can be ignored when running against a local MongoDB and all is well. The gain here is great: there is no need to set up CosmosDB accounts in the cloud for each developer and try to manage those. Being able to work remotely, on a train and without access to the internet are other huge benefits.
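One way to ignore the special commands locally is to hide them behind a small interface and pick the implementation at startup. The sketch below illustrates that idea under a few assumptions: ICollectionProvisioner and both implementations are hypothetical names, and the Action<string> delegate stands in for a call such as db.RunCommand(new JsonCommand<BsonDocument>(json)) so that the example does not depend on the driver.

```csharp
using System;

// Hypothetical abstraction (not from any library): prepares a collection
// before the application starts using it.
public interface ICollectionProvisioner
{
    void EnsureCollection(string collection, int offerThroughput, string shardKey);
}

// For CosmosDB: issues the custom CreateCollection command through the delegate.
public sealed class CosmosCollectionProvisioner : ICollectionProvisioner
{
    private readonly Action<string> _runCommand;

    public CosmosCollectionProvisioner(Action<string> runCommand) =>
        _runCommand = runCommand ?? throw new ArgumentNullException(nameof(runCommand));

    public void EnsureCollection(string collection, int offerThroughput, string shardKey) =>
        // Assumes the names need no JSON escaping.
        _runCommand($"{{ customAction: \"CreateCollection\", collection: \"{collection}\", " +
                    $"offerThroughput: {offerThroughput}, shardKey: \"{shardKey}\" }}");
}

// For a local MongoDB: nothing to send, since MongoDB creates a collection
// implicitly on the first insert and has no notion of provisioned throughput.
public sealed class LocalMongoProvisioner : ICollectionProvisioner
{
    public void EnsureCollection(string collection, int offerThroughput, string shardKey) { }
}
```

Which of the two gets registered can then be decided by a configuration flag, while all reading and writing code stays identical for both environments.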

Access Control

In more traditional databases we have privileges and rights which can be granted to users. A user or service can now log in with given credentials. In this case the database checks the credentials and grants or denies access.

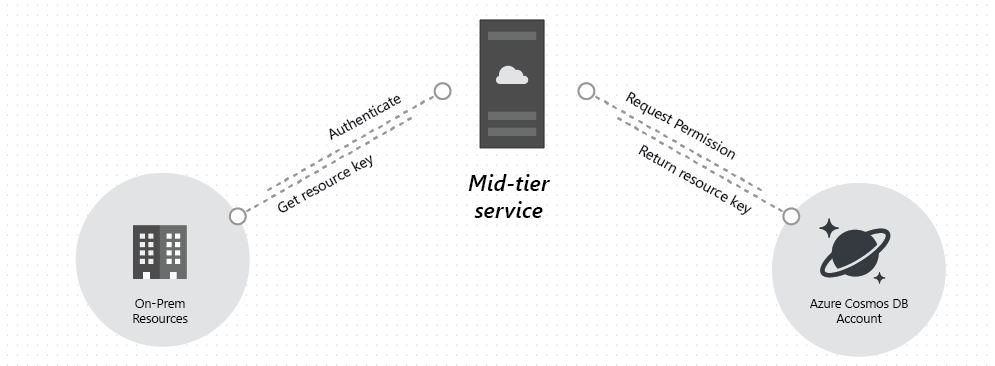

CosmosDB uses a different system. A ‘user’ in CosmosDB space can be seen as a set of permissions (rights, privileges). This set of permissions is given a resource token with which the service or user (as in person) can authenticate against the database. All resource tokens have a lifetime between 10 minutes and 5 hours, which means they have to be refreshed at certain intervals. This leads to the conundrum that we need some instance which owns the primary key for the CosmosDB (which does not expire) to request new resource tokens on behalf of the service or user. The graphic below shows the suggested architecture to create and manage resource tokens.

Adding a new service for dealing with authentication against the CosmosDB can be seen as a relatively big overhead in smaller projects. An added benefit though is that the mid-tier service only needs to handle resource tokens. All other communication like queries, inserts etc. is sent directly from the client to the database, authenticated by the resource token. On a last note, the token generated by CosmosDB does not hold the timestamp until which it is valid, so the application has to take care of refreshing the token when the CosmosDB denies a request. I further want to mention that Azure provides role-based access control for the CosmosDB account. These are very coarse-grained permissions and not comparable to the user-specific settings in traditional databases.
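The refresh-on-denial behaviour could be wrapped roughly as follows. This is only a sketch under stated assumptions: ResourceTokenSession and TokenRejectedException are invented names, the requestToken delegate stands in for the call to the mid-tier service, and the operation delegate stands in for whatever database call is made with the token. How a denied request actually surfaces depends on the API in use and has to be translated into the exception used here.

```csharp
using System;
using System.Threading.Tasks;

// Hypothetical: thrown by the data access code when CosmosDB rejects the token.
public sealed class TokenRejectedException : Exception { }

// Caches a resource token and refreshes it once when a request is denied.
// Not thread-safe; a real implementation would have to guard the token field.
public sealed class ResourceTokenSession
{
    private readonly Func<Task<string>> _requestToken; // asks the mid-tier service for a token
    private string _token;

    public ResourceTokenSession(Func<Task<string>> requestToken) =>
        _requestToken = requestToken ?? throw new ArgumentNullException(nameof(requestToken));

    public async Task<T> ExecuteAsync<T>(Func<string, Task<T>> operation)
    {
        if (_token == null)
            _token = await _requestToken();

        try
        {
            return await operation(_token);
        }
        catch (TokenRejectedException)
        {
            // The expiry is not known up front, so a rejection is the signal to refresh.
            _token = await _requestToken();
            return await operation(_token); // a second rejection propagates to the caller
        }
    }
}
```

Retrying only once keeps a genuinely missing permission from turning into an endless loop of token requests.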

All APIs that I used with CosmosDB were good and the developer experience was okay. I am looking forward to the release of the Linux/macOS version of the emulator to have a true CosmosDB experience with the proper SDK and to use all the permission-related features. Microsoft has laid a great foundation, but it needs to build on it so that all developers can work with the database.

Sources

[1] https://docs.microsoft.com/de-de/azure/architecture/data-guide/big-data/non-relational-data

[2] https://github.com/Azure/azure-cosmos-dotnet-v2

[3] https://msdn.microsoft.com/en-us/magazine/mt848702.aspx

[4] https://docs.microsoft.com/de-de/azure/cosmos-db/mongodb-feature-support

[5] https://docs.microsoft.com/de-de/azure/cosmos-db/create-sql-api-dotnet

[6] https://docs.mongodb.com/ecosystem/drivers/csharp/

[7] https://github.com/aspnet/EntityFrameworkCore/issues/12086

[8] https://docs.microsoft.com/de-de/azure/cosmos-db/mongodb-custom-commands

[9] https://github.com/aspnet/EntityFrameworkCore/issues/13200

Hello, I have a question about the design of my database in Cosmos. I have entities such as Personal, Order and Product,

and I wanted to know whether it is better to create a single container (collection) for all entities or one container (collection) per entity.

Hey there,

sadly there is no go-to solution for this. I personally like to keep entities in separate collections. It avoids having to query for a type or even store type information (when reading lists of entities), but there are multiple drawbacks:

1) Depending on the scenario querying entities in multiple collections can be more difficult.

2) Each collection has to have a minimum of 400 RU/s assigned to it.

One workaround for this is to provision "database level throughput", which reduces the minimum to 100 RU/s per collection. (See: https://docs.microsoft.com/en-us/azure/cosmos-db/how-to-provision-database-throughput)

There is also a new autopilot feature for RUs in preview. I haven’t had a chance to test it yet though (https://docs.microsoft.com/en-us/azure/cosmos-db/provision-throughput-autopilot)

If you have any further questions feel free to ask.

Best,

Chris