Notice:

This post is older than 5 years – the content might be outdated.

This blog post investigates how deep learning models can be optimized and deployed on edge devices for parking guidance systems. I will present two different approaches I focused on in my bachelor’s thesis.

Edge or Cloud Computing?

The domain of deep learning is particularly characterized by complex models with huge amounts of parameters which require high computational resources. In contrast, the concept of the Internet of Things (IoT) comprises distributed edge devices which acquire, process and store data in a decentralized network architecture. For this reason, machine learning on the edge, i.e. the integration of machine learning models on edge nodes or embedded devices, is a challenging task that involves thinking about new techniques.

But why not simply run neural networks in the cloud? Admittedly, cloud computing offers the computational capacities we need for machine learning. However, clouds come with a number of disadvantages, especially when we think of larger scaled IoT in smart city applications: an autonomous vehicle may produce 1 GB of data per second, a smart city (assuming 1 Mio. inhabitants) is estimated to produce 180 000 TB of data per day [1]. Transmitting these huge amounts of data to clouds and data servers causes an enormous load on the network. Moreover, there will be new concerns regarding privacy and data protection when sending personal data to third-party clouds. For these reasons, it can be vital to think about shifting data processing and analytics tasks closer to the edge. We can achieve a lower latency and build real-time machine learning applications since no transmission to servers is necessary. Moreover, we can build more reliable and robust IoT applications which do not depend on the network quality or third-party clouds. However, we also have to cope with limited computational resources, low energy supply and small device storage. We will explain two approaches to build a deep learning based parking guidance system that avoids expensive sensors and simply works with a camera and a single board computer by integrating a Convolutional Neural Network (CNN) directly on the edge device.

Computer Vision-based Parking Guidance Systems

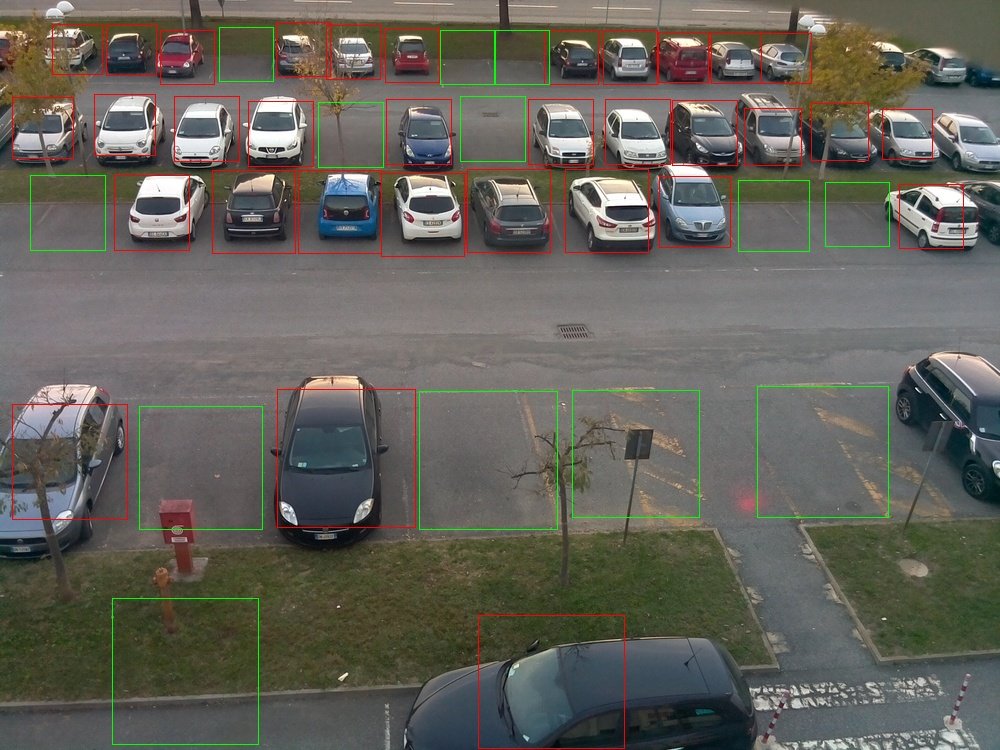

There are two main approaches which can be found in state-of-the-art deep learning based parking guidance systems. With the image classification approach, the image captured by a camera is first segmented in pre-defined individual parking lots, then a CNN is used to classify each parking lot as empty or occupied. The second approach is based on object detection where a neural network works as a car detector and counts all detected instances. The resulting models of both approaches were trained on a GPU and deployed and tested on an embedded system afterwards. We used the NVIDIA Jetson Nano to examine the applicability of the trained neural networks on the edge.

Image Classification Approach

For the image classification approach, the work by Amato et al. called „Deep Learning for Decentralized Parking Lot Occupancy Detection“ [2] was used as baseline. The authors proposed the mAlexNet architecture, a reduced version of the well-known AlexNet [3]. They managed to reduce AlexNet, comprising over 60 Mio. parameters, to under 90 000 parameters by reducing the depth of the network as well as the number of filters and neurons. Since the whole topology as well as all necessary hyperparameters are revealed in their paper, the mAlexNet can be reproduced using Keras with TensorFlow as its backend. For training and testing, two publicly available parking lot datasets, CNRPark+EXT and PKLot were used, which contain over 850 000 images of empty and occupied parking lots captured at different viewpoints and under various weather conditions. In the course of my bachelor’s thesis, I reproduced the model as well as all training and test configurations that were proposed by the authors and reached similar results.

After validation of the reproduced baseline model, we examined two optimization techniques to improve the baseline. By using regularization techniques such as Batch Normalization and Dropout, the generalization ability of the model could be improved so that it performs better on unseen parking scenarios. We could further improve the regularized model by applying Transfer Learning. This method helps to improve a model’s generalization capacity by adopting the weights learned on a much larger, but related dataset. Instead of mAlexNet, we used MobileNet V2, an architecture which is optimized for mobile devices, pre-trained on ImageNet. An excerpt of the extensive tests can be seen in the table below, showing that regularization and transfer learning help to improve the parking lot classification system.

| Training Set | Test Set | Model | F1 Score [%] |

| CNRPark+EXT (Cam 1+8) | CNRPark+EXT Test | mAlexNet | 96.00 |

| regularized mAlexNet | 92.85 | ||

| MobileNet V2 | 91.95 | ||

| PKLot | mAlexNet | 83.63 | |

| regularized mAlexNet | 91.41 | ||

| MobileNet V2 | 92.86 | ||

| CNRPark+EXT Train | CNRPark+EXT Test | mAlexNet | 97.91 |

| regularized mAlexNet | 97.01 | ||

| MobileNet V2 | 94.05 | ||

| PKLot | mAlexNet | 86.29 | |

| regularized mAlexNet | 90.52 | ||

| MobileNet V2 | 96.27 |

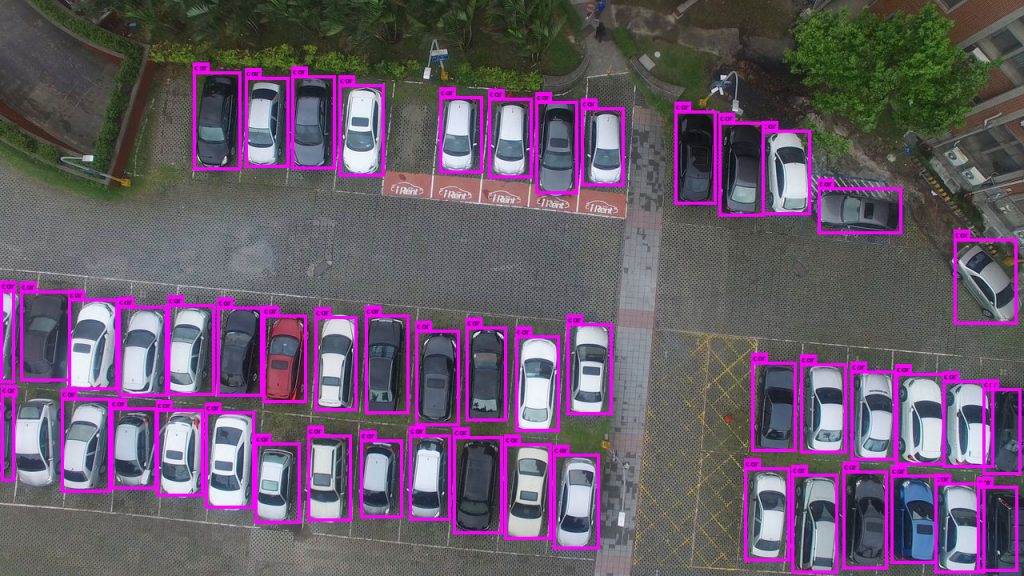

Object Detection Approach

Regarding the object detection approach, the paper „Drone-based Object Counting by Spatially Regularized Regional Proposal Network“ by Hsieh et al. [4] is used for comparison. The authors trained several state-of-the-art object detection models, for instance, Faster R-CNN and YOLOv1. They also present their own architecture, called Layout Proposal Network, which delivers the best performance in their tests. The authors use custom metrics for evaluation, the Mean Absolute Error (MAE) and the Root Mean Squared Error (RMSE), measuring the model’s counting accuracy. For training and testing, the CARPK dataset is used, comprising images of over 90 000 cars captured by a drone. Additionally, CARPK includes a subset of PKLot with suitable annotations for object detection and object counting.

Considering the constraints given by an edge device, we decided to use YOLOv3 by Redmon et al. [5] which is known for its fast yet accurate object detection algorithm. In contrast to region based algorithms like R-CNNs, YOLO divides the image into an S x S sized grid and simultaneously predicts a set of bounding boxes, confidence scores and object classes for each grid cell. The confidence score measures the objectness, i.e. the probability that an object is present in the according bounding box. During training, the model tries to minimize the errors on all three variables: location, confidence and class prediction of the object. Besides the full YOLOv3 model, there is also a reduced version for constrained environments, called Tiny YOLOv3. Apart from object counting-specific metrics, the Mean Average Precision (mAP) is calculated during training and evaluation since it is a common object detection metric, measuring a model’s accuracy.

After training and testing the two YOLO models, we tried to further improve the reduced model. Inspired by the well-known ResNet architecture [6], we added residual layers to the network which connect the input of a layer with the output of an earlier layer. Residual connections enable the model to skip layers if they are not useful for training. As a result, training becomes more dynamic and the model is easier to optimize. Having reproduced the tests by Hsieh et al., we can show that the Residual Tiny YOLOv3 model is 5 times more accurate in counting cars, as shown in the table below.

| Training Set | Test Set | Model | MAE | RMSE | mAP [%] |

| CARPK Train | CARPK Test | YOLOv1 | 48.89 | 57.55 | n/a |

| Faster R-CNN | 47.45 | 57.39 | n/a | ||

| Layout Proposal Network | 23.80 | 36.79 | n/a | ||

| YOLOv3 | 30.25 | 34.55 | 79.35 | ||

| Tiny YOLOv3 | 21.31 | 32.50 | 76.12 | ||

| Residual Tiny YOLOv3 | 5.27 | 8.26 | 93.37 |

Performance Evaluation

After training, evaluation and optimization of various models, we examined the applicability and performance of these models on the Jetson Nano board. While the baseline by Amato et al. takes about 15 seconds for segmentation and classification on a Raspberry Pi 2, the reproduced model only takes a third of the time on a Jetson Nano. Consequently, one can see that the choice of hardware is essential for machine learning on the edge. The optimized classifiers are larger and slower than the mAlexNet. Especially MobileNet is a deeper and more complex model, comprising about four times as much parameters as mAlexNet, resulting in a slower inference speed. The full YOLOv3 model could not even be executed on the edge device since the Jetson board runs out of memory. The tiny YOLOv3 models are both very fast, about 10 times faster than the best image classification model.

| Test Device | Model | Model Size [MB] | Average Inference Time [s] |

| Raspberry Pi 2 | Baseline [2] | n/a | ~15 |

| NVIDIA Jetson Nano | mAlexNet | 0.762 | 5.67 |

| regularized mAlexNet | 0.778 | 7.21 | |

| MobileNet V2 | 3.1 | 19.58 | |

| YOLOv3 | 256.2 | out of memory | |

| Tiny YOLOv3 | 35.1 | 0.48 | |

| Residual Tiny YOLOv3 | 63.3 | 0.43 |

In addition, there are several techniques for further model compression and acceleration. One of these techniques is pruning which compresses a model by removing its weights below a defined threshold. Another method is quantization that reduces the number of bits representing each weight. Pruning and quantization can be implemented using the TensorFlow Model Optimization Toolkit. By applying pruning on MobileNet V2, we were able to compress the model by factor 6, making it smaller than mAlexNet. However, the inference time stays unaffected due to the implementation of pruning in TensorFlow.

Conclusion

We were able to show that the image classification as well as the object detection approach can be leveraged to build accurate, yet fast and small models for embedded devices in a parking guidance application. Comparing the two approaches, the classifier models are easier to train, converge faster and are more robust. However, the parking lots have to be specified first for each camera. In contrast, the object detection approach does not require any pre-processing steps. Furthermore, the models are multiple times faster. However, the YOLO models tend to generalize worse and have to be trained on the application environment. In terms of optimization methods, regularization as well as transfer learning could improve the model’s generalization ability. Residual connections helped to build a model that is 30 times faster and 5 times more accurate than the baseline.

In conclusion, machine learning on the edge is a field of active research. Machine learning libraries such as TensorFlow gradually provide more optimization tools for model compression and acceleration while new edge devices are more and more optimized for AI applications. However, there are a lot of promising ideas and concepts which still need to find their way into soft- and hardware development.

Sources

[1] W. Shi, J. Cao, Q. Zhang, Y. Li, L. Xu. Edge Computing: Vision and Challenges (2016)

[2] G. Amato, F. Carrara, F. Falchi, C. Gennaro, C. Meghini, C. Vairo. Deep Learning for Decentralized Parking Lot Occupancy Detection (2016)

[3] A. Krizhevsky, I. Sutskever, G. Hinton. ImageNet Classification with Deep Convolutional Neural Networks (2012)

[4] M. Hsieh, Y. Lin, W. Hsu. Drone-based Object Counting by Spatially Regularized Regional Proposal Network (2017)

[5] J. Redmon, A. Farhadi. YOLOv3: An Incremental Improvement (2018)

[6] K. He, X. Zhang, S. Ren, J. Sun. Deep residual learning for image recognition (2015)