In this article, we take a look at text adventure games and how to enable learning agents to understand the textual description of the game state and to perform actions that progress the storyline.

Why are text adventure games a relevant object of research?

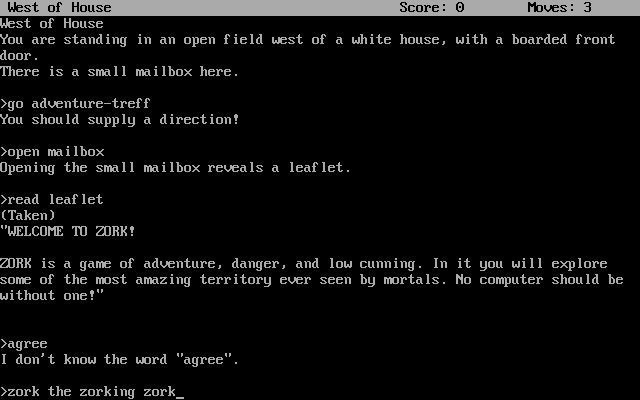

Despite enormous progress in NLP, agents that pursue their goals through natural language communication are still primitive. In contrast to most NLP datasets, text adventure games are interactive: an agent interacts with the world through natural language only. It perceives, acts upon and talks to the world using textual descriptions, commands and dialogue. These games are partially observable simulations, which means that the agent never has access to the true underlying world state. They make it possible to study compositional inference, semantics and other high-level tasks while controlling for perceptual input. Agents reason about how to act in the world based on incomplete textual observations of their immediate surroundings. They explore highly complex interactive situations and must complete a sequence of actions to solve structured puzzles with long-term dependencies.

From a reinforcement learning (RL) perspective, the agent interacts with the game environment and receives a textual observation and a scalar reward. It acts by issuing a natural language command such as "go through the door". This action changes the game state according to a latent transition function defined by the game storyline. The objective of an RL agent is to learn a policy that maximizes the expected cumulative discounted reward.

Challenges in text adventure games

Agents in text adventure games are confronted with several challenges:

- Acting in Combinatorially-sized State-Action Spaces: To operate in Zork1, it is necessary to generate actions consisting of up to five words from a vocabulary of 697 words recognized by the game parser. Even this small vocabulary leads to \(O(697^5) \approx 1.64 \times 10^{14}\) possible actions at every step [5].

- Commonsense Reasoning: Activities in the real world can be thought of as a sequence of sub-goals in a partially observable environment and appear trivial for humans because of commonsense knowledge [5].

- Credit Assignment Problem: Rewards often arrive only after many interactions with the environment, which makes it hard for RL agents to tell which of their earlier actions earned them [6].

- Exploration-Exploitation Dilemma: When agents explore their environment, they perform non-optimal actions to gather information, because giving up an immediate reward might lead to higher rewards in the long run. In contrast, exploitation is greedy behaviour that maximizes the immediate reward based on current information. Text adventure games are typically structured as quests with high branching factors. Players must solve sequences of puzzles to advance in the world, and there are usually multiple ways to finish a quest. To solve these puzzles, players are free to explore new areas or revisit previously seen locations, collecting clues and acquiring the tools needed to unlock the next portion of the game [5].

- Sample Inefficiency: As a result of the credit assignment problem and of sparse, time-unrelated rewards, many solutions to RL problems are sample inefficient. Agents must interact with their environment many times before they learn useful patterns [7].

- Textual-SLAM: The SLAM problem refers to simultaneously localizing and constructing a map while navigating in a new environment. When the environment is described with text, this variant of the SLAM problem is known as Textual-SLAM [8].
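To get a feeling for the size of the action space mentioned in the first challenge, the count can be reproduced in a few lines. This is only a back-of-the-envelope sketch: the vocabulary size and maximum command length are the figures quoted above, while also counting commands shorter than five words is an assumption added here for comparison.

```python
# Size of the parser-level action space in Zork1, using the figures quoted above.
VOCAB_SIZE = 697  # words recognized by the game parser
MAX_WORDS = 5     # maximum command length in words

# Number of word sequences with exactly five words.
exactly_five = VOCAB_SIZE ** MAX_WORDS

# Assumption for comparison: also count all commands with one to five words.
up_to_five = sum(VOCAB_SIZE ** n for n in range(1, MAX_WORDS + 1))

print(f"exactly {MAX_WORDS} words: {exactly_five:.3e}")
print(f"1 to {MAX_WORDS} words:    {up_to_five:.3e}")
```

Almost all of these word sequences are ungrammatical or have no effect in the game, which is why restricting the agent to a small set of valid actions matters so much in practice.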

Looking at the map illustrated in Figure 2, the complexity of such games for RL agents becomes clearer.

Transformer-based language models (LMs)

Since this investigation focuses on Transformer-based language models, we briefly recap some of their characteristics. Language modeling is the task of assigning a probability to words, sequences or sentences in a language. In Masked Language Modeling (MLM), a certain percentage of the words in a given sentence is masked, and the model is expected to predict the masked words from the remaining ones. A different procedure for training an LM is Causal Language Modeling (CLM), in which the model may only consider the words to the left of the target word when predicting it. MLM is commonly preferred when the goal is to learn a good representation of the input, whereas CLM is favored when generating fluent text matters more. DistilBERT is a smaller version of BERT that is usually trained with MLM and is designed to learn appropriate representations of texts. The Feedback Transformer, on the other hand, was trained with CLM in its initial publication. It can also work efficiently with few parameters because it removes some limitations of the vanilla transformer.
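The difference between the two training objectives is easiest to see in how the training examples are built. The following is a minimal sketch on whitespace tokens, not the tokenization or masking scheme of any particular model: the function names, the `[MASK]` string and the 15% masking rate are illustrative assumptions, and real MLM implementations use somewhat more elaborate replacement rules.

```python
import random

MASK = "[MASK]"  # illustrative mask symbol

def mlm_example(tokens, mask_prob=0.15, seed=0):
    """Build one masked-LM example: (masked_input, targets).

    targets maps each masked position to the original token. The model
    sees context on both sides of every masked position.
    """
    rng = random.Random(seed)
    masked, targets = list(tokens), {}
    for i, tok in enumerate(tokens):
        if rng.random() < mask_prob:
            masked[i] = MASK
            targets[i] = tok
    if not targets:  # make sure there is at least one prediction target
        i = rng.randrange(len(tokens))
        masked[i], targets[i] = MASK, tokens[i]
    return masked, targets

def clm_examples(tokens):
    """Build causal-LM examples: (left_context, target) for every position.

    The model only ever sees the tokens to the left of the target.
    """
    return [(tokens[:i], tokens[i]) for i in range(1, len(tokens))]

sentence = "open the mailbox and read the leaflet".split()

masked, targets = mlm_example(sentence)
print("MLM input:  ", masked)
print("MLM targets:", targets)

for context, target in clm_examples(sentence):
    print("CLM:", context, "->", target)
```

MLM yields a few prediction targets per sentence, each with two-sided context, while CLM yields one target per position with left context only, which matches the representation-versus-generation trade-off described above.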

Deep reinforcement relevance network (DRRN)

Prior work using Deep Q-learning has shown high capacity and scalability for handling a large state space, but most studies have used a network that generates \(|A|\) outputs simultaneously, each of which represents the value of \(Q(s, a)\) for a particular action \(a\). It is not practical to have an architecture of a size that is dependent on a large number of natural language actions. Furthermore, in text adventure games the valid action set at each time step is an unknown subset of the unbounded action space that varies over time.

He et al. [2] proposed the DRRN, which consists of a pair of neural networks, one for the state text embedding and one for the action text embeddings, combined by a pairwise interaction function. For a given state-action text pair, the DRRN estimates the Q-value stepwise. First, state and action are embedded separately. Next, \(Q(s_t, a_t^{i})\) is approximated using the interaction function. Finally, for a given state, the optimal action is selected from the set of valid actions. The DRRN uses experience replay with a fixed exploration policy to interact with the environment and obtain a sample trajectory. A random transition tuple is sampled from the replay buffer to compute the temporal difference error.

In modifications of the DRRN, each piece of input is encoded with a separate GRU. For example, a GRU encodes each valid action at every game state into a vector, which is concatenated with the encoded observation vector. From this combined vector, the DRRN computes a Q-value for the state-action pair, estimating the expected total discounted reward if this action is taken and the policy is followed thereafter. This procedure is repeated for each valid action. The DRRN then selects the action by sampling from the softmax distribution over those Q-values.
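The scoring and selection steps can be sketched in plain Python. This is a toy illustration, not the DRRN implementation: the `embed` function is a hypothetical stand-in for the learned state and action networks, an inner product is assumed as the interaction function, and the example state and actions are made up.

```python
import math
import random

DIM = 16  # embedding size (illustrative)

def embed(text, salt):
    """Toy stand-in for a learned encoder: a deterministic pseudo-random
    bag-of-words vector. `salt` separates the state and action encoders."""
    vec = [0.0] * DIM
    for word in text.lower().split():
        word_rng = random.Random(f"{salt}:{word}")
        for k in range(DIM):
            vec[k] += word_rng.uniform(-1.0, 1.0)
    return vec

def q_value(state_vec, action_vec):
    """Pairwise interaction function; an inner product is assumed here."""
    return sum(s * a for s, a in zip(state_vec, action_vec))

def softmax(values):
    """Numerically stable softmax over a list of floats."""
    m = max(values)
    exps = [math.exp(v - m) for v in values]
    total = sum(exps)
    return [e / total for e in exps]

def select_action(state_text, valid_actions, rng):
    """Score every valid action against the state, then sample from softmax(Q)."""
    state_vec = embed(state_text, "state")
    qs = [q_value(state_vec, embed(a, "action")) for a in valid_actions]
    probs = softmax(qs)
    choice = rng.choices(valid_actions, weights=probs, k=1)[0]
    return choice, qs, probs

rng = random.Random(0)
state = "You are standing west of a white house. There is a small mailbox here."
valid_actions = ["open mailbox", "go north", "go south"]

action, qs, probs = select_action(state, valid_actions, rng)
for a, q, p in zip(valid_actions, qs, probs):
    print(f"{a:<14} Q={q:+.3f}  p={p:.3f}")
print("sampled:", action)
```

Because the network scores one state-action pair at a time, its size does not depend on the number of actions, and the set of valid actions can change from step to step.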

Approach

The architecture of our approach is illustrated in Figure 3. It combines DistilBERT or the Feedback Transformer with the DRRN and relies heavily on Singh et al. [3]. Both transformers are trained as language models in a two-step procedure. First, the models are pre-trained on Wikipedia to incorporate commonsense knowledge. Next, we fine-tune them on game trajectories from JerichoWorld and ClubFloyd to inject game sense. JerichoWorld was created by a walkthrough agent that knows the optimal actions, in combination with random ones. The dataset therefore represents a stronger game sense than ClubFloyd, which consists of game transcripts from human players. Our investigation follows a data-centric and a model-based comparison. We contrast the overall model when DistilBERT is fine-tuned on ClubFloyd with one fine-tuned on JerichoWorld. Further, we fine-tune the Feedback Transformer on ClubFloyd only.

The states in the game consist of observations (\(o_t\)) concatenated with the player's items (\(i_t\)) and the description of the current location (\(l_t\)), which the game engine provides in response to the commands "inventory" and "look". All of this information is tokenized by a WordPiece tokenizer, and the tokenized pieces of state information are encoded separately with the respective LM. To be more precise, a single instance of a fine-tuned DistilBERT or Feedback Transformer is used. First, the pre-trained LM is initialized with the weights obtained from the MLM or the CLM training procedure, respectively. The GRU is initialized with random weights. Each vectorized output of the LM serves as input to the corresponding GRU encoder. The outputs for the state information are concatenated and cached. Then, each valid action is tokenized and encoded in the same way and concatenated with the state information. On top of this, the DRRN algorithm calculates the overall Q-value for each state-action pair individually.
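The caching of the state encoding can be illustrated with a small sketch. Everything here is a simplified assumption: `toy_encode` stands in for the LM plus GRU encoder, `toy_q_head` stands in for the DRRN scoring layers, and the class and its counter are hypothetical names used only to show that the state is encoded once while each action gets its own pass.

```python
def toy_encode(text):
    """Hypothetical stand-in for LM + GRU: maps text to a 2-dimensional vector."""
    words = text.split()
    return [float(len(words)), float(sum(len(w) for w in words))]

def toy_q_head(vector):
    """Hypothetical stand-in for the DRRN layers that map a vector to a Q-value."""
    return sum(vector) / len(vector)

class CachedStateScorer:
    def __init__(self, encode=toy_encode, q_head=toy_q_head):
        self.encode = encode
        self.q_head = q_head
        self._state_cache = {}
        self.state_passes = 0  # counts how often a state is actually encoded

    def encode_state(self, observation, inventory, look):
        """Encode the three state pieces separately, concatenate, and cache."""
        key = (observation, inventory, look)
        if key not in self._state_cache:
            parts = [self.encode(piece) for piece in key]
            self._state_cache[key] = [x for part in parts for x in part]
            self.state_passes += 1
        return self._state_cache[key]

    def q_values(self, observation, inventory, look, valid_actions):
        """One cached state pass, then one action pass per valid action."""
        state_vec = self.encode_state(observation, inventory, look)
        return {a: self.q_head(state_vec + self.encode(a)) for a in valid_actions}

scorer = CachedStateScorer()
state = ("You are in a kitchen.", "You carry a lamp.", "Kitchen. A door leads west.")
actions = ["go west", "take lamp", "open door"]

q_first = scorer.q_values(*state, actions)
q_again = scorer.q_values(*state, actions)  # reuses the cached state encoding
print(q_first)
print("state encoder passes:", scorer.state_passes)
```

With a large LM as the encoder, the state pass is the expensive part, so reusing it across all valid actions saves most of the computation per step.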

The RL agent is trained and evaluated on five games with different difficulty levels using the Jericho framework and the same handicaps as in the DRRN baseline. The valid action handicap is used for all training runs, which means the framework tells our agent which actions change the game state. Following this procedure, the respective LM is able to transfer its prior knowledge about the world to the agent. The agent, in turn, learns more informed policies on top of the LM, optimized for specific games. A single instance of the trained LM is used without further fine-tuning for specific games, which keeps the LM general and adaptable to any RL agent or game. To determine the Q-values for different actions \(a_i\) in a single state, multiple forward passes of the network function \(\theta(s_i, a_i)\) are needed. To compute multiple \(Q(s, a_i)\) values efficiently, a single partial forward pass is performed for the state and its encoding is cached. Then, a partial forward pass is performed for each action, and the respective Q-value is computed using the cached state encoding. DRRN is trained using tuples \((o_t, a_t, r_t, o_{t+1})\) sampled from a prioritized experience replay buffer with the TD loss:

\(L_{TD}(\phi) = \left(r_t + \gamma \max_{a \in A} Q_{\phi}(o_{t+1}, a) - Q_{\phi}(o_{t}, a_t)\right)^2,\)

where \(\phi\) represents the overall DRRN parameters, \(r_t\) is the reward at the \(t\)-th time step and \(\gamma\) is the discount factor. The next action is chosen by softmax sampling the predicted Q-values.
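As a worked example of the TD loss for a single transition, consider the sketch below. The numbers are invented for illustration, and the `done` flag that drops the bootstrap term at the end of an episode is a common convention assumed here rather than something taken from the formula above.

```python
def td_loss(reward, gamma, q_current, next_q_values, done=False):
    """Squared TD error for one transition (o_t, a_t, r_t, o_{t+1}).

    q_current     -- Q(o_t, a_t) for the action that was taken
    next_q_values -- Q(o_{t+1}, a) for every valid action a in the next state
    done          -- assumption: no bootstrapping after the final step
    """
    bootstrap = 0.0 if done or not next_q_values else max(next_q_values)
    target = reward + gamma * bootstrap
    return (target - q_current) ** 2

# Invented numbers: reward 5, discount 0.9, current estimate 6,
# and three valid actions in the next state.
loss = td_loss(reward=5.0, gamma=0.9, q_current=6.0, next_q_values=[1.0, 3.0, 2.0])

# (5 + 0.9 * 3 - 6)^2 = 1.7^2, i.e. about 2.89
print(f"TD loss: {loss:.4f}")
```

Only the best next-state Q-value enters the target, which is why the maximum is taken over all valid actions in \(o_{t+1}\).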

Results

We first look at the game performance and contrast the three models. The average score over the last 100 finished episodes is reported as the score of a game run. We further look at the maximum seen score. We then look at evidence that points to a semantic understanding of the models.
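Both reported numbers are simple to track during training. The following sketch shows one way to do it; the class name is hypothetical, and taking the maximum over finished episode scores (rather than over scores seen at any intermediate step) is an assumption.

```python
from collections import deque

class ScoreTracker:
    """Tracks the average over the last `window` finished episodes and the maximum score."""

    def __init__(self, window=100):
        self.recent = deque(maxlen=window)  # old episodes drop out automatically
        self.max_seen = None

    def add_episode(self, score):
        self.recent.append(score)
        if self.max_seen is None or score > self.max_seen:
            self.max_seen = score

    def last_window_average(self):
        if not self.recent:
            raise ValueError("no finished episodes yet")
        return sum(self.recent) / len(self.recent)

# Small window for illustration; the article uses the last 100 episodes.
tracker = ScoreTracker(window=3)
for episode_score in [10, 0, 20, 30, 40]:
    tracker.add_episode(episode_score)

print("average over last 3 episodes:", tracker.last_window_average())
print("maximum seen score:", tracker.max_seen)
```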

Gameplay

Looking at Figure 4 and Figure 5, the Feedback Transformer-based model trains more slowly than the DistilBERT-based ones. The agent equipped with the LM fine-tuned on ClubFloyd trains fastest and achieves the highest average score over the last 100 episodes. However, the Feedback Transformer aids the agent significantly: the same maximum score is observed, and the "Last 100 Episodes" score is only about 6 lower.

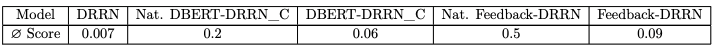

All three models can compete with state-of-the-art models in 5 different games, as Table 1 shows. This also verifies that an LM not further fine-tuned to specific game dynamics can generalize to different games. In all cases, the RL agent is trained for the individual game. Even though the Feedback Transformer as backbone LM was initially trained to generate language, it incorporates language understanding to assist artificial agents in playing text adventure games.

Semantic understanding

Yao et al. [4] showed that the TD loss and valid action handicap setup with the DRRN algorithm can lead to overfitting and memorization when the reward is mostly deterministic. They hypothesize that this could hinder the actual goal of understanding semantics and taking natural language actions. To investigate this hypothesis further, the Feedback-DRRN is evaluated in the following way: a training run is performed for Zork1 in the same way as before, but this time the evaluation is conducted on Zork3. This way, we verify whether the agent can transfer its learned knowledge from one game to another. The agent has no opportunity to learn game trajectories by memorization; it has to understand and connect the semantics of the two games. For comparison, another agent with exactly the same architecture is trained and evaluated on Zork3. Both agents are compared by their average episode score over 200 episodes. Additionally, they are compared with DBERT-DRRN-CF and the vanilla DRRN, which are trained and evaluated with the same procedure.

As can be seen in Table 2, for both models the natively trained agent outscores the transferring one. The native DBERT-DRRN-CF outscores its transferring counterpart by 333%. For Feedback-DRRN the difference is as high as 555%. However, both transferring models significantly outscore the native vanilla DRRN, by a factor of ten. This is likely because, instead of starting with small random weights, the agent has a prior sense of how Zork1 worked in the form of larger weights. So to some extent, instead of fully exploiting the pre-trained knowledge, the agent first has to forget information about the previous game, which makes the task even more difficult. Nevertheless, the agents show some ability to understand semantics and transfer knowledge. A possible explanation is the similarity of the games and their high percentage of shared tokens. Agents with this architectural setup therefore do not profit only from the DRRN agent with the valid action handicap.

Table 3 shows three indicators of how well developed the RL agent's understanding of language is. Overall, DBERT-DRRN-CF performs the fewest navigational commands, followed by DBERT-DRRN-JW and Feedback-DRRN. However, all three models navigate less frequently in percentage terms as training progresses. The Feedback-DRRN has the fewest repeated actions. The normalized MAD does not strictly increase for all three models. The Feedback-DRRN in particular does not seem to become more confident in later steps. For the DBERT-DRRN-based models, a small improvement can be observed. It is difficult to tell in this way whether the agent is more confident in its chosen actions because of the LM or because of the DRRN. On the other hand, as training progresses, all three models more often choose the same action that the walkthrough agent recommends. All other indicators decrease almost consistently during training, showing that the agent is sufficiently reinforced to better understand the observation and act accordingly.

Conclusion and takeaways

This article looked at different transformer-based language models, trained with different routines, as an aid for an RL agent playing text adventures. We found that the RL agent was equipped with a fundamental semantic understanding, even though the backbone LM was originally trained for language generation. The language model provides several benefits to the RL agent: linguistic, world and game sense priors. The approach aims to draw the attention of research to a joint consideration of language comprehension and language generation. Better priors in the pre-trained backbone language model might benefit RL agents further, since language-understanding agents built on strong language models have been observed to be more capable.

Citations

[1] Zork image

[2] He et al.

[3] Singh et al.

[4] Yao et al.

[6] Alipov et al.

[7] Lee et al.

[8] Aulinas et al.