Over the last few years, there has been a lot of movement in the backend scene. Many programming languages and frameworks have gained people’s interest, mine included. So I decided to write this article about the languages and frameworks I have stumbled upon and wanted to compare.

Back in July 2022 when I started at inovex, I only knew two main programming languages: C# and Python. Having worked for a few years with both languages and corresponding frameworks like .NET, Flask, and FastAPI to build RESTful services, I have seen a lot of nice features, but also the downsides.

Besides my projects at inovex, I got the opportunity to learn a lot of new stuff. First, I decided to learn Go, since I had wanted to do that for a long time. In December 2022, a new Advent of Code season started, where I solved some tasks in Go but also stumbled upon Kotlin. So after a whole day of work, I decided to learn a bit of Kotlin as well via the learning path in the JetBrains Academy. But there was still something more on my mind: What about Rust? Between Christmas and New Year’s Eve, I worked my way through the Rust Book as well as a little of the Comprehensive Rust course developed by the Android Team at Google.

I guess you can see a pattern here: I like to learn new stuff and want to create little RESTful services in order to get a feeling for the language and at least one popular framework. But after doing all this, I came up with a question: How do they perform against each other? I hope this article will give you at least a little insight!

Disclaimer

This will not be a scientific test. This article is more about getting a rough performance indicator for each combination of programming language and framework, since I am not an expert in all of the languages and frameworks used. It also reflects my own opinions and insights during these tests; results may differ if you run the benchmarks on your own or use other tooling.

Prerequisites for comparing the languages/frameworks

Before starting with the benchmark comparing Rust vs. Go vs. C# vs. Kotlin, I needed to set some prerequisites and answer some questions for myself:

- What are the key indicators I want to know about?

- How can I guarantee the same environment and behavior for each service?

- What tool do I use to measure the key indicators?

- What should I do with the results? (I guess you are reading the answer already)

Key indicators

For the indicators, I wanted to focus on just a few things: requests per second and average response time. That does not sound like much at first, but I also wanted to know about CPU and memory consumption. From an ecological and economical point of view, this was my main point of interest: if a service consumes less CPU and memory than the others, I can run more services of the same type (and size) on a single hardware instance, and maybe even faster and more efficiently.

Environment and behavior

To make my results more comparable, I decided to use Docker for the services, since I know Docker quite well. I built every service with a multi-stage Dockerfile, where the service is built in the first stage and then executed on the same base image. One remark here: to run the services written in Kotlin and C#, I had to install the corresponding Java and .NET runtimes on top of the base image. I also set resource limits on the containers to make sure that every service gets the same amount of resources during the tests and to prevent a potential overload of the Docker host.

The key specs for the docker images:

- debian:11.6-slim as the base image

- Limited to 4 vCPUs

- Limited to 1GB of memory usage

- All containers keep running during the tests

For the hardware, I created an instance in our own inovex Cloud, which I use to build and run my containers.

The specs for the instance are:

- Ubuntu Server 22.04 as the host operating system

- Docker Engine v.20.10.22

- 4 vCPUs

- 12GB RAM

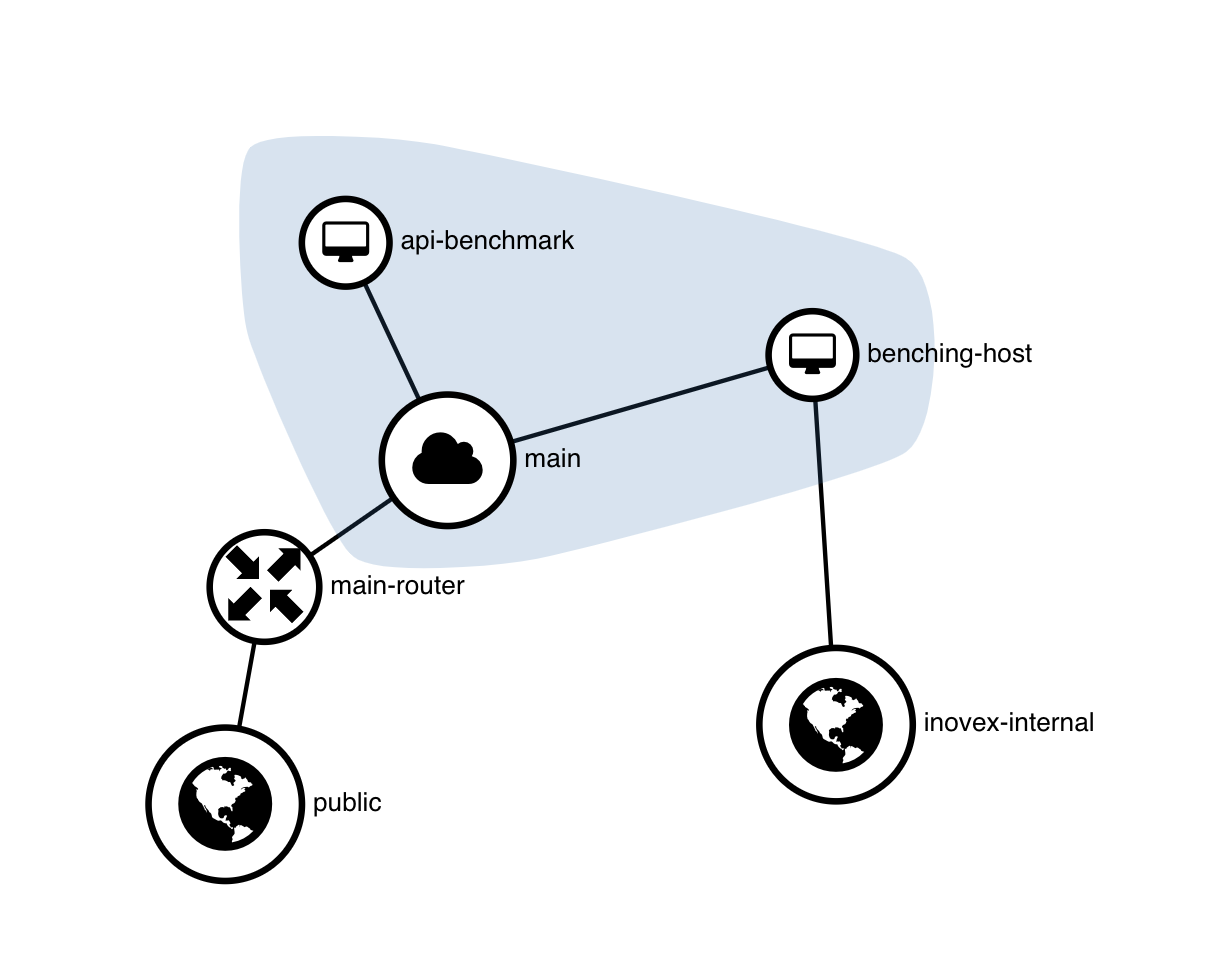

I have also created a second instance in the same subnet as the main instance so that I can also (mostly) eliminate network latency and hops. This machine acts as my client, which performs the load test on the main instance.

The specs for the client instance are:

- Ubuntu Server 22.04 as the base operating system

- 4 vCPUs

- 8GB RAM

The network topology for both instances looks like this:

Both the benchmark and the client instance are in the same subnet, connected via gigabit Ethernet interfaces with a latency below 1 ms. The client machine (benching-host) is also connected to the inovex internal network, so I can connect to it via VPN. The benchmark instance (api-benchmark) has a public IP address and router, so I can build the corresponding Docker images remotely from my machine on the host itself to make the setup more reproducible.

Next up I decided that each service gets 3 REST routes:

- The first route is a basic GET, which returns a JSON list of 3 items that are declared inside the application. This is used to eliminate other uncertainties like external databases or file system access. It will be the first route we are running our benchmark against and will also be our comparison to the other services to see that they behave the same.

- The next route is a POST, which takes no arguments or request body at all. I will let the service sleep a fixed amount of time (300 ms) to block the request for a little while and test the concurrent handling of requests inside the HTTP handler/framework.

- The third route is again a GET, functioning as a health check for a little UI I have written in order to see if all services are up and running.
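To make the three routes more concrete, here is a minimal, framework-free sketch in Python. It is not one of the benchmarked implementations: the employee fields, the POST response body, and the function names are assumptions for illustration only. The sketch shows why a non-blocking 300 ms wait lets 50 simultaneous requests finish in roughly the time of one.

```python
import asyncio
import json
import time

# Hypothetical in-memory data; the article only states that the list has 3 items.
EMPLOYEES = [
    {"id": 1, "name": "Alice"},
    {"id": 2, "name": "Bob"},
    {"id": 3, "name": "Carol"},
]

SLEEP_SECONDS = 0.3  # fixed delay of the POST route (300 ms)


async def get_employees() -> str:
    """First route (GET): return the static list as JSON."""
    return json.dumps(EMPLOYEES)


async def post_employees() -> str:
    """Second route (POST): no body, wait 300 ms without blocking other requests."""
    await asyncio.sleep(SLEEP_SECONDS)
    return json.dumps({"status": "done"})  # response body is an assumption


async def get_health() -> str:
    """Third route (GET): health check used by the UI."""
    return "OK"


async def simulate_concurrent_posts(connections: int) -> float:
    """Run `connections` POST handlers at once and return the elapsed seconds."""
    start = time.perf_counter()
    await asyncio.gather(*(post_employees() for _ in range(connections)))
    return time.perf_counter() - start


if __name__ == "__main__":
    elapsed = asyncio.run(simulate_concurrent_posts(50))
    print(f"50 concurrent POST handlers took {elapsed:.2f}s")
```

If the handler blocked its thread instead (and only one thread were available), the same 50 calls would take about 50 × 0.3 s = 15 s. That difference is what the second route is meant to expose.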

For monitoring the CPU and memory usage, I am using the built-in command docker stats from the docker daemon itself, since it provides the most accurate and fastest metrics during the load test while keeping the overhead of monitoring quite low.

Last but not least, I had to choose a load testing tool that provides the measurements for the requests per second as well as the average response time. After a bit of research, I decided to use go-wrk as my tool of choice.

Overview of languages and frameworks

| Language / Version | Framework / Version | Why I used it |
|---|---|---|
| Rust / 1.66 | Rocket / 0.5.0-rc.2 | Was the first result when I searched for a backend framework in Rust which seemed easy to use |
| Go / 1.19.4 | Echo / 4.10.0 | Easy to use, uses the HTTP handler from stdlib |
| C# / 11.0 | .NET Web API / 7.0 | Microsoft's go-to standard to create Web APIs |
| C# / 11.0 | .NET Minimal API / 7.0 | Microsoft's new approach of creating APIs with less boilerplate code and more performance |
| Kotlin / 1.8.0 | Ktor / 2.2.1 | As a JetBrains advocate I wanted to test JetBrains' approach of a web framework based on Kotlin and Coroutines |
| Kotlin / 1.8.0 | Spring Boot / 3.0.1 | The "modern" all-time classic creating APIs with modern Kotlin language features |

If you want to try it yourself or want to have a closer look at the source code, you can find all of the demo applications, as well as the UI and docker-compose file inside this repository: https://github.com/timoyo93/common-api-benchmarks

Testing scenario

As mentioned above, I used go-wrk as my benchmarking tool. It is a simple CLI tool that you can pass some arguments for testing. For both of my scenarios, I used the following arguments:

- 50 concurrent connections

- 60 seconds test duration

- Request timeout after 10 seconds
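The article does not show the exact command line, so the following is only a sketch of how these arguments could be passed. It assumes the tsliwowicz/go-wrk flag names (`-c` connections, `-d` duration in seconds, `-T` timeout in milliseconds, `-M` HTTP method); the port and the helper name `build_go_wrk_command` are hypothetical.

```python
import shlex


def build_go_wrk_command(url, method="GET", connections=50, duration_s=60, timeout_s=10):
    """Build the argument list for one go-wrk run (flag names are an assumption)."""
    return [
        "go-wrk",
        "-c", str(connections),       # concurrent connections
        "-d", str(duration_s),        # test duration in seconds
        "-T", str(timeout_s * 1000),  # request timeout, converted to milliseconds
        "-M", method,                 # HTTP method
        url,
    ]


# Hypothetical target: host name taken from the topology description, port assumed.
command = build_go_wrk_command("http://api-benchmark:8080/employees")
print(shlex.join(command))
```

The resulting list can be handed to `subprocess.run(command)` on the client machine; for the second scenario only `method="POST"` changes.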

During each test, I write the stats of the container under test to a local file. From this file I calculate the average CPU and memory usage as the sum of all entries divided by the number of entries.
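As a rough illustration of that averaging step, the sketch below assumes the file holds one sample per line in the form `CPU%,used / limit`, for example as produced by `docker stats --no-stream --format "{{.CPUPerc}},{{.MemUsage}}"`. The file layout and the function names are assumptions, not the format actually used for the article.

```python
import re

# Conversion factors from the units docker stats prints to MiB.
UNIT_TO_MIB = {"B": 1 / (1024 * 1024), "KiB": 1 / 1024, "MiB": 1.0, "GiB": 1024.0}


def parse_sample(line):
    """Parse one 'CPU%,used / limit' line into (cpu_percent, used_mib)."""
    cpu_field, mem_field = line.strip().split(",", 1)
    cpu_percent = float(cpu_field.rstrip("%"))
    used = mem_field.split("/")[0].strip()
    match = re.fullmatch(r"([\d.]+)\s*([A-Za-z]+)", used)
    if match is None:
        raise ValueError(f"unexpected memory field: {mem_field!r}")
    value, unit = match.groups()
    return cpu_percent, float(value) * UNIT_TO_MIB[unit]


def summarize(lines):
    """Return average and peak CPU and memory over all non-empty sample lines."""
    samples = [parse_sample(line) for line in lines if line.strip()]
    cpus = [cpu for cpu, _ in samples]
    mems = [mem for _, mem in samples]
    return {
        "cpu_avg": sum(cpus) / len(cpus),  # sum of entries / number of entries
        "cpu_peak": max(cpus),
        "mem_avg": sum(mems) / len(mems),
        "mem_peak": max(mems),
    }


# Made-up sample lines, only to show the expected input shape.
example = ["60.00%,3.00MiB / 1GiB", "64.00%,3.20MiB / 1GiB"]
print(summarize(example))
```

Reading a real log would then be `summarize(open(path))`, where `path` points to the file written during the run.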

Also, after every test run I wait for 5 minutes and have a look at the CPU and memory usage again to see if there is a memory leak present or not. In the results, it is called Memory Usage (cooldown).

Enough talking, let’s look at some results!

Results

First run -> GET /employees

| Language / Framework | Total requests | Requests per second | Average response time | Fastest request | Slowest request | Number of timeouts |
|---|---|---|---|---|---|---|
| Rust / Rocket | 1069278 | 17927.63 | 2.79ms | 388.81µs | 29.94ms | 0 |
| Go / Echo | 1084515 | 18178.04 | 2.75ms | 354.54µs | 32.90ms | 0 |
| C# / .NET Web API | 876431 | 14676.06 | 3.41ms | 458.88µs | 60.42ms | 0 |
| C# / .NET Minimal API | 936929 | 15699.06 | 3.18ms | 402.89µs | 74.22ms | 0 |
| Kotlin / Ktor | 349672 | 5835.44 | 8.50ms | 474.20µs | 202.46ms | 0 |
| Kotlin / Spring Boot | 457694 | 7647.17 | 6.54ms | 613.95µs | 370.16ms | 0 |

| Language / Framework | CPU usage (idle) | CPU usage (average) | CPU usage (peak) | Memory usage (idle) | Memory usage (average) | Memory usage (peak) | Memory usage (cooldown) |
|---|---|---|---|---|---|---|---|
| Rust / Rocket | 0.00% | 62.84% | 65.73% | 0.92MB | 3.04MB | 3.20MB | 2.73MB |
| Go / Echo | 0.00% | 60.20% | 66.07% | 2.02MB | 8.62MB | 9.29MB | 5.15MB |
| C# / .NET Web API | 0.01% | 64.24% | 79.38% | 41.96MB | 90.60MB | 98.57MB | 90.12MB |
| C# / .NET Minimal API | 0.01% | 53.06% | 58.15% | 41.09MB | 86.21MB | 87.14MB | 86.59MB |
| Kotlin / Ktor | 0.03% | 56.80% | 80.40% | 88.16MB | 122.15MB | 126.80MB | 122.20MB |
| Kotlin / Spring Boot | 0.05% | 77.85% | 97.42% | 106.10MB | 176.78MB | 193.90MB | 178.00MB |

So the first run was a success. All services were capable of handling the requests while responding with "OK" for the health check. But as we can see, the two Kotlin services (Ktor and Spring Boot) had the highest peaks in CPU usage and consumed the most memory.

We can also see that the services written in Go and Rust are the most "lightweight" of all services and were able to handle the most requests per second.

But this was just the warm-up. Now let’s get to the real part.

Second run -> POST /employees

| Language / Framework | Total requests | Requests per second | Average response time | Fastest request | Slowest request | Number of timeouts |
|---|---|---|---|---|---|---|
| Rust / Rocket | 9917 | 165.00 | 303.02ms | 300.76ms | 317.02ms | 0 |
| Go / Echo | 9950 | 165.30 | 302.48ms | 300.54ms | 311.17ms | 0 |
| C# / .NET Web API | 9950 | 165.45 | 302.20ms | 295.72ms* | 370.72ms | 0 |
| C# / .NET Minimal API | 9953 | 165.45 | 302.20ms | 295.97ms* | 324.31ms | 0 |
| Kotlin / Ktor | 9900 | 164.51 | 303.93ms | 300.89ms | 455.94ms | 0 |
| Kotlin / Spring Boot | 9950 | 165.30 | 302.48ms | 300.77ms | 397.33ms | 0 |

* The delay/sleep command in C# is not as accurate as in other languages because it uses the OS’s time resolution, so the fastest request came in below 300ms. Since the difference is only around 4-5ms, I will let that count.

| Language / Framework | CPU usage (idle) | CPU usage (average) | CPU usage (peak) | Memory usage (idle) | Memory usage (average) | Memory usage (peak) | Memory usage (cooldown) |
|---|---|---|---|---|---|---|---|
| Rust / Rocket | 0.00% | 0.87% | 1.22% | 2.73MB | 5.12MB | 5.70MB | 5.04MB |
| Go / Echo | 0.00% | 0.87% | 1.07% | 4.29MB | 8.54MB | 9.13MB | 5.93MB |
| C# / .NET Web API | 0.00% | 2.34% | 2.84% | 90.68MB | 93.33MB | 93.62MB | 93.52MB |
| C# / .NET Minimal API | 0.00% | 2.37% | 4.52% | 89.50MB | 91.67MB | 91.77MB | 91.45MB |
| Kotlin / Ktor | 0.04% | 4.30% | 18.50% | 122.30MB | 132.87MB | 134.20MB | 133.80MB |
| Kotlin / Spring Boot | 0.06% | 3.45% | 20.43% | 178.10MB | 187.74MB | 189.20MB | 184.80MB |

This run was also a success, but we cannot see much of a difference here, since each service waits 300 ms asynchronously on every request. Doing the math: a single connection can complete at most 60 s / 0.3 s = 200 requests, so 50 connections can complete at most 10,000 requests in 60 seconds, or around 167 requests per second.

That means that all services were able to handle around 99 % of the theoretical maximum. Overall, my insight is that all frameworks handle concurrency very well, but the Kotlin services (Ktor and Spring Boot) are still the ones using the most resources (CPU and memory).
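The upper bound for this scenario can be reproduced with a few lines of arithmetic. The sketch below uses the test parameters from the article (50 connections, 60 seconds, 300 ms sleep) and the total request counts from the results table; the variable names are my own.

```python
CONNECTIONS = 50
DURATION_S = 60
SLEEP_MS = 300

# Each connection can finish one request per 300 ms at best.
max_total = CONNECTIONS * DURATION_S * 1000 // SLEEP_MS  # upper bound on total requests
max_rps = CONNECTIONS * 1000 / SLEEP_MS                  # upper bound on requests per second

# Total requests per service, copied from the results table of the second run.
measured_totals = {
    "Rust / Rocket": 9917,
    "Go / Echo": 9950,
    "C# / .NET Web API": 9950,
    "C# / .NET Minimal API": 9953,
    "Kotlin / Ktor": 9900,
    "Kotlin / Spring Boot": 9950,
}

# Share of the theoretical maximum that each service reached.
shares = {name: total / max_total for name, total in measured_totals.items()}

print(f"max total: {max_total}, max rps: {max_rps:.2f}")
for name, share in shares.items():
    print(f"{name}: {share:.2%} of the theoretical maximum")
```

The small gap to the bound is expected, because every request also spends a few milliseconds on the network and in the framework on top of the 300 ms sleep.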

Conclusion

So this is it. If I had to nominate a winner, I would pick Go and the Echo framework, since it was the fastest service during testing, handles concurrency well with the HTTP handler from the stdlib (which Echo uses), and also had quite low CPU and memory usage.

But would I say that all the other frameworks are bad? Definitely NOT.

I had quite a blast coding all of the services in each programming language and framework. And at the end of the day, it depends on personal preference and project or company-wide preferences or standards.

Personally, I made quite a move from C# and .NET into the Go and Rust ecosystem and quite enjoy it! I am looking forward to using Rust and Go more often in the future. But that does not mean that I will quit C# and .NET since it was my first experience in professional software development and I still like and use it.

I also hope that you have enjoyed reading this article. Keep an eye on either the repository or this blog; maybe there will be a second part.

Nice article, I have a few questions / points, though:

- Why does the kotlin-spring directory contain Java source code?

- A (technical) explanation / interpretation of results would be nice. I’m not really familiar with Rust, does Rust reuse the created objects (if so, the comparison would be unfair to a certain degree) or is the garbage collection more efficient (compared to the other languages)? What is the explanation for the low memory footprint?

- Would be interesting to see what could be done by the developer to increase performance in each language / framework. For instance, in Kotlin one could use arrayOf() instead of listOf() which should be faster and increase throughput.

First of all thanks for your feedback! I will answer the questions in the same order as asked:

- I think the only Java source code used is the Thread.sleep call, since I couldn’t find another sleep function that works in combination with Kotlin and Spring Boot, but please correct me if there is a better way of doing this.

- Rust does not use garbage collection; the allocated memory is freed as soon as an object goes out of scope, which also explains the low memory footprint.

- I like this idea, maybe I can do a follow-up on this in the future.

next time, please add nodejs to the mix, if you don’t mind..

I will keep that in mind, thanks for the suggestion!

Regarding the Rust framework choice, I’d say you picked one of the less efficient ones. See here: https://www.techempower.com/benchmarks/#section=data-r21

Actix-web or axum are fairly easy to use and are far way more performant. Nice article!

Thanks for your suggestion and feedback! I will definitely have a look at the other frameworks in the future.

You used framework which isn’t maintained, I’m talking about Rocket. This can be easily seen on their website where it says „Latest Release: 0.5.0-rc.2 (May 09, 2022)“, and also on https://github.com/flosse/rust-web-framework-comparison where it is in the outdated table.

Thanks for the information. As I mentioned in my article, Rocket was the first framework I stumbled upon when I searched for a backend framework for Rust. Since it seemed very easy to use, I gave it a try. In future I will have a look at frameworks which are maintained.

Your reviews lack objectivity as they include some poor choices.

Thanks for your feedback! Could you tell me with which choices you don’t agree or what could have been done better?

„Adding Java would also be great. And also subject them to high workloads.“

I will keep that in mind for the next time. Thanks for your feedback!

Minor correction: Ctor should be spelled as Ktor

Great article and a conclusion I also had in many years of backend-development. All current frameworks and languages are great. It is hard to make a „wrong“ choice. Java/Kotlin might have the largest memory / cpu usage but your team might consist of Java developers. So it still would be a great choice for such a team.

And it is great to see that the current .NET versions are also up to the task.

Of course, as a service provider you might wanna have the lowest possible footprint to utilize as much as small VMs as possible – but for many application developers this could almost be considered an „edge case“ IMHO.

I’m also learning GO at the moment and think it is really refreshing 🙂

Thank you very much for your feedback! I really appreciate that 🙂

Nice article ! I would love to do everything in perl despite the market momentum leaning towards Python and Go.

The choice of language really is a problem as we want to learn one language that fits all the bills. In reality, this never happens. Strangely, whether anyone likes it or not, perl is the best language ever created. The productivity you get with perl is really awesome. The trade-off is performance, more memory usage and lack of new modules for certain tasks. (Chat-J and online converters can improve this). If you can live this trade-off, Mojolicious is the best web framework out there that can make you happy since you could develop any backend service within the shortest time. Also, your expression matters as you could represent a lot in least possible lines. If your team could follow some good coding practices, perl can be very rewarding. Scale up is needed only if you grow with time and there are many approaches to address scale up.

Thanks for the article,

Enjoy using rust memory management in blockchain dynasty.

They are all great. You can’t buy a machine with only 10MB of ram, you will have to get one with at least 500MB anyways, so all frameworks are good. I understand if you scale it even more, it starts to matter. But in most cases it’s worth having a proper framework like .net or spring instead of jumping through the hoops of golang or rust.