FONIC Improving Search Functions Using AI: Using a RAG Application to Respond to Customer Queries

For many years, Telefónica and inovex have been working together on the ongoing development of the customer portal for the FONIC mobile communications brand. This collaboration focuses on user satisfaction and on the continuous improvement of the portal in terms of both technology and user experience. By implementing innovative technologies such as generative AI, FONIC aims to provide its customers with a better experience and thus to position itself clearly in the market.

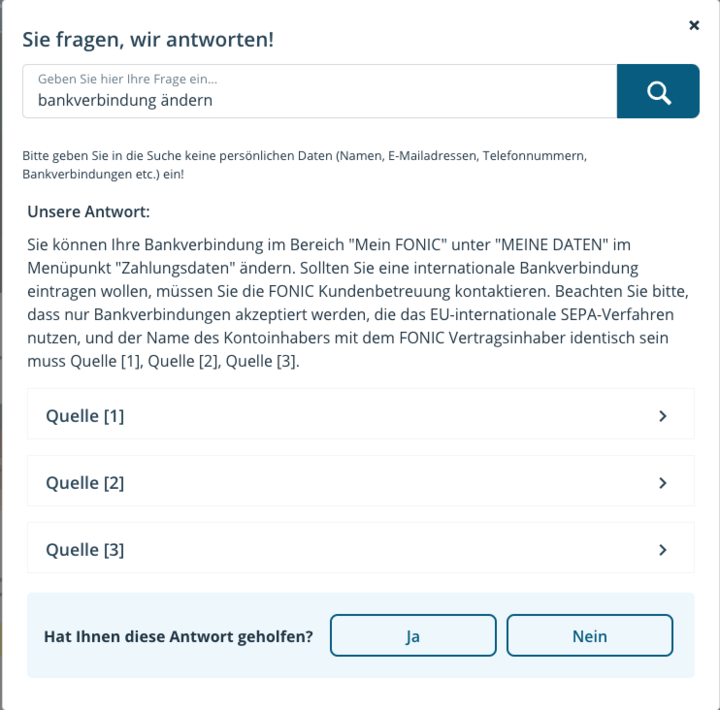

The company is therefore working with inovex to replace FONIC’s previous FAQ system with a RAG (Retrieval-Augmented Generation) solution. This enables users to enter their questions directly into a chat window and receive precisely tailored answers. As a result, customers achieve their search goals ten times faster. At the same time, the system recognises when the information needed for a response is missing, so that it can be added afterwards. Compared to the previous FAQ solution, the AI-supported RAG tool provides more precise responses, reduces user effort, and simplifies the maintenance and expansion of the stored information.

Motivation and Status Quo

Complex processes in mobile communications portals can raise questions for customers. The FONIC portal therefore provides an FAQ system that suggests answers and further questions based on the information entered by users. To do this, it draws on information stored in a tree structure, meaning that users reach the information they need via several sub-steps.

An extensive study of users’ interactions with the existing question-and-answer system revealed that users begin the process by entering short keywords or phrases. The system responds with suggestions in order to answer detailed questions. The data from these interactions showed that, on average, users need just over two interactions to receive a satisfactory response or to terminate the process.

As a result, around 4,000 customers per month receive no clear response. Many of these then contact customer support – an option which involves unnecessary extra work for both the customers and FONIC.

Identifying Information Sources

In order to simplify the existing processes and make them more user-friendly, inovex and FONIC have identified four different sources of information pertaining to FONIC’s products and processes.

The most obvious sources are the FAQ pages, which already provide question-and-answer information on many topics. Their FAQ format means that these documents form the perfect basis for a RAG application.

The second source is the process knowledge which is stored in the current system’s tree structure. It contains help information, instructions, and solutions to problems pertaining to the customer portal. In order to use this information for a RAG application, several processing steps were necessary. Tree traversal enabled all the possible interaction strings to be extracted. These were then summarised into core statements using GPT-4o and reformulated as instructions. The resulting documents form part of the RAG application’s knowledge base.
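The tree traversal step can be illustrated with a minimal sketch. It assumes a simplified, hypothetical node layout (a `text` field and a `children` list) and invented example content; the actual FONIC data model is not described here. Each root-to-leaf path corresponds to one possible interaction sequence, which could then be passed on for summarisation.

```python
from typing import Iterator

# Hypothetical FAQ tree: each node has a "text" and a list of "children".
# Structure and content are illustrative assumptions, not FONIC's real data.
faq_tree = {
    "text": "SIM card",
    "children": [
        {"text": "Activate SIM", "children": [
            {"text": "Enter the activation code in the portal", "children": []},
        ]},
        {"text": "Lost SIM", "children": [
            {"text": "Block the card", "children": []},
            {"text": "Order a replacement", "children": []},
        ]},
    ],
}


def interaction_paths(node: dict, prefix: tuple[str, ...] = ()) -> Iterator[list[str]]:
    """Yield every root-to-leaf path as a list of node texts (depth-first)."""
    path = prefix + (node["text"],)
    children = node.get("children", [])
    if not children:
        # A leaf marks the end of one complete interaction sequence.
        yield list(path)
        return
    for child in children:
        yield from interaction_paths(child, path)


paths = list(interaction_paths(faq_tree))
for p in paths:
    print(" > ".join(p))
```

In this sketch the tree with three leaves produces three paths, each of which would become one candidate document after summarisation.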

The third and fourth sources are SEO and information pages (HTML) and documents provided as PDF files, such as T&Cs and price lists.

Technical Implementation

FONIC’s aim was to respond to queries more quickly and accurately. GPT-4o proved to be the best model to meet these requirements, as it provides better responses than the company’s previous FAQ system in less than three seconds.

The RAG pipelines were implemented using the Haystack 2 framework. This framework was chosen because it already offers a large number of predefined interfaces to Azure, allowing services such as OpenAI models and Elasticsearch to be used. For the document search, a hybrid method was used which combines Haystack’s BM25 implementation with an embedding-based approach.
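How the two result lists are merged is not specified here. One common option is reciprocal rank fusion (RRF), shown below as a framework-independent sketch in plain Python. The document IDs and the constant `k = 60` are illustrative assumptions, and this is not necessarily the method used in the FONIC pipeline.

```python
from collections import defaultdict


def reciprocal_rank_fusion(rankings: list[list[str]], k: int = 60) -> list[str]:
    """Merge several ranked lists of document IDs into one ranking.

    Each document scores 1 / (k + rank) per list in which it appears;
    the scores are summed and the documents sorted by total score.
    """
    scores: dict[str, float] = defaultdict(float)
    for ranking in rankings:
        for rank, doc_id in enumerate(ranking, start=1):
            scores[doc_id] += 1.0 / (k + rank)
    # Sort by descending score; break ties by ID for a stable result.
    return sorted(scores, key=lambda d: (-scores[d], d))


# Hypothetical results from a keyword (BM25) and an embedding retriever.
bm25_ranking = ["faq_12", "faq_07", "pdf_03"]
embedding_ranking = ["faq_07", "html_21", "faq_12"]

fused = reciprocal_rank_fusion([bm25_ranking, embedding_ranking])
print(fused)
```

Documents that appear near the top of both lists, such as `faq_07` in this example, end up ahead of documents found by only one retriever.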

The user query, together with the best documents found, is ultimately added to a prompt template using Jinja2 and transferred to GPT-4o.
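The prompt assembly can be sketched with Jinja2 directly. The template wording, the `build_prompt` helper, and the sample documents are assumptions made for illustration; the actual FONIC prompt is not published here.

```python
from jinja2 import Template

# Illustrative template; the wording is an assumption, not the real prompt.
PROMPT_TEMPLATE = Template(
    "Answer the customer's question using only the documents below.\n"
    "If the documents do not contain the answer, say so.\n\n"
    "{% for doc in documents %}"
    "Document {{ loop.index }}: {{ doc }}\n"
    "{% endfor %}"
    "\nQuestion: {{ query }}\nAnswer:"
)


def build_prompt(query: str, documents: list[str]) -> str:
    """Render the user query and the retrieved documents into one prompt."""
    return PROMPT_TEMPLATE.render(query=query, documents=documents)


prompt = build_prompt(
    "How do I activate my SIM card?",
    [
        "Log in to the portal and enter the activation code.",
        "Activation can take a short time to complete.",
    ],
)
print(prompt)
```

The rendered string would then be sent to the language model as the final prompt.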

A/B Test Yields Positive Results

In order to measure the performance of the new solution, FONIC subjected both systems to A/B testing. The initial results showed that, on average, customers need ten times fewer interactions with the new system than with the old one to receive answers to their questions. Compared to those provided by the old system, the responses provided by the new GPT solution were rated as helpful by twice as many users.

At the same time, the new system allows the quality of the responses to be improved progressively. Evaluating the responses revealed that approximately 20 per cent of questions could not be answered due to missing information. This information was subsequently provided by adding new documents to Elasticsearch, thus further improving the response quality.

Compared to the old FAQ solution, the AI-supported RAG tool provides better responses, reduces user effort, and facilitates the maintenance and expansion of the stored information.
